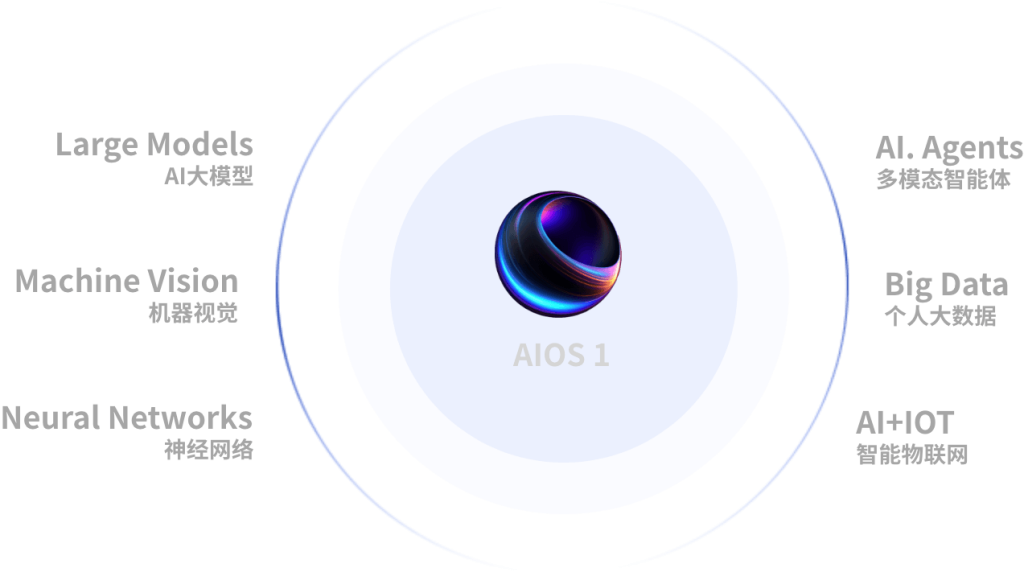

Rapid advances in artificial intelligence (AI) have led to the emergence of multimodal AI-agents, systems designed to process and understand information from multiple modalities such as text, images, and audio. This shift has significant implications across industries as intelligent automation becomes more deeply integrated into business processes. Hugging Face, a leading AI company, provides tools and frameworks that help developers and organizations build such systems. This article explores the trends, applications, and technical insights surrounding multimodal AI-agents and intelligent automation, and highlights the role of Hugging Face in shaping the future of AI.

**The Rise of Multimodal AI-Agents**

Multimodal AI-agents are systems that can analyze and interpret data from various sources simultaneously. Unlike traditional AI models, which typically focus on a single type of input, multimodal agents can handle diverse data types, enhancing their understanding and decision-making capabilities. For instance, a multimodal AI-agent could analyze a video, extract relevant audio, and interpret accompanying text to provide a comprehensive understanding of the content.

The rise of these agents is driven by the need for more sophisticated AI applications in areas such as customer service, healthcare, and content creation. By leveraging multiple data modalities, organizations can create more engaging and effective user experiences. For example, in customer service, a multimodal AI-agent could analyze a customer’s voice tone, facial expressions from video calls, and text inputs to provide more personalized and context-aware responses.

**Intelligent Automation: The Next Frontier**

Intelligent automation combines AI technologies with automation processes to enhance efficiency and decision-making. It allows organizations to streamline operations, reduce costs, and improve service delivery. The integration of multimodal AI-agents into intelligent automation frameworks can significantly enhance their capabilities.

For instance, in the manufacturing industry, intelligent automation systems equipped with multimodal AI-agents can monitor equipment performance using visual data from cameras, audio data from machinery sounds, and sensor data. This holistic approach enables predictive maintenance, reducing downtime and operational costs. Additionally, in sectors like finance, multimodal AI-agents can analyze market trends by processing news articles, social media sentiment, and numerical data simultaneously, leading to more informed investment decisions.
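
One simple way to combine signals like these is late fusion, where each modality produces its own anomaly score and the scores are merged afterwards. The sketch below is a minimal illustration under stated assumptions: the modality names, weights, and threshold are hypothetical, and a real system would obtain the per-modality scores from trained models rather than hard-coded values.

```python
def fuse_anomaly_scores(scores, weights):
    """Weighted average of per-modality anomaly scores (each assumed in [0, 1])."""
    total_weight = sum(weights[name] for name in scores)
    return sum(scores[name] * weights[name] for name in scores) / total_weight


def needs_maintenance(scores, weights, threshold=0.6):
    """Flag the machine when the fused score reaches a (hypothetical) threshold."""
    return fuse_anomaly_scores(scores, weights) >= threshold


# Hypothetical scores from three separate detectors for one machine.
scores = {"camera": 0.2, "audio": 0.9, "sensor": 0.8}
# Hypothetical weights: the sensor stream is trusted twice as much.
weights = {"camera": 1.0, "audio": 1.0, "sensor": 2.0}

print(fuse_anomaly_scores(scores, weights))
print(needs_maintenance(scores, weights))
```

Late fusion keeps each detector independent, so one modality can be retrained or replaced without touching the others. Approaches that learn a joint representation across modalities are an alternative when enough paired data is available.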

**Hugging Face: Pioneering Multimodal AI Development**

Hugging Face has emerged as a key player in the AI landscape, providing an open-source platform that simplifies the development of AI models, including multimodal agents. Its Transformers library has become widely used for its approachable API and large collection of pre-trained models, enabling developers to build and deploy AI solutions quickly.

One of the standout features of Hugging Face is its focus on community-driven development. The platform encourages collaboration among researchers and developers, fostering innovation in the field of multimodal AI. By providing access to a wide range of datasets and models, Hugging Face empowers users to experiment with different architectures and approaches, accelerating the development of multimodal AI-agents.

Moreover, Hugging Face’s integration with popular machine learning frameworks such as TensorFlow and PyTorch allows developers to leverage existing tools while building multimodal applications. This flexibility is crucial for organizations looking to implement intelligent automation solutions that require seamless integration with their existing infrastructure.

**Trends in Multimodal AI-Agents and Intelligent Automation**

As the field of multimodal AI continues to evolve, several trends are shaping its development and application. One notable trend is the increasing emphasis on explainability and transparency in AI systems. As organizations deploy multimodal AI-agents in critical areas, understanding how these systems make decisions becomes paramount. Researchers are actively working on techniques to enhance the interpretability of multimodal models, ensuring that stakeholders can trust and understand the AI’s reasoning.

Another trend is the growing focus on ethical AI. As multimodal AI-agents become more prevalent, concerns about bias and fairness in AI systems have come to the forefront. Organizations are recognizing the importance of developing AI solutions that are not only effective but also ethical and inclusive. Hugging Face is taking steps to address these concerns by promoting responsible AI practices and providing tools for bias detection and mitigation.

Additionally, the integration of multimodal AI-agents with edge computing is gaining traction. Edge computing allows data processing to occur closer to the source, reducing latency and bandwidth usage. This is particularly beneficial for applications in industries such as healthcare and autonomous vehicles, where real-time decision-making is critical. By deploying multimodal AI-agents on edge devices, organizations can achieve faster response times and enhance the overall user experience.

**Industry Applications of Multimodal AI-Agents**

The applications of multimodal AI-agents span various industries, each benefiting from the enhanced capabilities these systems offer. In healthcare, for instance, multimodal AI can assist in diagnosing medical conditions by analyzing medical images, patient records, and clinical notes simultaneously. This comprehensive analysis can lead to more accurate diagnoses and personalized treatment plans.

In the entertainment industry, multimodal AI-agents are revolutionizing content creation. These agents can analyze audience preferences by processing feedback from social media, reviews, and viewing patterns, enabling creators to produce content that resonates with their target audience. Furthermore, in gaming, multimodal AI can enhance player experiences by adapting gameplay based on player behavior, voice commands, and in-game actions.

The retail sector is also leveraging multimodal AI-agents to enhance customer experiences. By analyzing customer interactions across various channels—such as social media, in-store visits, and online shopping—retailers can gain insights into consumer behavior, enabling them to tailor marketing strategies and improve customer service.

**Technical Insights: Building Multimodal AI-Agents with Hugging Face**

Developing multimodal AI-agents requires a solid understanding of various machine learning techniques and architectures. Hugging Face provides a range of tools and resources that facilitate this process. One key aspect is the use of transformer models, which have proven effective in handling different data modalities.

For example, models like CLIP (Contrastive Language-Image Pre-training) embed text and images in a shared representation space, allowing for tasks such as zero-shot image classification and image-text retrieval. Generative tasks such as image captioning and visual question answering usually call for models that pair a vision encoder with a text decoder. Hugging Face’s implementation of CLIP provides developers with a straightforward way to incorporate this technology into their applications.
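
The scoring step behind CLIP can be sketched in a few lines. The first function below is a toy illustration on hand-written vectors: it normalizes the embeddings, takes scaled cosine similarities, and applies a softmax over the candidate texts. The second function shows how the same scores might be obtained from a pre-trained checkpoint through the `CLIPModel` and `CLIPProcessor` classes in Transformers. The function names, the toy embeddings, the fixed `logit_scale` of 100, and the choice of the `openai/clip-vit-base-patch32` checkpoint are assumptions made for illustration.

```python
import math


def contrastive_probs(image_emb, text_embs, logit_scale=100.0):
    """Softmax over scaled cosine similarities between one image and N texts."""
    def normalize(vec):
        norm = math.sqrt(sum(x * x for x in vec))
        return [x / norm for x in vec]

    img = normalize(image_emb)
    logits = [
        logit_scale * sum(a * b for a, b in zip(img, normalize(txt)))
        for txt in text_embs
    ]
    # Subtract the max logit for numerical stability before exponentiating.
    top = max(logits)
    exps = [math.exp(value - top) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def clip_label_probs(image, labels, checkpoint="openai/clip-vit-base-patch32"):
    """Score candidate text labels for a PIL image with a pre-trained CLIP model."""
    # Imported lazily so the toy function above runs without these packages.
    from transformers import CLIPModel, CLIPProcessor

    model = CLIPModel.from_pretrained(checkpoint)
    processor = CLIPProcessor.from_pretrained(checkpoint)
    inputs = processor(text=labels, images=image, return_tensors="pt", padding=True)
    outputs = model(**inputs)
    # logits_per_image has shape (num_images, num_labels).
    return outputs.logits_per_image.softmax(dim=1)[0].detach().tolist()


# Toy 2-D embeddings: the image points the same way as the first text.
print(contrastive_probs([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]))
```

Calling `clip_label_probs` downloads the checkpoint on first use, so it needs network access along with the `transformers`, `torch`, and `Pillow` packages.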

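Another important consideration is data preprocessing. Multimodal AI-agents require careful handling of diverse data types to ensure optimal performance: text must be tokenized and padded, images resized and normalized, and audio converted into model-ready features. Hugging Face offers utilities for these steps, such as the tokenizers, image processors, and feature extractors in Transformers and the transformation functions in the Datasets library, enabling developers to prepare their datasets effectively.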
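
To make the preprocessing step concrete, here is a minimal, library-free sketch of what batching a text-and-image example involves: padding token ID sequences to a common length with an attention mask, and scaling pixel values before normalizing them. The field names (`token_ids`, `pixels`), the padding ID of 0, and the mean and standard deviation of 0.5 are illustrative assumptions, not values taken from any particular model.

```python
def pad_token_ids(batch, pad_id=0):
    """Right-pad token ID lists to the longest length and build attention masks."""
    max_len = max(len(ids) for ids in batch)
    input_ids = [ids + [pad_id] * (max_len - len(ids)) for ids in batch]
    attention_mask = [[1] * len(ids) + [0] * (max_len - len(ids)) for ids in batch]
    return input_ids, attention_mask


def normalize_pixels(pixels, mean=0.5, std=0.5):
    """Scale 0-255 pixel values to [0, 1], then standardize with mean and std."""
    return [((p / 255.0) - mean) / std for p in pixels]


def collate(examples):
    """Combine per-example text and image fields into one model-ready batch."""
    input_ids, attention_mask = pad_token_ids([ex["token_ids"] for ex in examples])
    pixel_values = [normalize_pixels(ex["pixels"]) for ex in examples]
    return {
        "input_ids": input_ids,
        "attention_mask": attention_mask,
        "pixel_values": pixel_values,
    }


# Two hypothetical examples with already-tokenized text and flattened pixels.
batch = collate([
    {"token_ids": [5, 6, 7], "pixels": [0, 255]},
    {"token_ids": [8], "pixels": [255, 0]},
])
print(batch)
```

In practice a model's own processor would supply the padding ID and normalization statistics, since these need to match what the model saw during pre-training.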

Furthermore, training multimodal models often requires substantial computational resources. Hugging Face’s integration with cloud platforms allows developers to leverage scalable infrastructure, making it easier to train and fine-tune models on large datasets.

**Conclusion: Embracing the Future of AI with Multimodal Agents and Intelligent Automation**

The emergence of multimodal AI-agents represents a significant leap forward in the capabilities of artificial intelligence. As these systems become more sophisticated, their applications across industries will continue to expand, driving innovation and efficiency. Hugging Face plays a pivotal role in this evolution, providing the tools and resources necessary for developers to build intelligent automation solutions that harness the power of multimodal AI.

As organizations embrace these advancements, they must also prioritize ethical considerations and transparency in AI development. By doing so, they can ensure that the benefits of multimodal AI-agents are realized while minimizing potential risks. The future of AI is bright, and with the continued collaboration between industry leaders, researchers, and developers, we can expect to see remarkable advancements in the years to come.
