Introduction: what an AI virtual office space really is

An AI virtual office space is more than a 3D room with avatars. It’s an integrated workspace where conversational agents, automated workflows, and model-driven services work alongside humans to get things done. For a beginner, imagine a persistent online office where an intelligent assistant triages your messages, summarizes meetings, routes documents to the right team members, and triggers downstream automation — all without you manually copying information between tools.

This article is a practical guide that walks three audiences — beginners, developers, and product leaders — through the concrete systems, trade-offs, and operational patterns needed to build and adopt an AI virtual office space. We’ll discuss architectures, orchestration layers, integrations, vendor options, cost and ROI metrics, and governance practices. Throughout, I reference familiar platforms and frameworks so you can map ideas to real tools.

Why this matters now

Teams are distributed; information is fragmented across chat, email, ticketing, and cloud drives. AI-powered automation can reduce repetitive work and surface insights faster. An AI virtual office space stitches those islands into an “intelligent digital ecosystem” where context flows with less friction. The result is faster decisions, fewer missed follow-ups, and measurable productivity gains — but only when the underlying systems are designed for reliability, observability, and compliance.

Beginner’s tour: everyday scenarios

- New hire onboarding: an assistant monitors an onboarding checklist, schedules meetings, pre-fills HR forms, and escalates missing items automatically.

- Meeting management: live transcription, action-item extraction, and automatic task creation in a project tracker after the meeting ends.

- Customer escalation: chat logs are summarized, root-cause detection runs, and corrective workflows are triggered without manual copy-paste.

These scenarios show why tight integration of conversational AI, workflow orchestration, and RPA-style connectors is the core of a functioning AI virtual office space.

Architectural teardown for engineers

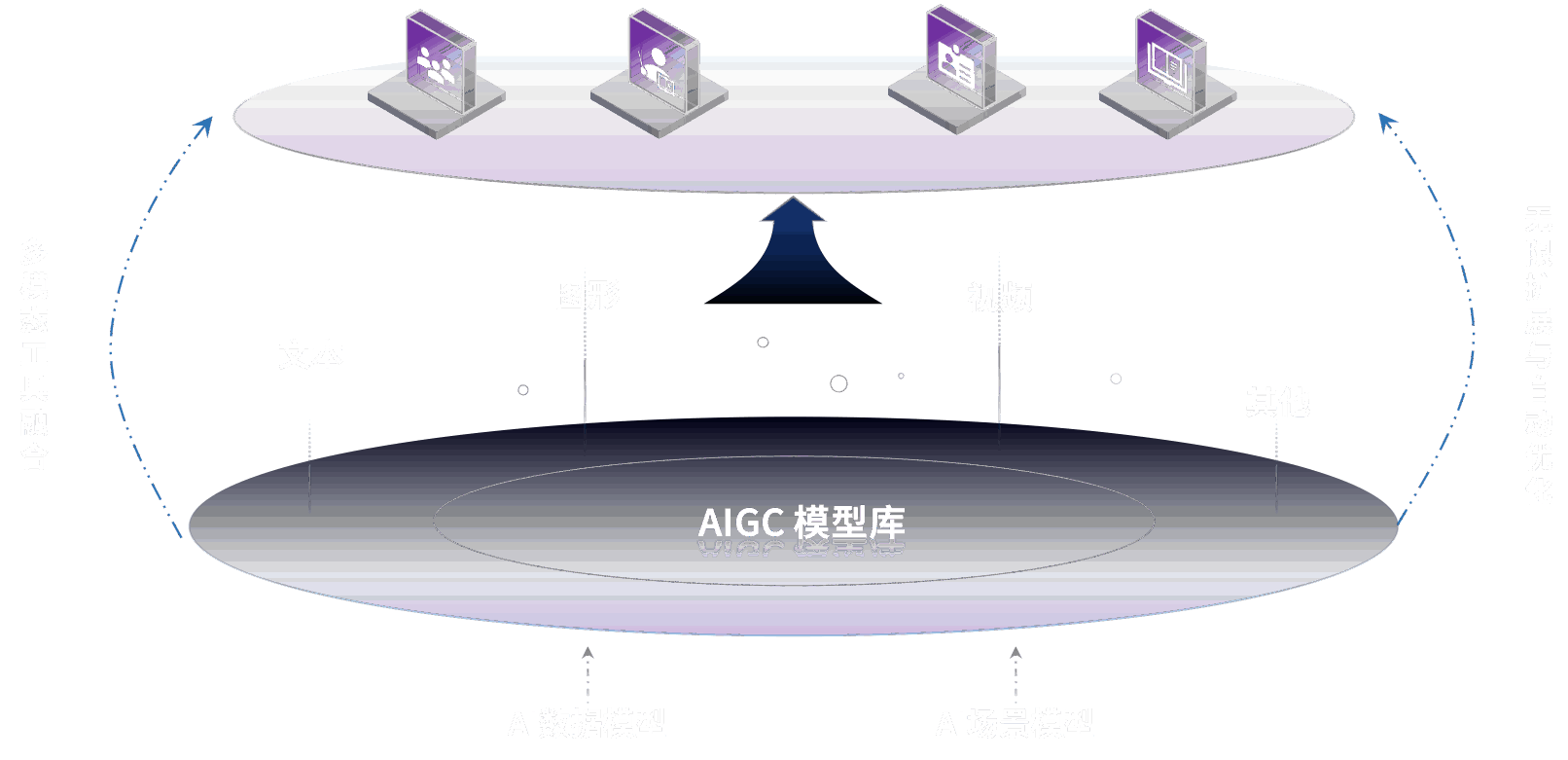

At a systems level, an AI virtual office space typically decomposes into these layers:

- Client and UX layer: web or native clients, spatial/3D interfaces when needed, presence and collaboration features. Real-time protocols (WebRTC, WebSockets) are common.

- Interaction / orchestration layer: agents and workflow engines that decide what to do next. This is where choreographed flows or agent-based architectures (e.g., single-purpose micro-agents vs. monolithic assistants) live.

- Model serving and inference: hosted models or third-party APIs, possibly with local acceleration. Choices include managed model services (e.g., SageMaker, Vertex AI), open-source stacks (Ray Serve, BentoML), or edge execution.

- Integration and connectors: adapters to calendars, CRMs, tickets, document stores, Slack/Teams, and RPA bots for legacy apps.

- Data plane and storage: context stores, vector databases for semantic search (e.g., Pinecone, Milvus), secure blob stores, and event logs.

- Security, governance, and observability: IAM, audit trails, model versioning and testing, metrics, tracing, and policy enforcement.
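The data plane's semantic search can be illustrated with a toy, in-memory stand-in for a vector database. This is a minimal sketch and does not show how Pinecone or Milvus work internally. The class name `InMemoryVectorIndex` and the three-dimensional example vectors are hypothetical. Real systems store learned embeddings with hundreds of dimensions and use approximate nearest-neighbor indexes instead of the brute-force scan shown here.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorIndex:
    """Hypothetical toy vector store: exact (brute-force) top-k cosine search."""

    def __init__(self, dim: int) -> None:
        self.dim = dim
        self._vectors: dict[str, list[float]] = {}

    def upsert(self, doc_id: str, vector: list[float]) -> None:
        # Insert or overwrite the vector stored for doc_id.
        if len(vector) != self.dim:
            raise ValueError(f"expected {self.dim} dimensions, got {len(vector)}")
        self._vectors[doc_id] = vector

    def query(self, vector: list[float], top_k: int = 3) -> list[tuple[str, float]]:
        # Score every stored vector, then return the top_k most similar.
        scored = [(doc_id, cosine_similarity(vector, v)) for doc_id, v in self._vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


# Usage: toy 3-d "embeddings" for three workspace documents.
index = InMemoryVectorIndex(dim=3)
index.upsert("onboarding-checklist", [0.9, 0.1, 0.0])
index.upsert("expense-policy", [0.1, 0.9, 0.0])
index.upsert("meeting-notes", [0.7, 0.2, 0.1])
print(index.query([1.0, 0.0, 0.0], top_k=2))
```

In a real deployment, the query vector would come from the same embedding model that produced the stored vectors. Mixing embedding models makes the similarity scores meaningless.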

Patterns: event-driven vs. synchronous

Two common integration patterns are event-driven automation and synchronous request-response. Event-driven automation suits background processes (e.g., summarization, follow-ups), while synchronous patterns serve interactive tasks that require low latency (e.g., live chat responses). For many systems, a hybrid approach works best: synchronous flows for the interactive UX and an event bus to handle asynchronous workflows and retries.
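The hybrid pattern can be sketched with Python's standard `asyncio` library. The interactive handler replies immediately and publishes slow work to an in-process queue. A background worker consumes that queue and retries failures with exponential backoff. This is a minimal sketch under stated assumptions. An `asyncio.Queue` stands in for a real event bus (Kafka, SQS, and similar). The names `handle_chat_message`, `worker`, `Event`, and `MAX_ATTEMPTS` are hypothetical, and the "model calls" are placeholders.

```python
import asyncio
from dataclasses import dataclass, field

MAX_ATTEMPTS = 3  # assumption: retry budget per background event


@dataclass
class Event:
    kind: str
    payload: dict = field(default_factory=dict)
    attempts: int = 0


async def handle_chat_message(text: str, bus: asyncio.Queue) -> str:
    """Interactive path: reply immediately, defer slow work to the event bus."""
    reply = f"Noted: {text}"  # placeholder for a fast, low-latency model call
    await bus.put(Event("summarize_thread", {"text": text}))
    return reply


async def worker(bus, handlers, completed, dead_letters):
    """Background path: consume events, retrying failures with exponential backoff."""
    while True:
        event = await bus.get()
        try:
            await handlers[event.kind](event.payload)
            completed.append(event)
        except Exception:
            event.attempts += 1
            if event.attempts < MAX_ATTEMPTS:
                await asyncio.sleep(0.01 * 2 ** event.attempts)  # back off, then requeue
                await bus.put(event)
            else:
                dead_letters.append(event)  # park for human inspection
        finally:
            bus.task_done()


async def demo():
    bus: asyncio.Queue = asyncio.Queue()
    completed, dead_letters = [], []
    calls = {"n": 0}

    async def flaky_summarize(payload):
        # Simulated model call that fails once with a transient error.
        calls["n"] += 1
        if calls["n"] == 1:
            raise RuntimeError("transient model timeout")
        payload["summary"] = payload["text"][:30]

    task = asyncio.create_task(
        worker(bus, {"summarize_thread": flaky_summarize}, completed, dead_letters)
    )
    reply = await handle_chat_message("Follow up with the client about the contract", bus)
    await bus.join()  # wait until background work (including retries) settles
    task.cancel()
    try:
        await task
    except asyncio.CancelledError:
        pass
    return reply, completed, dead_letters, calls["n"]


reply, completed, dead_letters, attempts = asyncio.run(demo())
print(reply, len(completed), len(dead_letters), attempts)
```

The user gets the reply without waiting for summarization. A transient failure costs one retry rather than a lost follow-up. Events that exhaust their retries land in a dead-letter list instead of being silently dropped.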

Orchestration choices and trade-offs

Workflow engines like Temporal or Prefect provide durable, fault-tolerant orchestration ideal for long-running processes. For simpler routing, webhook-based broker systems or serverless functions suffice. Agent frameworks (LangChain, Semantic Kernel) help compose model calls with external actions, but they introduce complexity around state management and retries. Decide based on:

- Durability requirements: do tasks need guaranteed execution despite restarts?

- Latency targets: is sub-second response necessary?

- Observability needs: can you trace a user’s action end-to-end?
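To make the durability question concrete, here is a minimal sketch of the core guarantee a durable engine provides. Each completed step is checkpointed, so a restarted process resumes instead of repeating side effects such as sending a welcome email twice. This is not Temporal's or Prefect's API. `CheckpointedWorkflow`, the JSON-file store, and the step names are hypothetical. A real engine also handles timers, concurrency, and history replay.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Callable


class CheckpointedWorkflow:
    """Hypothetical durable runner: persists each step's (JSON-serializable) result."""

    def __init__(self, state_file: Path) -> None:
        self.state_file = state_file
        # Reload prior progress if this workflow ran before a crash or restart.
        self.state: dict[str, Any] = (
            json.loads(state_file.read_text()) if state_file.exists() else {}
        )

    def step(self, name: str, fn: Callable[[], Any]) -> Any:
        if name in self.state:
            return self.state[name]  # completed earlier: skip the side effect
        result = fn()
        self.state[name] = result
        # Write to a temp file, then rename it over the old checkpoint, so a crash
        # mid-write does not leave a half-written state file behind.
        tmp = self.state_file.with_suffix(".tmp")
        tmp.write_text(json.dumps(self.state))
        tmp.replace(self.state_file)
        return result


side_effects: list[str] = []


def send_welcome_email() -> str:
    side_effects.append("email")
    return "email-sent"


def provision_accounts() -> str:
    side_effects.append("accounts")
    return "accounts-created"


with tempfile.TemporaryDirectory() as tmp_dir:
    checkpoint = Path(tmp_dir) / "onboarding-42.json"
    CheckpointedWorkflow(checkpoint).step("welcome_email", send_welcome_email)
    # Simulate a restart: a fresh runner reloads progress from disk.
    resumed = CheckpointedWorkflow(checkpoint)
    resumed.step("welcome_email", send_welcome_email)  # skipped
    resumed.step("provision_accounts", provision_accounts)
    final_state = dict(resumed.state)

print(side_effects, final_state)
```

A crash between running a step and writing its checkpoint would still re-run that step. This gives at-least-once semantics, which is why the actions themselves should also be idempotent.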

OS-level AI computation integration

One emerging pattern is to use device- and OS-level capabilities for AI computation to reduce latency and preserve privacy. OS-level AI computation integration means running inference close to the user (on the laptop, phone, or an edge device) while still maintaining a centralized orchestration plane. This enables offline availability, lowers latency in live interactions, and reduces cloud costs for some workloads.

Considerations include managing model updates, ensuring consistent behavior across clients, and securing local model assets. Techniques such as encrypted model bundles, policy-managed model updates, and fallback to cloud inference are typical. Platforms like Apple’s Core ML, Android’s NNAPI, and emerging frameworks for WebAssembly inference make this pattern practical for certain tasks (short summaries, on-device entity redaction, local embeddings).
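The fallback-to-cloud technique can be sketched as a small router. It tries an on-device model first and calls the cloud only when the local model is missing, fails, or gets an input that is too large. Text leaving the device is redacted first. This is a minimal sketch under stated assumptions. `route_inference`, `redact`, and the `max_local_chars` threshold are hypothetical. The models are plain Python callables, not Core ML or NNAPI bindings. The email regex is a crude stand-in for real on-device entity redaction.

```python
import re
from typing import Callable, Optional

# Rough email pattern used as a stand-in for on-device entity redaction.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text: str) -> str:
    """Mask email addresses before text leaves the device."""
    return EMAIL_PATTERN.sub("[EMAIL]", text)


def route_inference(
    prompt: str,
    local_model: Optional[Callable[[str], str]],
    cloud_model: Callable[[str], str],
    max_local_chars: int = 2000,
) -> tuple[str, str]:
    """Try the on-device model first; fall back to the cloud. Returns (output, where)."""
    if local_model is not None and len(prompt) <= max_local_chars:
        try:
            return local_model(prompt), "local"
        except Exception:
            pass  # e.g. model bundle missing or out of memory: use the cloud instead
    return cloud_model(redact(prompt)), "cloud"


def missing_local_model(prompt: str) -> str:
    raise RuntimeError("local model bundle not installed")


sent_to_cloud: list[str] = []


def fake_cloud_model(prompt: str) -> str:
    sent_to_cloud.append(prompt)
    return "summary from cloud"


print(route_inference("Email ana@example.com the contract", missing_local_model, fake_cloud_model))
print(route_inference("short note", str.upper, fake_cloud_model))
print(sent_to_cloud)
```

Redaction is applied only on the cloud path. Text handled locally never leaves the device, so it does not need masking.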

Integration patterns and API design

APIs for an AI virtual office space should be designed for composability and observability. Typical API surfaces include:

- Context API: read/write access to user context, conversation state, and semantic memory.

- Action API: safe primitives that trigger external actions (create task, send email), where actions are authorized and auditable.

- Model API: templated calls for generation, summarization, embedding, and classification, with consistent timeout and retry policies.

- Webhook/event API: subscription to lifecycle events (meeting ended, document uploaded) for downstream workflows.

Design your APIs with idempotency, versioning, and clear error semantics. Include correlation IDs to trace a user flow across systems and instrument endpoints with latency and error metrics.
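Here is a minimal sketch of how an Action API endpoint might combine authorization, idempotency keys, correlation IDs, and an audit entry. `ActionAPI`, `ActionResult`, the permission map, and the key format are hypothetical. An in-memory dict replaces the durable store a production service would need.

```python
import uuid
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class ActionResult:
    action_id: str
    action: str
    correlation_id: str


class ActionAPI:
    """Hypothetical Action API: authorized, idempotent, and traceable side effects."""

    def __init__(self, permissions: dict[str, set[str]]) -> None:
        self._permissions = permissions  # principal -> allowed action types
        self._results: dict[tuple[str, str], ActionResult] = {}
        self.audit_log: list[dict[str, Any]] = []

    def execute(
        self,
        principal: str,
        action: str,
        params: dict[str, Any],
        idempotency_key: str,
        correlation_id: Optional[str] = None,
    ) -> ActionResult:
        # Propagate the caller's correlation ID, or start a new trace.
        correlation_id = correlation_id or str(uuid.uuid4())
        if action not in self._permissions.get(principal, set()):
            raise PermissionError(f"{principal!r} is not allowed to {action!r}")
        key = (principal, idempotency_key)
        if key in self._results:
            return self._results[key]  # client retry: return the original result
        result = ActionResult(str(uuid.uuid4()), action, correlation_id)
        # ... the real side effect (create task, send email) would happen here ...
        self._results[key] = result
        self.audit_log.append(
            {"principal": principal, "action": action, "params": params,
             "action_id": result.action_id, "correlation_id": correlation_id}
        )
        return result


api = ActionAPI({"meeting-assistant": {"create_task"}})
first = api.execute("meeting-assistant", "create_task", {"title": "Send NDA"},
                    idempotency_key="mtg-17-item-3", correlation_id="req-abc")
retry = api.execute("meeting-assistant", "create_task", {"title": "Send NDA"},
                    idempotency_key="mtg-17-item-3", correlation_id="req-abc")
print(first == retry, len(api.audit_log))
```

Scoping keys per principal stops two callers from colliding on the same key. A production version would persist keys with a TTL and reject a reused key whose parameters differ from the original request.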

Deployment and scaling concerns

Scaling an AI virtual office space involves both infrastructure for web real-time features and compute for model serving. Key points:

- Autoscale inference independently: separate inference clusters by model size and SLA. Reserve GPU instances for heavy models and use CPU or quantized models for lightweight tasks.

- Cache embeddings and summaries: avoid repeated model calls by caching semantic vectors and computed summaries for active sessions.

- Backpressure and rate limits: implement graceful degradation for spikes, queuing for non-critical tasks, and fair-share policies to prevent noisy users from exhausting quota.

- Cost-aware routing: route low-value tasks to cheaper endpoints (local models or smaller models) and reserve large models for high-value actions.
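Cost-aware routing and caching can be sketched together. Low-value task types go to a cheap model and listed high-value types go to a large one. A bounded LRU cache then absorbs repeated requests within a session. This is a minimal sketch under stated assumptions. `CostAwareRouter`, the task-type names, and the cache size are hypothetical, and the "models" are plain callables.

```python
from collections import OrderedDict
from typing import Callable


class CostAwareRouter:
    """Hypothetical router: cheap model by default, large model for high-value tasks,
    with an LRU cache so repeated requests in a session skip the model call."""

    def __init__(
        self,
        small_model: Callable[[str], str],
        large_model: Callable[[str], str],
        high_value_tasks: set[str],
        max_cache_entries: int = 1024,
    ) -> None:
        self._models = {"small": small_model, "large": large_model}
        self._high_value_tasks = high_value_tasks
        self._cache: "OrderedDict[tuple[str, str, str], str]" = OrderedDict()
        self._max_entries = max_cache_entries
        self.calls = {"small": 0, "large": 0}  # model invocations per tier

    def run(self, task_type: str, text: str) -> str:
        tier = "large" if task_type in self._high_value_tasks else "small"
        key = (tier, task_type, text)
        if key in self._cache:
            self._cache.move_to_end(key)  # mark as most recently used
            return self._cache[key]
        self.calls[tier] += 1
        result = self._models[tier](text)
        self._cache[key] = result
        if len(self._cache) > self._max_entries:
            self._cache.popitem(last=False)  # evict the least recently used entry
        return result


router = CostAwareRouter(
    small_model=lambda text: f"tags for: {text}",
    large_model=lambda text: f"contract review of: {text}",
    high_value_tasks={"contract_review"},
    max_cache_entries=2,
)
router.run("tag_email", "Invoice #88")
router.run("tag_email", "Invoice #88")  # served from the cache, no model call
router.run("contract_review", "MSA v3")
print(router.calls)
```

Caching only pays off for deterministic or acceptable-to-reuse outputs. Cached results should also be scoped per user or session when they contain private context.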

Observability and reliability

Operational signals that matter:

- End-to-end latency and P95/P99 response times for interactive features.

- Task throughput and queue depth for background workflows.

- Model-specific metrics: token counts, inference time, error rates, and failure causes.

- Business metrics: actions completed per user per day, time-to-resolution, onboarding completion rates.

Implement distributed tracing to debug cross-service flows, and keep an audit trail of automated actions for compliance and rollback. Also monitor drift in model outputs to detect performance degradation or dataset shifts.
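As a worked example of the P95/P99 signals, here is a nearest-rank percentile and a small SLO check. Nearest-rank is one of several percentile conventions. Monitoring systems often estimate percentiles from histograms instead, so their numbers can differ slightly. The function names, latency samples, and targets are hypothetical.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least p% of
    all samples are less than or equal to it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    rank = min(max(rank, 1), len(ordered))     # guard against float edge cases
    return ordered[rank - 1]


def slo_report(latencies_ms: list[float], p95_target_ms: float, p99_target_ms: float) -> dict:
    # Compare tail latencies of an interactive feature against its targets.
    p95 = percentile(latencies_ms, 95)
    p99 = percentile(latencies_ms, 99)
    return {"p95_ms": p95, "p99_ms": p99,
            "p95_ok": p95 <= p95_target_ms, "p99_ok": p99 <= p99_target_ms}


# Hypothetical latencies for an interactive feature: 1 ms, 2 ms, ..., 100 ms.
print(slo_report([float(ms) for ms in range(1, 101)], p95_target_ms=100, p99_target_ms=90))
```

With 100 samples, the 95th percentile is the 95th smallest value, so here P95 meets its target while P99 (99 ms) misses a 90 ms target. Averages hide exactly this kind of tail behavior.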

Security, privacy, and governance

Security and governance are non-negotiable. Recommendations:

- Least privilege access: fine-grained IAM for connectors and action APIs.

- Data residency and privacy controls: ensure that where data is stored, and how it is used for model training, comply with applicable regulations (e.g., GDPR, CCPA).

- Human-in-the-loop gates: require explicit approval for high-risk actions like financial transfers or personnel changes.

- Model governance: version control models, keep test suites for behavioral expectations, and maintain explainability artifacts.

- Logging and auditability: immutable logs for automated actions, searchable for incident response.
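Two of these recommendations can be sketched together: a human-in-the-loop gate for high-risk actions, and an append-only audit log that is tamper-evident because each entry commits to the hash of the previous one. This is a minimal sketch, not a compliance-grade implementation. `HIGH_RISK_ACTIONS`, `perform_action`, and `HashChainedAuditLog` are hypothetical names. The approver is a plain string where a real system would verify the approver's identity and authority.

```python
import hashlib
import json
from typing import Any, Optional

# Hypothetical policy: action types that always need a named human approver.
HIGH_RISK_ACTIONS = {"transfer_funds", "change_personnel_record"}
GENESIS_HASH = "0" * 64


def _entry_hash(prev_hash: str, record: dict[str, Any]) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    body = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


class HashChainedAuditLog:
    """Append-only log where each entry commits to the previous entry's hash,
    so editing or deleting an earlier entry makes verify() fail."""

    def __init__(self) -> None:
        self.entries: list[dict[str, Any]] = []

    def append(self, record: dict[str, Any]) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        self.entries.append({"prev": prev, "record": record, "hash": _entry_hash(prev, record)})

    def verify(self) -> bool:
        prev = GENESIS_HASH
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != _entry_hash(prev, entry["record"]):
                return False
            prev = entry["hash"]
        return True


def perform_action(action: str, params: dict[str, Any], log: HashChainedAuditLog,
                   approved_by: Optional[str] = None) -> str:
    """Human-in-the-loop gate: high-risk actions wait for an explicit approver."""
    if action in HIGH_RISK_ACTIONS and approved_by is None:
        log.append({"action": action, "params": params, "status": "pending_approval"})
        return "pending_approval"
    # ... the real side effect would run here ...
    log.append({"action": action, "params": params,
                "approved_by": approved_by, "status": "executed"})
    return "executed"


log = HashChainedAuditLog()
print(perform_action("transfer_funds", {"amount_eur": 500}, log))
print(perform_action("transfer_funds", {"amount_eur": 500}, log, approved_by="finance-lead"))
print(perform_action("create_task", {"title": "Send NDA"}, log))
print(log.verify())
```

The chain detects edits to, or removal of, entries before the latest one. Silently truncating the tail is only caught if the most recent hash is also anchored somewhere outside the log, such as a separate write-once store.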

Product and market perspective

From a product standpoint, the value of an AI virtual office space is measured in saved time, reduced error rates, and improved responsiveness. Typical ROI sources include:

- Automation of routine workflows (e.g., expense approvals, onboarding) that frees knowledge workers.

- Faster time-to-decision via summarized context and prioritized action items.

- Reduction in operational errors by automating handoffs between systems.

Vendor landscape: established collaboration platforms (Microsoft Teams with Copilot, Slack integrations), spatial platforms (Gather.town, Virbela), and specialized automation vendors (UiPath, Automation Anywhere) all aim at parts of this space. For model orchestration and MLOps, tools like SageMaker, Vertex AI, BentoML, Ray, and Temporal play roles in productionizing models and workflows. Choose vendors based on integration needs, compliance posture, and whether you prefer a managed or self-hosted approach.

Realistic case study

Consider a mid-size professional services firm that built an AI virtual office space to streamline client onboarding. They combined a lightweight web front-end for meetings, a Temporal-based orchestration engine for onboarding tasks, vector search for knowledge retrieval (Milvus), and a mix of cloud inference for document summarization with small on-device models for local checks. In this scenario, the project cut time to first billable work by 40% and manual handoffs by 60%.

Key operational lessons: start with high-impact, low-risk workflows; instrument end-to-end metrics early; and stage model complexity — use smaller models first and introduce larger, more costly models only where they demonstrably improve outcomes.

Risks and failure modes

Common pitfalls:

- Overautomation: automating tasks without proper human oversight can cause incorrect actions to propagate quickly.

- Context loss: failure to maintain consistent user context across tools leads to inappropriate suggestions or duplicate work.

- Cost overruns: unbounded model calls or poor caching strategies can inflate cloud bills rapidly.

- Regulatory exposure: poorly controlled data flows can breach compliance boundaries.
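One guard against the cost-overrun failure mode is a hard per-session budget on model calls. The loop is refused before it spends more, rather than discovered on the next invoice. This is a minimal sketch under stated assumptions. `ModelCallBudget`, `BudgetExceeded`, and `estimate_tokens` are hypothetical. The four-characters-per-token estimate is only a rough heuristic, and only prompt tokens are counted, not generated output.

```python
from typing import Callable


class BudgetExceeded(RuntimeError):
    """Raised instead of making a model call that would overrun the budget."""


def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token for English text.
    # Swap in the provider's real tokenizer for accurate accounting.
    return max(1, len(text) // 4)


class ModelCallBudget:
    """Hypothetical per-session cap on estimated prompt tokens sent to models."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.used_tokens = 0

    def call(self, model: Callable[[str], str], prompt: str) -> str:
        cost = estimate_tokens(prompt)
        if self.used_tokens + cost > self.max_tokens:
            remaining = self.max_tokens - self.used_tokens
            raise BudgetExceeded(f"call needs {cost} tokens, {remaining} left")
        self.used_tokens += cost  # charge before calling so retries count too
        return model(prompt)


budget = ModelCallBudget(max_tokens=10)
echo = lambda prompt: prompt[:5]
budget.call(echo, "x" * 16)  # 4 tokens used
budget.call(echo, "x" * 16)  # 8 tokens used
try:
    budget.call(echo, "x" * 16)  # would reach 12 > 10, so it is refused
except BudgetExceeded as err:
    print("blocked:", err)
```

Refusing the call, instead of silently degrading, gives the orchestration layer a clear signal to escalate to a human or route to a cheaper model.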

Future outlook

Expect tighter integration between workplace platforms and model orchestration. OS-level AI computation integration will permit richer offline and latency-sensitive features, and standards around conversational context and action APIs may emerge to improve interoperability between competing solutions. The concept of an “AIOS” or AI operating layer is gaining traction — a system that manages models, policies, and action interfaces consistently across apps and devices — but it will require cross-industry standards to avoid vendor lock-in.

Practical advice for teams starting today

- Map three high-value workflows with clear success metrics and automate them first.

- Choose a robust orchestration layer (Temporal or similar) if tasks are long-running and require retries.

- Design APIs with idempotency and tracing in mind; observability is part of your product design.

- Apply least-privilege and human-in-the-loop for risky actions. Treat model outputs as suggestions, not mandates.

- Plan for hybrid inference: mix cloud models, smaller local models, and edge execution when latency or privacy matters — an approach that benefits from OS-level AI computation integration.

Key Takeaways

An AI virtual office space can transform collaboration by embedding automation and intelligence directly into daily workflows. Building one requires careful architectural planning: a composable orchestration layer, reliable model serving, secure connectors, and observability across the full stack. Product teams should measure ROI through business metrics, and engineers must balance latency, cost, and governance. As platforms evolve, expect more standardized integrations and deeper device-level AI capabilities that make intelligent digital ecosystems both practical and private by design.