Introduction — why orchestration matters now

Teams building production AI systems face a common gap: models and data pipelines may work independently, but coordinating them reliably across environments, vendors, and changing requirements is hard. AI workflow orchestration is the discipline and set of tools for reliably connecting models, data, services, humans, and external systems into predictable, observable business processes. This article first explains the idea simply for newcomers, then goes deeper into architecture, integrations, operational signals, and product trade-offs for technical and product leaders.

Simple explanation and a short scenario

Imagine an online bank processing loan applications. A request enters the system, a scoring model checks credit, a fraud model runs, documents are OCR’d and verified, and finally a human underwriter reviews edge cases before approval. Without orchestration you might have ad-hoc scripts, cron jobs, or point-to-point APIs. With proper orchestration you have a system that triggers the right models in order, handles retries and timeouts, logs decisions for audit, and scales parts of the pipeline independently.

For non-technical readers: think of orchestration like a conductor for a symphony — each musician (service) has a part, and the conductor ensures timing, coordination, and graceful recovery if someone misses a cue.

What is AI workflow orchestration?

At its core, AI workflow orchestration is the practice of defining, scheduling, running, and observing multi-step processes that include model inference, data transformations, conditional logic, external API calls, and human steps. It covers both batch and real-time scenarios and spans tools for authoring workflows, executing tasks, and governing behavior.

Common orchestration features include dependency graphs, retry policies, state management, event triggers, versioning, and integration adapters for model serving and data systems.
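To make "dependency graph" concrete, here is a minimal sketch using Python's standard `graphlib` module. It models the loan scenario above as a DAG and groups steps into stages, where steps in the same stage have no dependencies on each other and could run in parallel. The step names are hypothetical, and a real orchestrator would add retries, state, and scheduling on top of this ordering logic.

```python
from graphlib import TopologicalSorter

# Hypothetical loan-application workflow: each step maps to the steps it depends on.
LOAN_WORKFLOW = {
    "ocr_documents": {"receive_application"},
    "credit_score": {"receive_application"},
    "fraud_check": {"receive_application"},
    "verify_documents": {"ocr_documents"},
    "underwriter_review": {"credit_score", "fraud_check", "verify_documents"},
}

def execution_stages(graph):
    """Group steps into stages; steps within one stage can run concurrently."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for deterministic output
        stages.append(ready)
        sorter.done(*ready)  # mark the stage finished to unlock its dependents
    return stages

for index, stage in enumerate(execution_stages(LOAN_WORKFLOW)):
    print(index, stage)
```

The three checks (credit, fraud, OCR) land in one stage because they depend only on the incoming application, while the underwriter review waits for all of them.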

Real-world domains and use cases

- Customer support automation: route tickets, run summarization and intent detection models, escalate to an agent when confidence is low.

- Personalization and content pipelines: collect signals, generate candidate recommendations, re-rank using contextual models, and deploy updates via feature flags.

- Document-heavy workflows: ingest, OCR, extract entities, validate with knowledge bases, and hand off to specialists for low-confidence cases.

- Scientific discovery: chain simulations and models, include human-in-the-loop validation, and manage long-running jobs, including experimental workloads that combine AI with quantum computing.

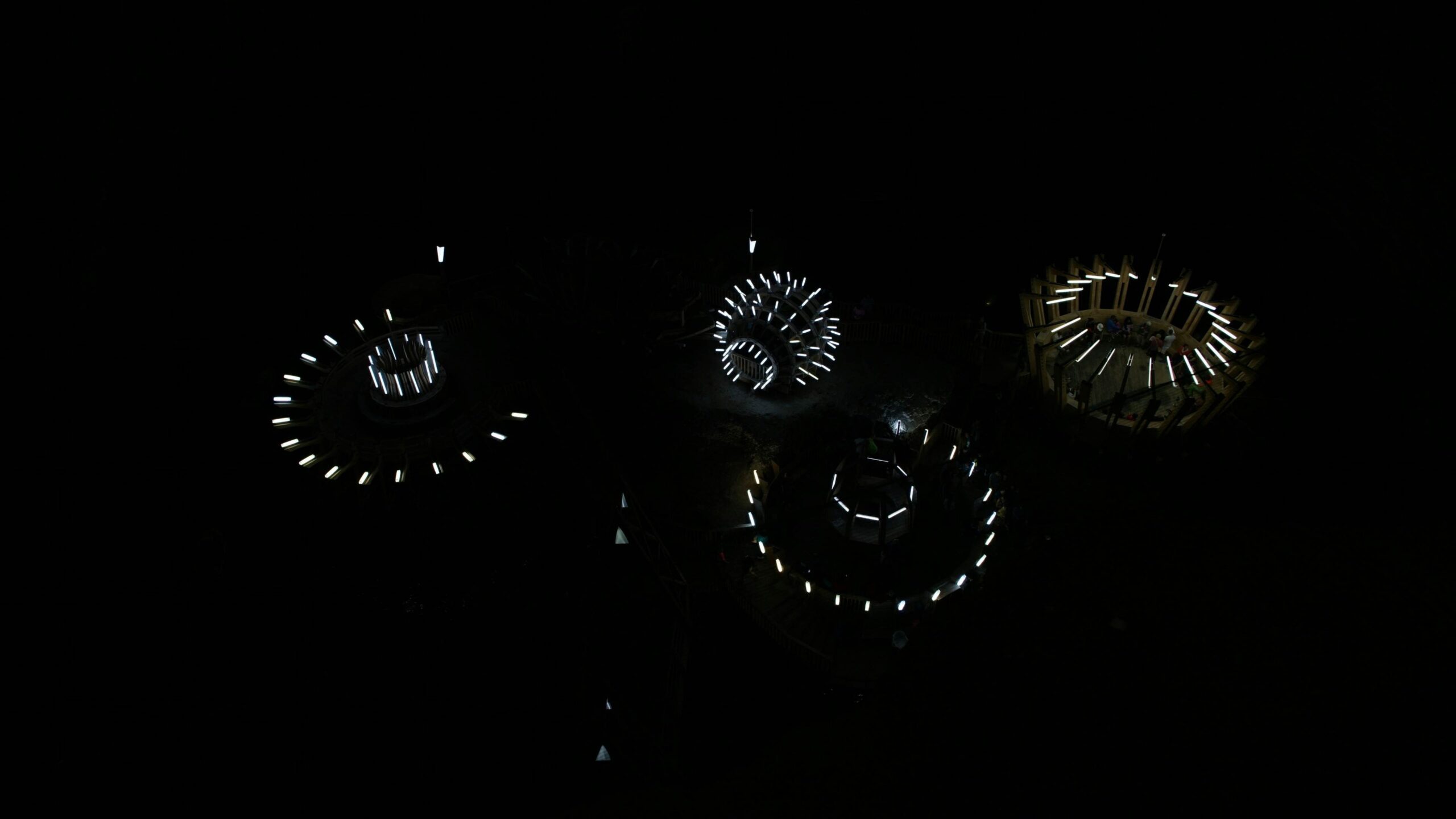

- Multimodal product flows: coordinate image, audio, and text models for multimodal AI applications such as automated video tagging or cross-modal search.
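Several of these use cases hinge on the same decision: let automation proceed, or hand off to a person when the model is unsure. A minimal sketch of that routing step for the customer-support case follows. The `IntentResult` type, the queue names, and the 0.75 threshold are all hypothetical; in practice the threshold would be tuned per intent against labelled outcomes.

```python
from dataclasses import dataclass

# Hypothetical default; real systems tune this per intent on labelled data.
ESCALATION_THRESHOLD = 0.75

@dataclass
class IntentResult:
    intent: str
    confidence: float  # model score, expected in [0, 1]

def route_ticket(result: IntentResult, threshold: float = ESCALATION_THRESHOLD) -> str:
    """Return the (hypothetical) queue a ticket should be sent to."""
    if not 0.0 <= result.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if result.confidence < threshold:
        return "human_agent"  # low confidence: escalate to a person
    return f"auto:{result.intent}"  # confident enough to automate

print(route_ticket(IntentResult("refund", 0.92)))
print(route_ticket(IntentResult("refund", 0.40)))
```

Rejecting out-of-range scores keeps a misbehaving model from silently bypassing the human path.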

Architectural patterns for engineers

When designing orchestration, separate concerns into three planes: the control plane (workflow definitions, scheduling, policy), the data plane (payloads, model inputs/outputs, artifacts), and the execution plane (workers, model servers, external adapters). This separation helps reason about failures, scaling, and security.

Centralized vs decentralized orchestration

Centralized platforms (Airflow, Dagster, Apache NiFi) give a single place to author and observe workflows. They simplify governance but can become a single point of failure and may struggle with ultra-low-latency paths. Decentralized approaches (event-driven choreography over Kafka or Pulsar, often alongside a service mesh) favor resilience and locality but make global visibility and consistency harder to achieve.

Event-driven vs synchronous orchestration

Synchronous pipelines are easier to reason about end-to-end and are common for short-lived APIs. Event-driven orchestration is better for high-throughput, loosely coupled systems and long-running processes. Hybrid models are typical: user-facing flows make synchronous calls to the orchestration control plane, which then triggers asynchronous background steps for heavy batch work.
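The hybrid pattern can be sketched in-process with `asyncio`: a request handler does cheap validation the caller waits for, then enqueues heavy work for a background worker and returns immediately. This is an illustration only; the in-memory `asyncio.Queue` stands in for a durable broker such as Kafka or Pulsar, and the job names are hypothetical.

```python
import asyncio

async def background_worker(queue: asyncio.Queue, completed: list) -> None:
    """Consume heavy jobs asynchronously, outside the request path."""
    while True:
        job = await queue.get()
        await asyncio.sleep(0.01)  # stand-in for a slow batch step (e.g. enrichment)
        completed.append(job)
        queue.task_done()

async def handle_request(app_id: str, queue: asyncio.Queue) -> dict:
    """Synchronous part from the caller's view: validate, enqueue, respond fast."""
    accepted = bool(app_id)  # hypothetical cheap validation
    if accepted:
        await queue.put(f"batch-enrich:{app_id}")  # hand off the heavy work
    return {"app_id": app_id, "accepted": accepted}

async def main():
    queue: asyncio.Queue = asyncio.Queue()
    completed: list = []
    worker = asyncio.create_task(background_worker(queue, completed))
    responses = [await handle_request(a, queue) for a in ["a1", "a2", ""]]
    await queue.join()  # demo only: wait until background jobs finish
    worker.cancel()
    try:
        await worker
    except asyncio.CancelledError:
        pass
    return responses, completed

responses, completed = asyncio.run(main())
print(responses)
print(completed)
```

Callers get a response as soon as the job is queued; throughput of the background path can then be scaled independently of request latency.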

Stateful workflows and checkpoints

Long-running AI processes (e.g., multi-step human review or iterative model training) require durable state, retries with idempotency, and checkpoints to resume processing. Platforms like Temporal and Argo Workflows provide built-in durable execution semantics; others rely on external stores (Redis, databases, object storage) and careful idempotent operations.
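For teams using the "external store" route, here is a minimal sketch of checkpoint-and-resume, assuming a single-process runner and a local JSON file as the store (both hypothetical simplifications of a database or object store). Each completed step's output is saved atomically via write-then-rename, and a resumed run skips steps that are already recorded.

```python
import json
import os
import tempfile

class FileCheckpointStore:
    """Keeps completed-step outputs in one JSON file (hypothetical, single-process)."""

    def __init__(self, path):
        self.path = path

    def load(self):
        if not os.path.exists(self.path):
            return {}
        with open(self.path) as f:
            return json.load(f)

    def save(self, state):
        # Write a temp file in the same directory, then rename it over the old one,
        # so a crash never leaves a half-written checkpoint (atomic on POSIX).
        directory = os.path.dirname(os.path.abspath(self.path))
        fd, tmp_path = tempfile.mkstemp(dir=directory)
        with os.fdopen(fd, "w") as f:
            json.dump(state, f)
        os.replace(tmp_path, self.path)

def run_workflow(steps, store):
    """Run (name, fn) steps in order, skipping steps already in the checkpoint."""
    state = store.load()
    for name, fn in steps:
        if name in state:
            continue  # finished in an earlier attempt
        # A crash between fn() and save() re-runs this step on resume,
        # so each fn must itself be idempotent (e.g. keyed by workflow ID).
        state[name] = fn(state)
        store.save(state)
    return state

# Demo: the "notify" step fails once, then the workflow is resumed.
calls = {"score": 0, "notify": 0}

def score(state):
    calls["score"] += 1
    return 0.82

def notify(state):
    calls["notify"] += 1
    if calls["notify"] == 1:
        raise RuntimeError("transient failure")
    return "sent"

with tempfile.TemporaryDirectory() as d:
    store = FileCheckpointStore(os.path.join(d, "loan-123.json"))
    steps = [("score", score), ("notify", notify)]
    try:
        run_workflow(steps, store)
    except RuntimeError:
        pass  # first attempt dies after "score" was checkpointed
    final_state = run_workflow(steps, store)

print(final_state, calls)
```

The scoring step runs once even though the workflow ran twice; durable-execution engines such as Temporal provide this replay-safe behavior without a hand-written store.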

Integration and API design choices

Integrations are the friction points in orchestration. Good patterns include:

- Stable, versioned task contracts for model inputs and outputs to avoid downstream breakage.

- Lightweight adapters for model servers (Triton, TorchServe, BentoML, Seldon) that normalize inference calls and metrics.

- Event schemas (Avro/Protobuf/JSON Schema) and tracing headers to link events across systems for observability.

- Clear SLAs for deterministic vs non-deterministic tasks; non-deterministic steps (e.g., generative models) should include confidence and provenance metadata.
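A versioned task contract with provenance metadata might look like the sketch below, using a frozen dataclass and an explicit schema-version check. The field names and the `summarize.v2` identifier are hypothetical; a production system would more likely express the same contract in Avro, Protobuf, or JSON Schema so that every service shares one definition.

```python
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

SCHEMA_VERSION = "summarize.v2"  # hypothetical contract identifier

@dataclass(frozen=True)
class SummarizeOutput:
    schema_version: str
    summary: str
    confidence: float   # required for non-deterministic (generative) steps
    model_name: str     # provenance: which model produced the output
    model_version: str  # provenance: exact version, for audit and rollback
    produced_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

def parse_output(payload: dict) -> SummarizeOutput:
    """Validate a task payload against the contract; rejects missing or unknown fields."""
    version = payload.get("schema_version")
    if version != SCHEMA_VERSION:
        raise ValueError(f"unsupported schema_version: {version!r}")
    out = SummarizeOutput(**payload)  # TypeError on missing or extra keys
    if not 0.0 <= out.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    return out

example = SummarizeOutput(SCHEMA_VERSION, "Customer requests a refund.", 0.9, "summarizer", "1.3.0")
roundtrip = parse_output(asdict(example))
print(roundtrip == example)
```

Failing fast on an unexpected version lets a downstream step refuse a breaking change instead of misreading it.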

Deployment, scaling, and cost trade-offs

Decide early whether to use managed services (AWS Step Functions, Google Workflows, Azure Durable Functions) or self-hosted platforms (Airflow, Argo, Temporal). Managed services remove ops overhead but can be more expensive and less flexible. Self-hosted solutions offer more control and can lower cost at scale, but they demand SRE investment.

Key metrics and signals:

- Latency percentiles for task execution (p50, p95, p99). Low-latency inference usually requires co-locating model servers with the orchestrator's workers or using a fast RPC protocol such as gRPC.

- Throughput (tasks/sec) and compute utilization. Batch jobs often favor autoscaling GPUs and spot instances; real-time paths need stable CPU/GPU capacity and warm pools.

- Cost per inference and cost per end-to-end transaction. Track both to optimize for business outcomes.
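The arithmetic behind these signals is simple, and a small sketch helps pin down definitions. Below, percentiles use the nearest-rank method (one of several common definitions; monitoring tools may interpolate instead), and cost is reported both per inference and per end-to-end transaction. All numbers are hypothetical.

```python
import math

def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample >= p% of all samples (0 < p <= 100)."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]

def cost_per_unit(total_cost, units):
    """Spend divided by units of work (inferences or completed transactions)."""
    if units <= 0:
        raise ValueError("units must be positive")
    return total_cost / units

# Hypothetical task latencies in milliseconds: 1, 2, ..., 100.
latencies_ms = list(range(1, 101))
print({p: percentile(latencies_ms, p) for p in (50, 95, 99)})

# Hypothetical daily spend: $12 on 1,200 inferences; $20 total across all steps
# (inference + OCR + review tooling) for 400 completed loan applications.
print(cost_per_unit(12.0, 1200))  # cost per inference
print(cost_per_unit(20.0, 400))   # cost per end-to-end transaction
```

Here each transaction costs five times as much as one inference, because it triggers several inferences plus non-model steps; optimizing only the per-inference figure can miss that.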

Observability, testing, and failure modes

Observability combines traces, metrics, and logs. Correlate workflow IDs across traces and collect service-level metrics like task duration, retry counts, queue lengths, and business outcomes. Use OpenTelemetry to propagate context.
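The core idea of correlation, attaching one workflow ID to every signal emitted while that workflow runs, can be illustrated with the standard library alone. The sketch below uses `contextvars` plus a `logging.Filter`; it is a stand-in for OpenTelemetry's context propagation, not its API, and the logger name and step names are hypothetical.

```python
import contextvars
import io
import logging
import uuid

# Holds the ID of the workflow currently executing in this context.
workflow_id_var = contextvars.ContextVar("workflow_id", default="-")

class WorkflowIdFilter(logging.Filter):
    """Stamp every log record with the current workflow ID."""
    def filter(self, record):
        record.workflow_id = workflow_id_var.get()
        return True

def make_logger(stream):
    logger = logging.getLogger("orchestrator")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.addFilter(WorkflowIdFilter())
    handler.setFormatter(logging.Formatter("%(workflow_id)s %(message)s"))
    logger.addHandler(handler)
    return logger

def run_step(logger, name):
    # Steps never pass the ID explicitly; the context carries it.
    logger.info("step %s finished", name)

def start_workflow(logger, steps):
    wf_id = str(uuid.uuid4())
    token = workflow_id_var.set(wf_id)
    try:
        for step in steps:
            run_step(logger, step)
    finally:
        workflow_id_var.reset(token)  # don't leak the ID into unrelated work
    return wf_id

buffer = io.StringIO()
log = make_logger(buffer)
wf = start_workflow(log, ["ocr", "score"])
lines = buffer.getvalue().splitlines()
print(lines)
```

Because `contextvars` values follow `asyncio` tasks, the same approach works for concurrent workflows in one process; crossing process or network boundaries is where a tracing standard such as OpenTelemetry takes over.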

Common failure modes:

- Silent data drift: models degrade because feature distributions shifted. Use model monitoring and data quality checks to detect drifts early.

- Retry storms: improper retry backoff causes cascading load. Implement exponential backoff and circuit breakers.

- State corruption: non-idempotent tasks or partial writes break workflows. Prefer idempotency keys and atomic checkpoint writes.
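The retry-storm mitigations above can be sketched together: exponential backoff with "full jitter" (a random delay between zero and an exponentially growing cap) and a simple consecutive-failure circuit breaker. Thresholds, delays, and names are hypothetical, and `sleep`, `rng`, and `clock` are injectable only so the behavior is testable.

```python
import random
import time

class CircuitOpenError(RuntimeError):
    """Raised instead of calling a dependency that is currently failing."""

class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures; allows a trial call after `reset_after` s."""

    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, fn):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; skipping call")
            self.opened_at = None  # half-open: let one trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1  # a failed trial call re-opens immediately
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        return result

def retry_with_backoff(fn, max_attempts=5, base=0.1, cap=5.0,
                       sleep=time.sleep, rng=random.random):
    """Retry fn, sleeping U(0, min(cap, base * 2**attempt)) seconds between attempts."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise  # retrying against an open circuit just adds load
        except Exception:
            if attempt == max_attempts - 1:
                raise
            sleep(rng() * min(cap, base * 2 ** attempt))

breaker = CircuitBreaker()
print(retry_with_backoff(lambda: breaker.call(lambda: "scored")))
```

Jitter spreads retries from many clients over time, and the breaker stops retries entirely while a dependency is down, which together prevent the cascading load described above.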

Security and governance

Apply least privilege to connectors and model stores. Use a policy engine such as Open Policy Agent for runtime governance, and maintain model cards and lineage metadata with tools like MLflow (model versions and run lineage) or Feast (feature definitions). For regulated industries, keep an immutable audit trail of inputs, model versions, and decisions for explainability and compliance.
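One common way to make an audit trail tamper-evident is a hash chain: each entry stores the SHA-256 hash of its content plus the previous entry's hash, so editing any past record breaks verification from that point on. The sketch below is in-memory and the record fields are hypothetical; true immutability would also require write-once storage, since a hash chain only detects tampering rather than preventing it.

```python
import hashlib
import json

class AuditLog:
    """Append-only log where each entry embeds the hash of the previous entry."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev, record):
        body = json.dumps({"prev": prev, "record": record}, sort_keys=True)
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = json.loads(json.dumps(record))  # deep copy; must be JSON-serializable
        digest = self._digest(prev, record)
        self.entries.append({"prev": prev, "record": record, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every hash; False if any entry or link was altered."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or self._digest(prev, entry["record"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

log = AuditLog()
log.append({"workflow_id": "loan-123", "step": "credit_score",
            "model_version": "2.4.1", "score": 0.71})
log.append({"workflow_id": "loan-123", "step": "underwriter_review",
            "decision": "decline", "reviewer": "u-42"})
intact = log.verify()
log.entries[1]["record"]["decision"] = "approve"  # simulate tampering
tampered = log.verify()
print(intact, tampered)
```

Publishing the latest hash periodically to a separate system makes it much harder to rewrite the whole chain unnoticed.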

Product and market considerations for decision-makers

Evaluate orchestration platforms based on integration breadth, governance features, operational cost, and vendor lock-in risk. Managed platforms accelerate time-to-value; self-hosted stacks are better when you need custom execution semantics or have strict data residency requirements.

ROI measures to track:

- Reduction in the time it takes to deploy model updates (deployment lead time).

- Decrease in manual handoffs and operational overhead.

- Business KPIs improved by automation (e.g., approvals per day, customer satisfaction scores).

Vendor and open-source landscape

Notable open-source and commercial players shape the ecosystem: Apache Airflow and Dagster are popular for data-centric DAGs; Argo Workflows integrates tightly with Kubernetes; Temporal provides durable workflow primitives; Kubeflow focuses on ML pipelines; Ray offers distributed task execution. For model serving and inference, Seldon, KServe, Triton, and BentoML are common choices. Managed cloud options include AWS Step Functions, Google Cloud Workflows, and Azure Logic Apps.

Choose tools based on whether you need strong orchestration primitives, native support for model lifecycle, or deep Kubernetes integration.

Case study snapshots

Retail personalization platform

A mid-size retailer used an orchestration layer to coordinate data ingestion, nightly model retraining, real-time scoring, and human review for ambiguous recommendations. The team moved from scripted cron jobs to Dagster with managed model serving, lowering failed runs by 70% and cutting time-to-deploy new models from weeks to days.

Financial underwriting

A bank implemented Temporal for long-running, auditable loan workflows that combined rule-based checks, multiple model inferences, and manual underwriter approvals. The durable execution model reduced error-prone resubmissions and improved auditability, supporting regulatory compliance.

Operational pitfalls and how to avoid them

- Avoid tightly coupling orchestration to a single model implementation; keep model interfaces stable and versioned.

- Don’t mix high-throughput inference with heavy batch training on the same cluster without resource isolation.

- Plan for human steps: include explicit SLAs and reminders for human-in-the-loop tasks to prevent stuck workflows.
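A human-step SLA can be reduced to a small decision function that the orchestrator evaluates on a timer: wait, send a reminder, or escalate. The sketch below assumes hypothetical four-hour reminder and 24-hour escalation thresholds; in a durable-execution engine the check would typically be driven by a durable timer rather than a polling loop.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

@dataclass
class HumanTask:
    task_id: str
    created_at: datetime
    remind_after: timedelta = timedelta(hours=4)     # hypothetical SLA
    escalate_after: timedelta = timedelta(hours=24)  # hypothetical SLA
    completed: bool = False

def next_action(task: HumanTask, now: datetime) -> str:
    """Decide what the orchestrator should do with a pending human step."""
    if task.completed:
        return "none"
    age = now - task.created_at
    if age >= task.escalate_after:
        return "escalate"  # e.g. reassign to a supervisor queue
    if age >= task.remind_after:
        return "remind"    # e.g. notify the assigned underwriter again
    return "wait"

start = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
review = HumanTask("review-loan-123", start)
for hours in (1, 5, 25):
    print(hours, next_action(review, start + timedelta(hours=hours)))
```

Making escalation an explicit outcome means a forgotten review surfaces as an event instead of a workflow that silently never finishes.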

Future trends and emergent areas

Expect orchestration platforms to adopt richer agent-style patterns and built-in support for multimodal workloads. As organizations experiment with specialized hardware and hybrid research such as AI-powered quantum computing experiments, orchestration systems will need to schedule heterogeneous resources and record provenance for complex scientific workflows. Multimodal AI applications will push orchestration to become more flexible about task payloads, handling images, audio, and structured data together inside a single workflow.

Choosing an approach: practical checklist

Before selecting a platform, answer these questions:

- Do you need sub-second response times or is eventual consistency acceptable?

- Are your workflows short-lived (minutes) or long-running (hours to days)?

- What governance, audit, and compliance needs drive platform choice?

- Do you prefer managed services to reduce ops burden or in-house control for customization?

Key Takeaways

AI workflow orchestration is the backbone that turns models and datasets into reliable business processes. For beginners, think of it as the conductor that coordinates models, humans, and data. For engineers, it demands careful separation of control, data, and execution planes, durable state management, and robust observability. For product leaders, it’s a lever to improve ROI by reducing manual work, improving model deployment velocity, and ensuring compliance. Evaluate platforms by latency needs, scale, governance, and operational maturity, and plan for future needs such as heterogeneous hardware scheduling and multimodal workloads.