Introduction: an old idea for new automation

Imagine a workshop with hundreds of tools and thousands of possible tool-chains. You want the fastest, cheapest, and most reliable way to finish a job without testing every combination. Genetic algorithms do exactly that: they evolve candidate solutions by favoring the fittest, recombining them, and introducing variation. When applied to automation systems, this evolutionary approach can find robust, unexpected solutions to scheduling, routing, orchestration, and policy tuning problems.

This article focuses on Genetic algorithms in AI as a practical theme: how they fit into modern automation platforms, where they shine, how to design safe production systems around them, and what organizations should measure to decide whether to adopt them.

Why genetic algorithms matter to automation

Genetic algorithms are derivative-free optimizers that work well when the search space is large, discontinuous, or noisy. They are complementary to gradient-based learning and can be hybridized with neural approaches. For example, pairing genetic search with sequence models such as Long Short-Term Memory (LSTM) models lets you both generate candidate action sequences and evaluate long-horizon behaviors.

Real-world scenarios where genetic approaches add value:

- Workflow optimization: evolve schedules and task assignments to minimize response time under changing load.

- Robust configuration tuning: find hyper-parameters for complex pipelines where metrics are noisy and non-differentiable.

- Adaptive cybersecurity policies: evolve rule-sets or detection thresholds to reduce false positives while preserving coverage in AI-driven cybersecurity systems.

- Agent orchestration: evolve coordination strategies for multi-agent systems to improve throughput or resilience.

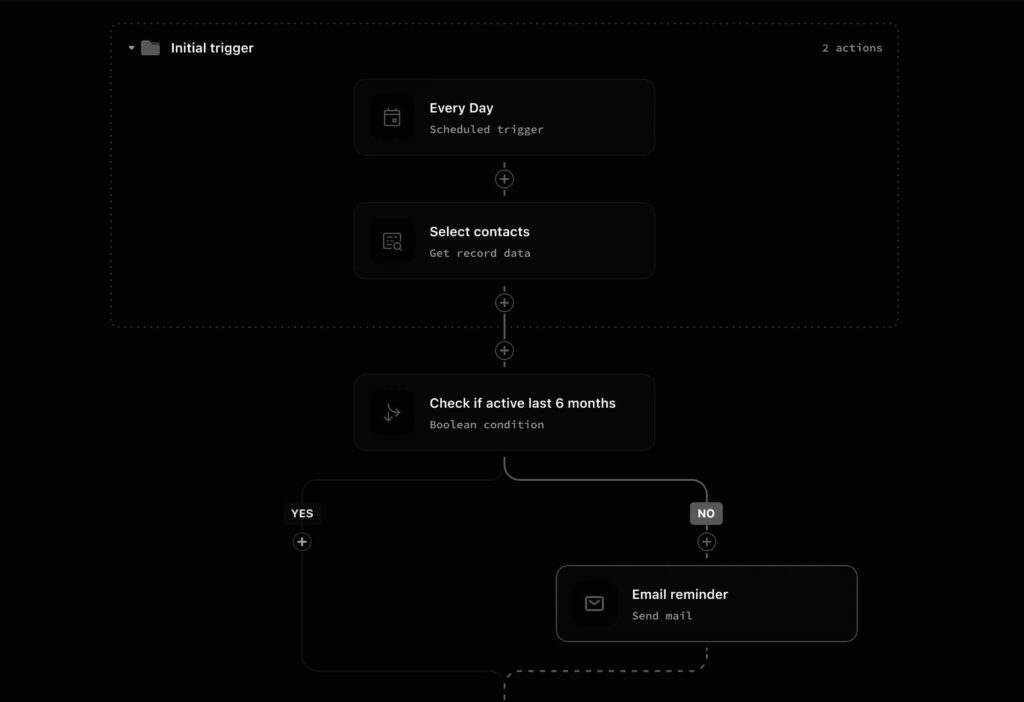

System architecture patterns

Deploying genetic algorithms inside an automation stack usually follows a few recurring patterns. Choose a pattern by workload characteristics: how expensive is each fitness evaluation, how often does the environment change, and whether actions must run synchronously.

Centralized evolutionary engine

A single evolutionary orchestrator holds populations, scores, and mutation logic. Workers evaluate individuals and return fitness scores. This pattern is simple and works when evaluations are moderately expensive and network latency is predictable.

Distributed and island models

For expensive evaluations or high-throughput needs, use an island model: multiple sub-populations evolve independently, occasionally exchanging top individuals. This reduces synchronization overhead and improves exploration. Kubernetes jobs, Ray actors, or managed batch clusters are common ways to run islands in parallel.

Hybrid ML-assisted fitness

Integrate a learned surrogate model to approximate fitness. For sequence-heavy decisions, a Long Short-Term Memory (LSTM) model can predict long-term rewards, letting the evolutionary loop evaluate many candidates cheaply before committing to full simulation. Surrogates reduce cloud costs but introduce bias and must be validated frequently.

Synchronous vs. event-driven evaluation

Synchronous evaluation means a generation waits until all individuals are scored — valuable for strict selection schemes, but costly when a few evaluations are slow. Event-driven or asynchronous evolution accepts scores as they arrive, keeping resources utilized. Asynchronous designs require careful bookkeeping to avoid selection biases.

Key integration and deployment choices

Choosing managed versus self-hosted influences operational burden and control:

- Managed platforms (cloud vendor orchestration, managed ML platforms) simplify provisioning, autoscaling, and compliance, but may limit flexibility in custom crossover or mutation operators.

- Self-hosted stacks (Kubernetes, Ray, Argo Workflows, custom serverless) give full control over topology and scheduling but require expertise in scaling, fault tolerance, and monitoring.

Deploying genetic algorithms in safety-sensitive contexts demands stronger governance. Keep versioned records of population seeds, fitness function definitions, and model checkpoints to enable auditing. For regulated environments, maintain a deployed policy registry and an approval workflow for candidate strategies.

Tools and platforms to consider

There are mature open-source libraries and orchestration layers that accelerate building production systems. For evolutionary algorithms, projects like DEAP, Jenetics, and ECJ provide algorithm building blocks. For large-scale experimentation and tuning, Ray Tune, Optuna, and Microsoft NNI support evolutionary search as search strategies. For orchestration and workflow automation, consider Airflow, Argo, Prefect, or managed alternatives like AWS Step Functions.

When your fitness function depends on models, integrate with model serving and MLOps platforms — TensorFlow Serving, TorchServe, or Vertex AI — and keep the entire pipeline reproducible with data versioning tools and CI/CD for models. Standards such as model cards and data sheets aid explainability and compliance.

Implementation playbook (step-by-step in prose)

This is a practical roadmap to deploy genetic algorithms in an automation context.

- Define the objective and success metrics. Decide whether the goal is minimizing cost, maximizing throughput, improving reliability, or balancing multiple KPIs. Translate these to a scalar fitness or a multi-objective function with clear trade-offs.

- Design genotype and phenotype. Choose a compact representation (bitstring, vector of parameters, sequence of actions) and ensure operators (crossover, mutation) produce valid, safe candidates.

- Build the fitness pipeline. If evaluations are expensive, instrument a surrogate model or stepwise evaluation (quick heuristics first, full simulation later). Consider using Long Short-Term Memory (LSTM) models for sequence prediction inside the fitness function when long-horizon behavior matters.

- Select population size and evolutionary parameters. Start conservative and use offline tuning; track convergence speed and diversity. Implement mechanisms to prevent premature convergence: niching, increased mutation, or injecting random individuals.

- Parallelize safely. Use a queue-driven worker pool or island model. Ensure idempotency and checkpointing for long-running evaluations.

- Validate and sandbox before production. Run A/B tests, and maintain rollback strategies. In AI-driven cybersecurity, this means deploying evolved rules to shadow mode and measuring false positive/negative tradeoffs before full enforcement.

- Monitor continuously. Log fitness trajectories, compute diversity metrics, resource usage, and stopped evaluations. Configure alerts for anomalous drift, suspiciously good fitness (data leakage), or runaway resource consumption.

Operational signals, costs, and common failure modes

Track these signals to judge system health and ROI:

- Latency and throughput: average time per fitness evaluation, and evaluations per minute. These determine provisioning and cost projections.

- Convergence curves: how fitness improves per generation, and whether improvement stalls (sign of local optima).

- Resource costs: CPU/GPU hours per generation and per deployment, and cloud storage for checkpoints.

- Diversity metrics: genotype entropy or population variance to detect premature convergence.

- Operational errors: failed evaluations, inconsistent reproducibility, and timeouts that bias selection.

Typical failure modes include noisy or misleading fitness signals, overfitting to simulation tests, and insufficient guardrails that produce unsafe candidates. To mitigate, add conservative constraints, incorporate human-in-the-loop checks for high-impact decisions, and use ensemble validations.

Security, governance, and AI policy implications

Governance is essential when automation decisions affect customers or critical infrastructure. Maintain audit trails for all evolved candidates, record fitness definitions, and require approvals for deployment. Under regulatory regimes such as GDPR and the EU AI Act, automate-only decisions that materially affect individuals will need transparency and contestability.

Security considerations are twofold: genetic algorithms can harden systems (e.g., evolving adaptive defenses) but can also be used by attackers to discover evasion strategies. In AI-driven cybersecurity, this arms race means you should adversarially test your evolved policies and monitor for unexpected exploitation pathways.

Case studies and ROI signals

Example 1: A logistics operator used genetic algorithms to evolve route batches and time windows. By combining an evolutionary scheduler with simulation-based fitness, they reduced fuel costs by 6–9% and improved on-time deliveries. The key ROI signal was cost-per-delivery and reduced idling time.

Example 2: A security team paired genetic search with an LSTM-based sequence scorer to evolve detection rules. Running evolved configurations in shadow mode reduced false positives by 40% while preserving detection recall. Operational gain came from analyst time saved and fewer escalations.

These case studies show where genetic solutions outperform manual tuning: when objectives are complex, topologies change frequently, and simulations capture meaningful performance signals.

Vendor choices and trade-offs

Pick a vendor or stack based on these dimensions:

- Flexibility vs convenience: if you need custom operators or privacy-preserving environments, self-host. If speed-to-market and compliance are priorities, managed platforms are attractive.

- Cost predictability: managed services provide billing transparency, while self-hosted solutions can be optimized for spot pricing but require ops overhead.

- Integration with existing MLOps: choose platforms that support your model-serving stack and CI/CD pipelines to avoid brittle glue code.

Future outlook

Expect tighter hybrids: evolutionary search will increasingly leverage neural surrogates and sequence models, including Long Short-Term Memory (LSTM) models and transformers, to reduce evaluation costs. Agent frameworks and orchestration layers will incorporate evolutionary modules for policy discovery, and open-source tooling will continue to add distributed primitives matched to cloud-native platforms.

Regulatory focus on explainability and auditability will shape adoption. Organizations that build transparent, auditable evolutionary pipelines will win in regulated industries.

Key Takeaways

Genetic algorithms in AI are a practical, production-grade approach for many automation challenges when the search space is complex and gradient methods are unsuitable. They pair well with sequence models for long-horizon evaluation and can be scaled using island models, surrogate models, and cloud-native orchestration. The right choice between managed and self-hosted systems depends on control, cost, and compliance needs. Measure latency, throughput, diversity, and cost to evaluate ROI, and design strong governance for safety and regulatory compliance — particularly when algorithms affect security or individual outcomes. Finally, treat evolutionary systems as live systems: monitor drift, validate frequently, and include human oversight for high-risk deployments.