Businesses are moving from dashboards to continuous automated insight. An AI operating system that orchestrates models, data, and actions — an AIOS for business intelligence — turns raw telemetry into decisions that execute across systems. This article walks beginners, developers, and product leaders through what a practical AIOS looks like, how to build and operate one, and the trade-offs you’ll encounter.

Why an AIOS for business intelligence matters

Imagine a regional retail chain. Instead of analysts running nightly reports and emailing managers, the system detects a surge in demand for a SKU, predicts stockouts, recommends replenishment orders, and triggers supply-chain workflows automatically. That continuous loop — ingest data, infer, recommend, execute, and monitor — is the operational promise of an AIOS for business intelligence.

For readers new to the topic: think of an AIOS as the operating system for decision automation. It glues together data pipelines, model serving, business rules, and downstream actions in a reliable, observable way. The goal is not to replace humans but to scale routine, high-volume decisions and highlight exceptions that need human attention.

Core architecture — the components of a practical AIOS

A usable architecture blends orchestration, model serving, data storage, and integrations. Typical components include:

- Event and data buses (Kafka, Pulsar) for streaming telemetry and change-data-capture.

- Data lake and feature store for history and engineered features (Delta Lake, Snowflake, Feast).

- Vector stores for retrieval-augmented workflows (Pinecone, Milvus, Weaviate) that support narrative-style insights and similarity search.

- Model serving and inference platforms (Triton, BentoML, TorchServe) that handle batch and real-time endpoints.

- Orchestration and workflow engines (Airflow, Dagster, Prefect, Kubeflow) to schedule pipelines and enforce guardrails.

- Policy and action layer that maps model outputs to automated actions (RPA tools like UiPath or event triggers that call downstream APIs).

- Observability, lineage and governance (OpenTelemetry, Prometheus, Grafana, MLflow, Great Expectations).

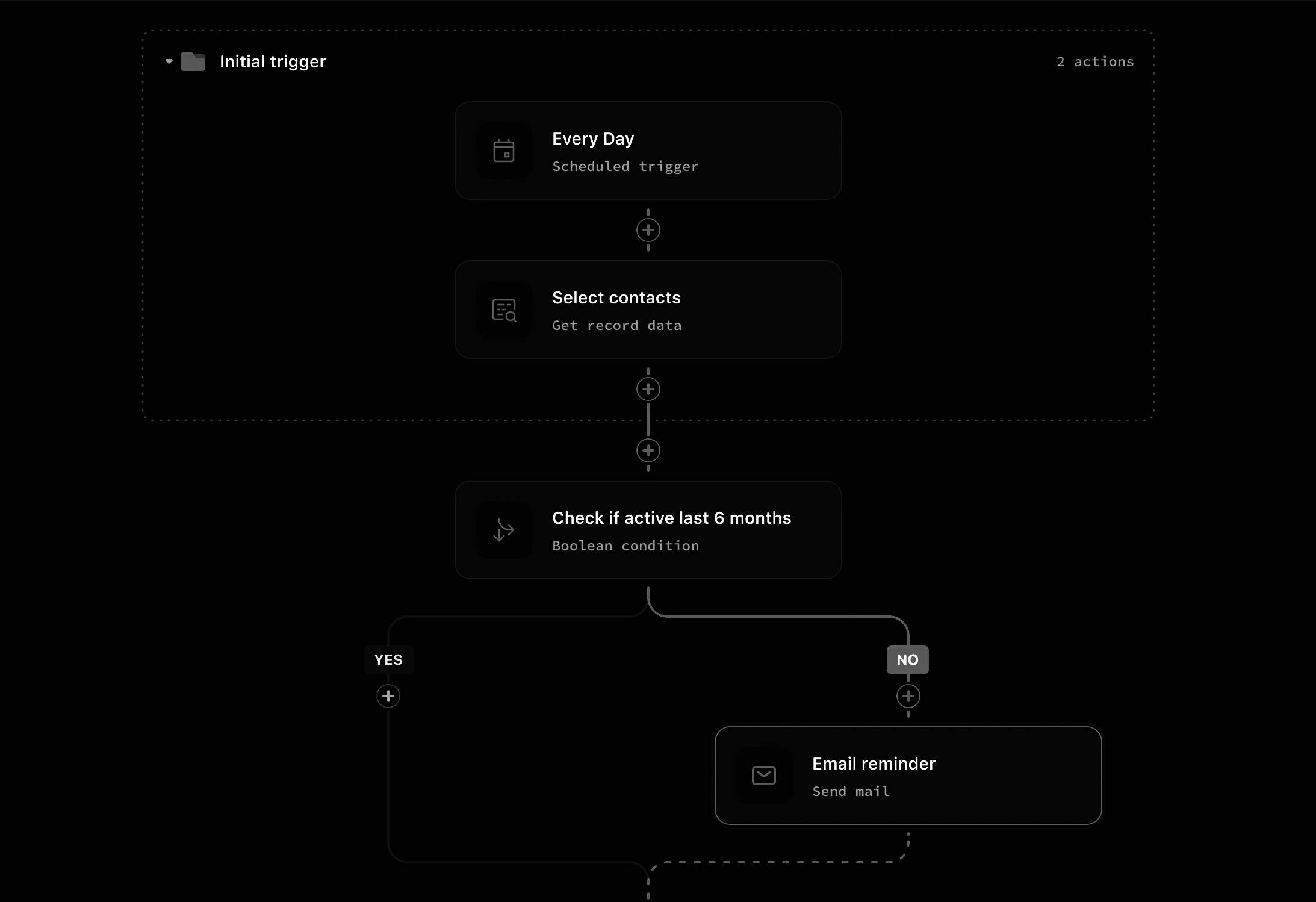

Two patterns dominate integrations: synchronous request-response for real-time decisions, and event-driven automation for high-throughput, loosely-coupled workflows. Both patterns can coexist inside a single AIOS; the orchestration layer manages which model and which action run for each pattern.
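To make the two patterns concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption: `score_fraud` stands in for a served model, `EventBus` is an in-memory placeholder rather than a Kafka or Pulsar client, and the thresholds are made up.

```python
import queue
from typing import Callable, Dict, List


def score_fraud(txn: Dict) -> float:
    """Hypothetical model stand-in: flags large transactions as risky."""
    return 0.9 if txn["amount"] > 1000 else 0.1


def handle_sync(txn: Dict) -> str:
    """Synchronous path: the caller waits for the decision."""
    return "block" if score_fraud(txn) >= 0.5 else "allow"


class EventBus:
    """In-memory stand-in for a streaming bus (assumption, not a real client)."""

    def __init__(self) -> None:
        self._events: "queue.Queue[tuple]" = queue.Queue()
        self._handlers: Dict[str, List[Callable[[Dict], None]]] = {}

    def subscribe(self, topic: str, handler: Callable[[Dict], None]) -> None:
        self._handlers.setdefault(topic, []).append(handler)

    def publish(self, topic: str, payload: Dict) -> None:
        # Publishing returns immediately; consumers run later.
        self._events.put((topic, payload))

    def drain(self) -> int:
        """Deliver all queued events and return how many handler calls ran."""
        handled = 0
        while not self._events.empty():
            topic, payload = self._events.get()
            for handler in self._handlers.get(topic, []):
                handler(payload)
                handled += 1
        return handled


reorders: List[str] = []


def on_low_stock(event: Dict) -> None:
    """Hypothetical consumer: record a reorder when stock dips below 10 units."""
    if event["on_hand"] < 10:
        reorders.append(event["sku"])


bus = EventBus()
bus.subscribe("inventory", on_low_stock)
bus.publish("inventory", {"sku": "SKU-1", "on_hand": 3})
bus.publish("inventory", {"sku": "SKU-2", "on_hand": 50})
print(handle_sync({"amount": 5000}), bus.drain(), reorders)
```

The synchronous handler returns a decision to the caller directly, while the publisher on the event-driven path never learns what the consumer did. That decoupling is what makes the second pattern suit high-throughput flows.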

How AI knowledge graphs fit in

AI knowledge graphs provide contextual grounding for BI workflows. They connect product hierarchies, customer relationships, supplier contracts, and temporal events so models can reason beyond feature vectors. In practice, knowledge graphs are used to disambiguate entities in retrieval-augmented generation, feed graph neural nets for relationship-aware predictions, and drive explainability for automated recommendations.

Don’t treat a knowledge graph as an optional add-on: in many BI scenarios it reduces false positives caused by entity confusion (for instance, conflated SKUs or wrongly merged customer records) and shortens the time to insight when exploring causal relationships across domains.
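As a rough illustration of entity disambiguation, the sketch below stores a tiny graph as subject-predicate-object triples and resolves free-text product mentions to a canonical SKU. The triples, SKU identifiers, and predicate names are invented for the example; a production system would more likely sit on a graph database.

```python
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)

# Hypothetical facts; none of these identifiers come from a real catalog.
TRIPLES: List[Triple] = [
    ("SKU-1001", "alias", "cola 12oz"),
    ("SKU-1001", "alias", "cola can 355ml"),
    ("SKU-2002", "alias", "cola 2l"),
    ("SKU-1001", "supplied_by", "supplier-a"),
    ("SKU-2002", "supplied_by", "supplier-b"),
]


def build_alias_index(triples: List[Triple]) -> Dict[str, str]:
    """Map each normalized alias to its canonical entity."""
    index: Dict[str, str] = {}
    for subject, predicate, obj in triples:
        if predicate == "alias":
            index[obj.strip().lower()] = subject
    return index


def resolve(mention: str, index: Dict[str, str]) -> Optional[str]:
    """Return the canonical entity for a mention, or None if unknown."""
    return index.get(mention.strip().lower())


def neighbors(entity: str, predicate: str, triples: List[Triple]) -> List[str]:
    """Follow one edge type out of an entity."""
    return [o for s, p, o in triples if s == entity and p == predicate]


alias_index = build_alias_index(TRIPLES)
sku = resolve("Cola Can 355ml", alias_index)
print(sku, neighbors(sku, "supplied_by", TRIPLES))
```

Resolving mentions to one canonical node before scoring or retrieval is what keeps two spellings of the same product from being treated as different items.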

Implementation playbook — step-by-step in prose

1. Define clear use cases and KPIs

Start with a narrow set of decisions and measurable outcomes: reduce stockouts by X%, automate credit decisions for low-risk customers, or cut report generation time by Y hours. Map the stakeholders who will act on automated outcomes and the signals they need.

2. Inventory data and lineage

Catalog data sources, identify latency constraints, and capture lineage. Business intelligence data often spans transactional systems, CRM, marketing platforms, and third-party feeds. Early investment in lineage and schema contracts prevents surprises during rollout.
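A schema contract can start out very simply. The sketch below checks incoming records against a declared set of fields and types. The field names and the `SALES_CONTRACT` mapping are hypothetical, and dedicated tools such as Great Expectations cover far more than this.

```python
from typing import Any, Dict, List

# Hypothetical contract for a sales event: field name -> required Python type.
SALES_CONTRACT: Dict[str, type] = {
    "sku": str,
    "store_id": str,
    "units_sold": int,
    "sold_at": str,
}


def validate(record: Dict[str, Any], contract: Dict[str, type]) -> List[str]:
    """Return a list of contract violations; an empty list means the record passes."""
    errors: List[str] = []
    for field, expected in contract.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            actual = type(record[field]).__name__
            errors.append(f"{field}: expected {expected.__name__}, got {actual}")
    return errors


good = {"sku": "SKU-1", "store_id": "S-9", "units_sold": 4, "sold_at": "2024-01-01"}
bad = {"sku": "SKU-1", "units_sold": "4", "sold_at": "2024-01-01"}
print(validate(good, SALES_CONTRACT))
print(validate(bad, SALES_CONTRACT))
```

Running a check like this at the point where a source feeds the pipeline surfaces upstream schema changes before they reach a model.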

3. Prototype with hybrid patterns

Build a minimal working prototype that uses both event-driven and synchronous flows. For example, an overnight batch model generates demand forecasts while a real-time fraud detector runs synchronously at transaction time. Use lightweight orchestration and a vector store for rapid iteration.

4. Add guardrails and observability

Instrument data quality checks, model performance metrics, and business SLOs. Define rollback and escalation paths. Monitoring should track latency, throughput, distributional drift, and the business metric linked to each automation.
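One common way to track distributional drift is the Population Stability Index (PSI), which compares a feature's binned distribution at training time against recent traffic. The sketch below is a minimal stdlib version. The bin count, the epsilon floor, and the 0.2 alert threshold are assumptions to tune, not settings prescribed by this article.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a recent sample."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant baseline

    def fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Floor each fraction at a small epsilon to avoid log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]       # roughly uniform on [0, 1)
shifted = [0.5 + i / 200 for i in range(100)]  # mass moved to the upper half
DRIFT_THRESHOLD = 0.2  # assumed alert level; tune per feature

print(psi(baseline, baseline), psi(baseline, shifted) > DRIFT_THRESHOLD)
```

A drift alert on its own says nothing about business impact, so it helps to surface it next to the business metric tied to the same automation.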

5. Iterate and scale

Move from pilot to production by improving automation reliability, implementing role-based access, and integrating with RPA or service APIs for action execution. Decide which elements you want managed and which you need in-house for compliance.
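Before wiring model outputs to RPA or service APIs, it can help to put a small policy function between the recommendation and the action. The sketch below is one possible shape. The `Recommendation` fields, the thresholds, and the action names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    sku: str
    order_qty: int
    confidence: float   # model confidence in [0, 1]
    order_value: float  # monetary value of the proposed order


def decide(
    rec: Recommendation,
    min_confidence: float = 0.8,     # assumed threshold
    max_auto_value: float = 5000.0,  # assumed spending limit for automation
) -> str:
    """Map a model recommendation to an action name."""
    if rec.order_qty <= 0:
        return "no_action"
    if rec.confidence >= min_confidence and rec.order_value <= max_auto_value:
        return "auto_execute"
    # Anything uncertain or expensive goes to a person.
    return "escalate_to_human"


print(decide(Recommendation("SKU-1", 40, 0.93, 1200.0)))
print(decide(Recommendation("SKU-2", 500, 0.95, 20000.0)))
print(decide(Recommendation("SKU-3", 10, 0.55, 300.0)))
```

Keeping the policy separate from the model means thresholds can be tightened or loosened without retraining, and the escalation path stays explicit.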

For developers and engineers: architecture and scaling considerations

Designing an AIOS requires trade-offs. Here are practical decisions you’ll face and patterns to follow.

Orchestration vs choreography

Centralized orchestration (a workflow engine controlling each step) simplifies visibility and retry semantics. Choreography (event-driven microservices) scales better and decouples teams, but increases the complexity of tracing and consistency. Many teams use a hybrid: orchestration for critical chains and choreography for elastic, best-effort flows.
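The visibility and retry benefits of centralized orchestration show up even in a toy runner. The sketch below runs steps in order, retries failures, and keeps a single log. The function names and the retry policy are illustrative assumptions, not the API of Airflow, Dagster, or any other engine.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[Dict], Dict]]


def run_pipeline(
    steps: List[Step], context: Dict, max_retries: int = 2
) -> Tuple[Dict, List[str]]:
    """Run steps sequentially, retrying each up to max_retries times."""
    log: List[str] = []
    for name, fn in steps:
        for attempt in range(1, max_retries + 2):
            try:
                context = fn(context)
                log.append(f"{name}:ok:attempt{attempt}")
                break
            except Exception as exc:
                log.append(f"{name}:fail:attempt{attempt}")
                if attempt == max_retries + 1:
                    raise RuntimeError(f"step {name} exhausted retries") from exc
    return context, log


calls = {"forecast": 0}


def flaky_forecast(ctx: Dict) -> Dict:
    """Hypothetical step that fails once, then succeeds."""
    calls["forecast"] += 1
    if calls["forecast"] == 1:
        raise TimeoutError("transient failure")
    return {**ctx, "forecast": 120}


def recommend(ctx: Dict) -> Dict:
    """Hypothetical step: order the gap between forecast and stock on hand."""
    return {**ctx, "order_qty": max(ctx["forecast"] - ctx["on_hand"], 0)}


result, log = run_pipeline(
    [("forecast", flaky_forecast), ("recommend", recommend)], {"on_hand": 40}
)
print(result)
print(log)
```

In a choreographed design the same retry and logging logic would live in each service, which is where the extra tracing work comes from.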

Serving: monoliths vs modular pipelines

Monolithic serving endpoints that bundle preprocessing, model inference, and postprocessing simplify deployment and reduce network cost, but are harder to scale independently. Modular pipelines let you scale compute-heavy model nodes (GPUs) separately from lightweight business logic, at the cost of additional RPC latency and complexity. Consider co-locating latency-sensitive components on the same host to cut round-trips.

Latency, throughput, and cost models

Define SLOs for tail latency (for example, p95 or p99) rather than averages alone. Real-time scoring often requires sub-100 ms responses, which drives choices toward optimized inference runtimes, CPU inference, or warm GPU pools. Batch scoring optimizes cost per prediction by amortizing GPU use. Track cost metrics at the model and endpoint level, including egress, storage, and orchestration costs.
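The arithmetic behind a tail-latency SLO and a cost-per-prediction figure is simple enough to sketch. The example below uses a nearest-rank percentile and invented numbers: the latency samples, the 100 ms target, and the hourly cost are assumptions for illustration only.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile for an integer pct in 1..100."""
    if not values:
        raise ValueError("values must be non-empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def cost_per_1k(hourly_cost: float, predictions_per_hour: int) -> float:
    """Serving cost per 1,000 predictions at a given hourly throughput."""
    return hourly_cost * 1000 / predictions_per_hour


# Hypothetical latency samples in milliseconds: mostly fast, with a slow tail.
latencies_ms = [20.0] * 98 + [150.0, 400.0]
P99_SLO_MS = 100.0  # assumed target

p50 = percentile(latencies_ms, 50)
p99 = percentile(latencies_ms, 99)
print(p50, p99, p99 <= P99_SLO_MS)
print(cost_per_1k(hourly_cost=2.0, predictions_per_hour=40_000))
```

In this made-up sample the median looks healthy while p99 breaches the target, which is why the SLO is stated on the tail.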

Deployment and hardware

Kubernetes remains the de facto platform for scaling inference, but serverless options reduce ops burden. Managed services speed time-to-market; self-hosting provides control over data residency and cost. For teams exploring accelerators, be aware of footprint differences between GPUs and TPUs. If your roadmap includes experimental hardware, note that quantum computing hardware for AI remains exploratory for most BI workloads and is not yet a production lever for typical inference tasks.

Observability and model governance

Instrument feature distributions, prediction histograms, latency percentiles, and business impact metrics. Implement audit logs that link inputs to outputs, decisions, and downstream actions. For regulated domains, add explainability layers and model cards so reviewers can validate logic and fairness.
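An audit record that ties an input to its prediction, decision, and downstream action might look like the sketch below. The field names and the `make_record` helper are hypothetical. Hashing the canonicalized features is one way to reference inputs without copying sensitive values into the log.

```python
import hashlib
import json
import uuid
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Any, Dict


@dataclass(frozen=True)
class AuditRecord:
    decision_id: str
    model_version: str
    input_hash: str
    prediction: float
    decision: str
    action: str
    recorded_at: str


def make_record(
    model_version: str,
    features: Dict[str, Any],
    prediction: float,
    decision: str,
    action: str,
) -> AuditRecord:
    """Build an immutable record linking input, output, decision, and action."""
    # Sorting keys makes the hash independent of dict ordering.
    canonical = json.dumps(features, sort_keys=True).encode("utf-8")
    return AuditRecord(
        decision_id=uuid.uuid4().hex,
        model_version=model_version,
        input_hash=hashlib.sha256(canonical).hexdigest(),
        prediction=prediction,
        decision=decision,
        action=action,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )


def to_json_line(record: AuditRecord) -> str:
    """Serialize a record as one JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


rec = make_record("demand-v3", {"sku": "SKU-1", "store": "S-9"}, 0.91, "approve", "create_po")
print(to_json_line(rec))
```

Because the record carries the model version and an input hash, a reviewer can later match a downstream action to the exact model and input that produced it.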

For product and industry leaders: ROI, vendor choices, and risk

When making procurement decisions, weigh three dimensions: velocity (how fast you can ship), control (data and compliance), and cost. Managed AIOS offerings reduce setup time but may constrain integration patterns or store data in vendor clouds. Self-hosted stacks let you optimize costs and meet strict data residency rules, but increase operational overhead.

Comparing vendors and open source

Vendor platforms (Databricks, Snowflake with integrated AI features, Microsoft Fabric, or specialized AI orchestration platforms) bring integrated pipelines, governance, and managed model serving. Open-source building blocks (Airflow, Dagster, Ray, LangChain, the Hugging Face ecosystem, vector DBs) allow bespoke stacks and avoid vendor lock-in but require integration effort.

Measuring ROI and operational impact

ROI is rarely just model accuracy. Include cycle time reductions (analyst hours saved), avoided costs (reduced stockouts or fraud losses), and revenue impact (better targeting). Track adoption metrics: percent of automated recommendations accepted, human interventions per week, and false positive rates. When automation runs at scale, even a gain of a few percent in a business KPI can quickly justify the engineering investment.
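The adoption metrics and a first-pass ROI figure reduce to a few ratios. The sketch below uses invented numbers throughout, and the `AutomationStats` fields are hypothetical names for counts that would come from your own logs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutomationStats:
    recommendations: int      # automated recommendations issued
    accepted: int             # recommendations accepted as-is
    human_interventions: int  # recommendations a person had to change or override
    false_positives: int      # recommendations later judged wrong


def acceptance_rate(stats: AutomationStats) -> float:
    return stats.accepted / stats.recommendations


def intervention_rate(stats: AutomationStats) -> float:
    return stats.human_interventions / stats.recommendations


def false_positive_rate(stats: AutomationStats) -> float:
    return stats.false_positives / stats.recommendations


def simple_roi(total_benefit: float, total_cost: float) -> float:
    """(benefit - cost) / cost, with both measured over the same period."""
    return (total_benefit - total_cost) / total_cost


# Made-up pilot figures for illustration only.
week = AutomationStats(recommendations=200, accepted=150, human_interventions=30, false_positives=10)
benefit = 90_000.0 + 60_000.0  # e.g. analyst hours saved plus avoided stockout losses
cost = 100_000.0               # e.g. engineering plus infrastructure

print(acceptance_rate(week), intervention_rate(week), false_positive_rate(week))
print(simple_roi(benefit, cost))
```

The benefit term here adds two of the categories named above. Which categories count, and over what period, is a business decision the code cannot make for you.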

Operational challenges and governance

Common operational pitfalls include brittle data contracts, drift without automated retraining, and insufficient incident response plans for automated actions. Establish review cadences, access controls, and escalation processes. Ensure legal and privacy teams review automated decisions that affect customers, and instrument explainability before broadening scope.

Case study: retail chain automates inventory decisions

A mid-size retailer built an AIOS for business intelligence to automate replenishment and localized promotions. The team started with a pilot: an overnight demand model combined with in-store telemetry to recommend purchase orders. They added a real-time anomaly detector to flag sudden demand shifts due to local events.

Architecture choices included a feature store for sales history, a vector store for product similarity, model serving via a managed inference service, and an orchestration layer (Dagster) to run scheduled jobs and retries. They integrated RPA to push approved orders to their ERP. Within six months they reduced manual replenishment effort by 60% and cut stockout incidents in pilot stores by 20%.

The biggest lessons were non-technical: investing in product catalog hygiene and clear exception workflows paid dividends. The knowledge graph that connected SKUs, suppliers, and regional stores substantially improved recommendation precision.

Future outlook and pragmatic innovation

As model capabilities expand, expect AIOS to embed more decision logic and richer multimodal signals. However, innovation will come more from integration patterns (better model lifecycle automation, solid lineage, improved observability, and dependable action execution) than from single-model breakthroughs. Research into quantum computing hardware for AI continues, but most business intelligence tasks will remain on classical accelerators for the foreseeable future.

Open-source projects and standards for model governance, data lineage, and agent interoperability will gain traction. Teams that build modular, observable systems will outperform those chasing monolithic, closed solutions.

Next Steps

Practical first moves:

- Run a narrow pilot with clear KPIs and an instrumentation plan.

- Choose an orchestration approach: start centralized for visibility, then decouple high-throughput pipelines.

- Invest in lineage and small-scale knowledge graphs to improve entity resolution early.

- Design SLOs that cover both system reliability and business outcomes.

- Evaluate managed vendors when speed-to-market matters most, and open-source stacks when control is essential.

Key Takeaways

An AIOS for business intelligence is less about a single model and more about reliable, observable decision workflows. Prioritize data, governance, and integration patterns to realize real ROI.

Building an AIOS for business intelligence is an engineering and product program, not a one-off model project. With careful architecture, clear KPIs, and robust governance, teams can automate high-volume decisions safely and scale insights from a few reports to continuous, actionable intelligence.