Automatic media production — images, video, audio, and multimodal assets — is moving from one-off experiments into mission-critical infrastructure. This article walks through what an AIOS automatic media creation platform looks like in practice: core concepts explained for beginners, architecturally detailed patterns for engineers, and market and operational guidance for product and business teams.

Why AIOS automatic media creation matters

Imagine a small marketing team that needs localized banner images for 100 markets every week. Traditionally this requires designers, revisions, and file management. With an AI operating system for automatic media creation, the same team defines templates, sets brand constraints, and the system generates assets at scale, applying localization, A/B variations, and delivery to CDNs.

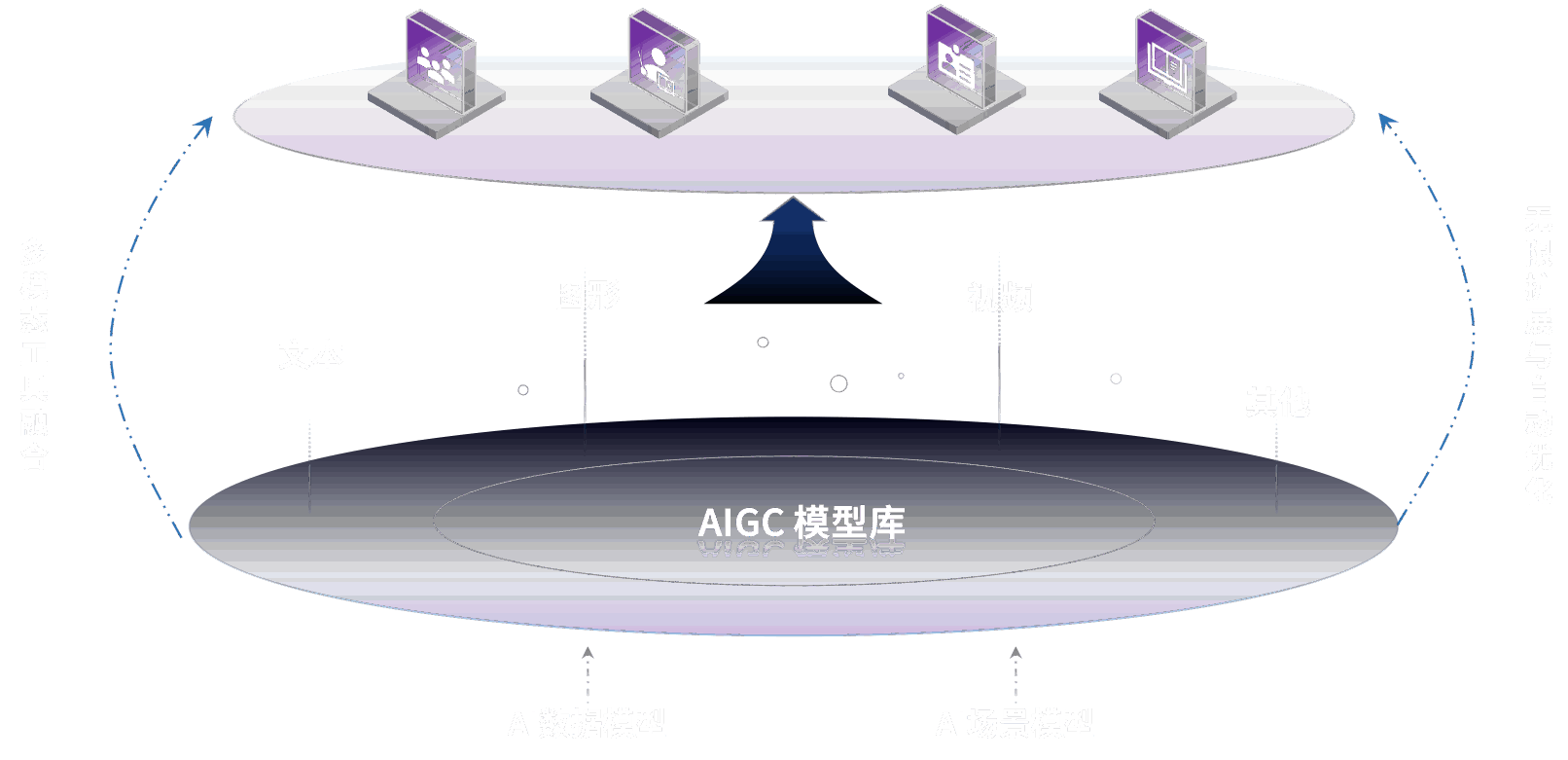

At a high level, an AIOS focused on automatic media creation automates the end-to-end lifecycle: prompt or template authoring, model selection, orchestration of preprocessing and postprocessing, validation and human-in-the-loop checks, and asset delivery and cataloging. It’s not just model inference — it’s an operational stack that treats media generation as a repeatable, governed service.

Core components explained for beginners

- Input layer: Where prompts, templates, and metadata enter the system. Inputs can be manual, triggered by events, or batch uploads from CMS or DAM systems.

- Orchestration layer: The AIOS scheduler and workflow engine that sequences steps: text normalization, model selection, image generation, video rendering, quality checks, and distribution.

- Model inference layer: The set of models (vision, audio, text-to-image, text-to-speech) with options for hosted endpoints or self-hosting.

- Postprocessing and validation: Style transfer, format conversions, watermarking, brand compliance checks, and human-review queues.

- Storage and delivery: Media asset storage, CDN integration, and metadata indexing for search and reuse.

- Governance and observability: Audit logs, provenance metadata, content lineage, and monitoring for quality and misuse.
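To make the layering concrete, here is a minimal sketch of the components as composable stages. It assumes a hypothetical `MediaJob` record and stub stage functions (`input_stage`, `generate_stage`, `validate_stage`, `deliver_stage`). The generation step does not call a real model, and the `cdn://` path is a placeholder.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class MediaJob:
    """Hypothetical job record that flows through every layer."""
    template_id: str
    params: dict
    prompt: str = ""
    asset: bytes = b""
    approved: bool = False
    delivered_to: str = ""
    log: list = field(default_factory=list)  # simple audit trail of stages run


Stage = Callable[[MediaJob], MediaJob]


def input_stage(job: MediaJob) -> MediaJob:
    # Input layer: render template metadata into a prompt (sorted for determinism).
    pairs = ", ".join(f"{k}={v}" for k, v in sorted(job.params.items()))
    job.prompt = f"{job.template_id}: {pairs}"
    job.log.append("input")
    return job


def generate_stage(job: MediaJob) -> MediaJob:
    # Model inference layer (stub): a real system would call a model endpoint here.
    job.asset = job.prompt.encode("utf-8")
    job.log.append("generate")
    return job


def validate_stage(job: MediaJob) -> MediaJob:
    # Postprocessing/validation: trivial stand-in for brand and safety checks.
    job.approved = len(job.asset) > 0
    job.log.append("validate")
    return job


def deliver_stage(job: MediaJob) -> MediaJob:
    # Storage and delivery: only approved assets receive a (placeholder) CDN path.
    if job.approved:
        job.delivered_to = f"cdn://assets/{job.template_id}"
    job.log.append("deliver")
    return job


def run_pipeline(job: MediaJob, stages: list[Stage]) -> MediaJob:
    # Orchestration layer: run the stages in order.
    for stage in stages:
        job = stage(job)
    return job


PIPELINE = [input_stage, generate_stage, validate_stage, deliver_stage]
```

Because every layer shares the same `MediaJob -> MediaJob` signature, you can swap a stub for a real model call or insert a human-review stage without touching the other stages.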

Architectural patterns for developers and engineers

There are multiple ways to assemble an AIOS for automatic media creation; common patterns are event-driven pipelines, synchronous API flows, and hybrid approaches.

Event-driven pipelines

Triggering media generation from events (a new product is published, a campaign starts) works well for asynchronous workloads. An event bus such as Kafka or Pulsar fans out messages to workers that run multi-step jobs. These jobs are coordinated by a workflow engine such as Argo Workflows or Prefect, or by a distributed runtime such as Ray. Advantages: resilience, retry semantics, and natural scalability. Trade-offs: higher operational complexity and eventual consistency in delivery.
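The fan-out and retry semantics can be sketched in-process with `asyncio`. This minimal sketch rests on several assumptions. An `asyncio.Queue` stands in for a Kafka or Pulsar topic. A hypothetical `render` coroutine stands in for a model call that fails transiently. `MAX_RETRIES`, `BASE_DELAY_S`, and the dead-letter list are illustrative choices, not part of any named framework.

```python
import asyncio

MAX_RETRIES = 3       # assumption: tune per pipeline step
BASE_DELAY_S = 0.01   # assumption: short for the demo; real systems back off in seconds


async def render(event: dict, attempt: int) -> str:
    # Hypothetical render step: fails for the first `fail_times` attempts
    # to mimic transient errors such as a GPU node being preempted.
    if attempt < event.get("fail_times", 0):
        raise RuntimeError("transient GPU error")
    return f"rendered:{event['id']}"


async def worker(queue: asyncio.Queue, results: dict, dead_letters: list) -> None:
    while True:
        event = await queue.get()
        try:
            for attempt in range(MAX_RETRIES + 1):
                try:
                    results[event["id"]] = await render(event, attempt)
                    break
                except RuntimeError:
                    if attempt == MAX_RETRIES:
                        dead_letters.append(event["id"])  # give up; park for inspection
                    else:
                        await asyncio.sleep(BASE_DELAY_S * 2 ** attempt)  # exponential backoff
        finally:
            queue.task_done()


async def fan_out(events: list[dict], n_workers: int = 4) -> tuple[dict, list]:
    queue: asyncio.Queue = asyncio.Queue()
    results: dict = {}
    dead_letters: list = []
    for event in events:
        queue.put_nowait(event)
    tasks = [asyncio.create_task(worker(queue, results, dead_letters)) for _ in range(n_workers)]
    await queue.join()              # wait until every event is processed or dead-lettered
    for task in tasks:
        task.cancel()               # workers loop forever, so stop them explicitly
    await asyncio.gather(*tasks, return_exceptions=True)
    return results, dead_letters
```

In a real deployment, retry and dead-letter handling usually lives in the workflow engine or consumer framework rather than in hand-written worker loops. The sketch only illustrates the semantics.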

Synchronous API flows

For interactive authoring experiences, a synchronous API is preferred. Frontends call an orchestration API that routes to a model endpoint and returns generated assets or previews. This requires tight latency budgets and often a layered approach: fast lightweight models for previews, then background high-fidelity generation. Here the engineering trade-off is between responsiveness and compute cost.

Hybrid orchestration

Many systems mix both: an immediate preview via cheap inference and a background job for final render and distribution. This pattern improves perceived latency while retaining the robustness of asynchronous processing.
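Here is a minimal sketch of the hybrid pattern with `asyncio`. It assumes two hypothetical coroutines, `preview_model` and `final_model`, whose sleeps stand in for fast and slow inference. The handler awaits only the preview and schedules the final render as a background task.

```python
import asyncio


async def preview_model(prompt: str) -> str:
    # Hypothetical fast, low-fidelity model.
    await asyncio.sleep(0.01)
    return f"preview:{prompt}"


async def final_model(prompt: str) -> str:
    # Hypothetical slow, high-fidelity model.
    await asyncio.sleep(0.05)
    return f"final:{prompt}"


async def handle_request(prompt: str, background: set) -> tuple[str, asyncio.Task]:
    """Return a preview right away and schedule the final render in the background."""
    preview = await preview_model(prompt)
    task = asyncio.create_task(final_model(prompt))
    background.add(task)                        # keep a reference so the task is not garbage-collected
    task.add_done_callback(background.discard)  # drop the reference once it finishes
    return preview, task


async def demo() -> tuple[str, bool, str]:
    background: set = set()
    preview, task = await handle_request("red sneaker banner", background)
    done_at_return = task.done()  # the final render has not run yet when the preview is returned
    final = await task            # in production, completion would publish to storage/CDN instead
    return preview, done_at_return, final
```

The user sees the preview after the fast path alone. The expensive render still completes, and its retry and delivery logic can be handed to the asynchronous pipeline.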

Model choices and integration strategies

Media creation uses a mix of models: text-to-image, image refinement, speech synthesis, and sequence models for narratives. Teams must decide between managed inference (cloud endpoints from Hugging Face, OpenAI, or commercial vendors) and self-hosted models using frameworks like KServe, Triton, or Ray.

Key integration considerations:

- Model versioning and registry (MLflow, private model registries).

- Latency vs quality trade-offs: large generative models such as diffusion models typically produce higher-quality output but need more compute; smaller models can provide near-instant previews.

- Input-conditioning and prompt engineering: store templates and prompt histories to reproduce outputs and iterate on quality.
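Reproducibility depends on recording every input that influences an output. The sketch below assumes a hypothetical `GenerationRecord` that holds the template, its variables, the model name and version, and the random seed. It uses the standard library's `string.Template` for rendering and SHA-256 for a stable fingerprint. The field set is an assumption; real systems may also need sampler settings or postprocessing parameters.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from string import Template


@dataclass(frozen=True)
class GenerationRecord:
    """Hypothetical record stored with each asset so the output can be reproduced."""
    template_id: str
    template_text: str
    variables: tuple     # sorted (key, value) pairs: hashable and independent of insertion order
    model_name: str
    model_version: str
    seed: int

    def prompt(self) -> str:
        # Template.substitute raises KeyError if a placeholder has no value.
        return Template(self.template_text).substitute(dict(self.variables))

    def fingerprint(self) -> str:
        # Stable hash over every field that affects the output.
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def make_record(template_id: str, template_text: str, variables: dict,
                model_name: str, model_version: str, seed: int) -> GenerationRecord:
    return GenerationRecord(template_id, template_text, tuple(sorted(variables.items())),
                            model_name, model_version, seed)
```

If you store the fingerprint with each asset, you can detect when two outputs came from identical inputs. A changed model version yields a different fingerprint even when the prompt is unchanged.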

Operational concerns: deployment, scaling, and cost

Automatic media creation systems are compute- and storage-intensive. Design decisions here directly affect cost and scalability.

- Autoscaling inference: Use pooled GPUs for bursty jobs and run cheap preprocessing on CPU workers. Managed services reduce operational burden but increase per-inference cost.

- Batch vs real-time inference: Batch generation can amortize expensive model warm-ups; real-time needs warm pools and larger budgets.

- Storage costs: Raw assets, intermediate files, and multiple versions multiply storage needs. Use lifecycle policies: keep thumbnails and final assets, archive or deduplicate intermediates.

- Cost models: Track cost per asset, cost per minute of GPU time, and storage/egress. Build pricing or internal chargeback around these signals.
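The cost signals above can be combined into a per-asset figure. This sketch makes three assumptions: GPU time is billed per second at an hourly rate, each batch pays for one model warm-up, and storage is billed per GB-month. All field names and the example prices below are illustrative, not vendor pricing.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    gpu_seconds_per_asset: float      # measured inference time per asset
    warmup_seconds: float             # model load / cold start, paid once per batch
    batch_size: int                   # assets generated per warm-up
    gpu_usd_per_hour: float
    storage_gb_per_asset: float       # final asset plus retained versions
    storage_usd_per_gb_month: float
    months_retained: float
    egress_gb_per_asset: float
    egress_usd_per_gb: float


def cost_per_asset(c: CostInputs) -> dict:
    gpu_usd_per_second = c.gpu_usd_per_hour / 3600
    # Warm-up is shared across the batch, so larger batches amortize it.
    gpu = (c.warmup_seconds / c.batch_size + c.gpu_seconds_per_asset) * gpu_usd_per_second
    storage = c.storage_gb_per_asset * c.storage_usd_per_gb_month * c.months_retained
    egress = c.egress_gb_per_asset * c.egress_usd_per_gb
    return {"gpu": gpu, "storage": storage, "egress": egress, "total": gpu + storage + egress}
```

Take an example with 6 GPU-seconds per asset, a 120-second warm-up, and a GPU at $3.60/hour. The GPU share is about $0.126 per asset when each job runs alone and about $0.0072 in batches of 100. This is the amortization effect that makes batch generation cheaper.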

Observability, monitoring, and failure modes

Monitoring must cover both infrastructure and content quality. Instrument metrics like p50/p95 latency, throughput (assets per minute), GPU utilization, retry rates, and degradation in perceptual quality measured with task-specific metrics.
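Here is a sketch that turns raw samples into the signals named above, using `statistics.quantiles` from the Python standard library for p50/p95. The alert thresholds (`p95_budget_ms`, `min_mean_quality`) are hypothetical. The `quality_scores` input is assumed to come from some task-specific perceptual metric scaled to 0..1.

```python
import statistics


def latency_summary(latencies_ms: list[float]) -> dict:
    # 'inclusive' treats the samples as the whole population; needs at least two samples.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94]}


def check_slo(latencies_ms: list[float], quality_scores: list[float],
              p95_budget_ms: float = 2000.0, min_mean_quality: float = 0.8) -> list[str]:
    """Hypothetical alert rule combining an infrastructure SLO with a quality floor."""
    alerts = []
    summary = latency_summary(latencies_ms)
    if summary["p95"] > p95_budget_ms:
        alerts.append(f"p95 latency {summary['p95']:.0f} ms exceeds {p95_budget_ms:.0f} ms")
    mean_quality = statistics.fmean(quality_scores)
    if mean_quality < min_mean_quality:
        alerts.append(f"mean quality {mean_quality:.2f} below {min_mean_quality:.2f}")
    return alerts
```

Evaluating both checks in one rule means a silent quality regression raises an alert even when latency and throughput look healthy.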

Common failure modes include:

- Model degradation after a version bump — track output quality via automated visual tests and human sampling.

- Latency spikes from cold-started GPU instances — mitigate with warm pools and progress streaming for large renders.

- Pipeline poisoning where bad templates or prompts cascade — validate inputs, quarantine failing templates, and add user-level rate limits.
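Two of the mitigations for pipeline poisoning can be sketched with small in-memory structures. One quarantines a template after repeated consecutive failures; the other is a per-user token bucket for rate limiting. The threshold, rate, and capacity values are assumptions. A production system would keep this state in a shared store rather than in process memory.

```python
import time
from collections import defaultdict


class TemplateQuarantine:
    """Quarantine a template after `threshold` consecutive failed renders (hypothetical policy)."""

    def __init__(self, threshold: int = 3):
        self.threshold = threshold
        self.consecutive_failures: defaultdict = defaultdict(int)
        self.quarantined: set = set()

    def record(self, template_id: str, ok: bool) -> None:
        if ok:
            self.consecutive_failures[template_id] = 0  # any success resets the streak
            return
        self.consecutive_failures[template_id] += 1
        if self.consecutive_failures[template_id] >= self.threshold:
            self.quarantined.add(template_id)

    def allowed(self, template_id: str) -> bool:
        return template_id not in self.quarantined


class TokenBucket:
    """Per-user limit: refills `rate` tokens per second, allows bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens: defaultdict = defaultdict(lambda: float(capacity))
        self.last: dict = {}

    def try_acquire(self, user: str) -> bool:
        now = self.clock()
        elapsed = now - self.last.get(user, now)
        self.last[user] = now
        self.tokens[user] = min(self.capacity, self.tokens[user] + elapsed * self.rate)
        if self.tokens[user] >= 1:
            self.tokens[user] -= 1
            return True
        return False
```

The injectable `clock` keeps the bucket testable without real waiting.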

Security, governance, and compliance

Automatic media creation raises intellectual property, privacy, and safety concerns. Governance needs to be baked into the AIOS surface:

- Audit trails for generated assets and the prompts/templates that produced them.

- Policy engines that block or flag outputs that violate brand rules or regulatory constraints.

- Content provenance metadata to indicate synthetic origin for downstream consumers.

- Model access controls and secrets management for hosted APIs and self-hosted clusters.
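Here is a minimal sketch of provenance metadata plus a tamper-evident audit trail. It assumes a hypothetical record schema (`asset_sha256`, `prompt_sha256`, `synthetic`, and so on). Each audit entry is chained to the previous one with SHA-256, so editing an earlier entry breaks verification. It does not implement any formal provenance standard; standardized manifests and watermarking would sit on top of something like this.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def provenance_record(asset: bytes, prompt: str, template_id: str, model_name: str,
                      model_version: str, reviewer: Optional[str] = None) -> dict:
    # Hypothetical schema: hashes identify the exact asset and prompt without storing them inline.
    return {
        "asset_sha256": hashlib.sha256(asset).hexdigest(),
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "template_id": template_id,
        "model": {"name": model_name, "version": model_version},
        "synthetic": True,              # lets downstream consumers label the asset
        "human_reviewer": reviewer,
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


class AuditLog:
    """In-memory, hash-chained audit trail: each entry commits to the one before it."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries: list = []

    @staticmethod
    def _hash(prev: str, record: dict) -> str:
        body = json.dumps({"prev": prev, "record": record}, sort_keys=True)
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        entry_hash = self._hash(prev, record)
        self.entries.append({"prev": prev, "record": record, "entry_hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or self._hash(prev, entry["record"]) != entry["entry_hash"]:
                return False
            prev = entry["entry_hash"]
        return True
```
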

Regulatory attention to synthetic media is increasing; incorporate watermarking and clearly label assets to reduce legal risk.

Quality safety: defenses and adversarial awareness

As platforms scale, they also become targets for adversarial inputs and misuse, so teams should model these threats and mitigate them. Research on adversarial detection and robust training is directly relevant. For example, work on adversarial attacks, sometimes grouped under the label AI adversarial networks, studies small, deliberately crafted input perturbations that degrade or redirect model outputs. Defensive strategies include adversarial testing during CI, input sanitization, and human review gates for high-risk outputs.
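Input sanitization for text prompts can be sketched with the standard library. This assumes a hypothetical length limit and blocklist (`MAX_PROMPT_CHARS`, `BLOCKED_TERMS`). The function normalizes Unicode, strips invisible control and format characters, and returns reasons for review instead of silently rejecting the prompt. It does not address pixel-level adversarial perturbations on image inputs, which need model-side defenses and adversarial testing.

```python
import re
import unicodedata

MAX_PROMPT_CHARS = 500                            # assumption
BLOCKED_TERMS = {"ignore previous instructions"}  # hypothetical; real lists come from a policy engine


def sanitize_prompt(raw: str) -> tuple[str, list[str]]:
    """Normalize a user prompt and return (clean_prompt, reasons_for_review)."""
    reasons = []
    text = unicodedata.normalize("NFKC", raw)
    # Drop control (Cc) and format (Cf) characters such as zero-width spaces that can hide content;
    # newlines and tabs are kept here and collapsed to spaces below.
    text = "".join(ch for ch in text if unicodedata.category(ch) not in ("Cc", "Cf") or ch in "\n\t")
    text = re.sub(r"\s+", " ", text).strip()
    if len(text) > MAX_PROMPT_CHARS:
        text = text[:MAX_PROMPT_CHARS]
        reasons.append("truncated")
    lowered = text.casefold()
    for term in sorted(BLOCKED_TERMS):
        if term in lowered:
            reasons.append(f"blocked term: {term}")
    return text, reasons
```

A non-empty `reasons` list can route the request to the human review queue rather than straight to generation.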

Case study: a retail use case

Consider a retailer that needs localized promotional videos and product photos. They built an AIOS automatic media creation pipeline that:

- Accepts product feeds and regional rules from the PIM (product information management) system.

- Triggers an event-driven workflow to generate hero images, short promotional clips, and localized voiceovers.

- Runs automated compliance checks (brand color, prohibited content) and queues borderline cases for human review.

- Indexes assets with embeddings for reuse and A/B testing, and publishes to the CDN with lifecycle policies for seasonal content.
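Reusing assets via embeddings reduces to nearest-neighbor search. The sketch assumes embeddings have already been computed by some image or text embedding model; the vectors in the test are toy values. It uses brute-force cosine similarity. A vector store such as Milvus would replace `AssetIndex` at catalog scale.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class AssetIndex:
    """Brute-force in-memory index mapping asset IDs to embeddings."""

    def __init__(self):
        self.items: dict = {}

    def add(self, asset_id: str, embedding: list[float]) -> None:
        self.items[asset_id] = embedding

    def search(self, query: list[float], k: int = 3, min_score: float = 0.0) -> list[tuple[str, float]]:
        # Score every asset, drop weak matches, return the top k by similarity.
        scored = [(asset_id, cosine(query, emb)) for asset_id, emb in self.items.items()]
        scored = [s for s in scored if s[1] >= min_score]
        return sorted(scored, key=lambda s: s[1], reverse=True)[:k]
```

A high `min_score` finds near-duplicates worth reusing instead of regenerating. A lower one surfaces related variants for A/B tests.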

Benefits realized: shortened production timelines, a 60% reduction in manual design effort for campaign assets, and measurable lift in localized click-through rates. Operational lessons: early investment in template hygiene and strict schema validation prevented thousands of failed renders.

Vendor choices and open-source building blocks

Teams typically combine managed and open-source tools. Common choices include:

- Workflow engines: Argo Workflows, Prefect, or Tekton for orchestration.

- Model serving and scaling: Ray, KServe, Triton, or managed cloud inference endpoints.

- Embedding and search: open-source vector databases such as Milvus or Weaviate, or their managed cloud offerings.

- Metadata and lineage: MLflow or internal model registries.

Hosted options for media-oriented models have improved. Hugging Face and cloud vendors now offer image and video inference endpoints, and frameworks such as LangChain simplify prompt orchestration. Teams should weigh managed convenience against control and cost. Self-hosting can lower per-inference cost at scale but requires GPU operations expertise.

Where conversational AI fits in

Multimodal systems increasingly tie media generation to conversational experiences. Large conversational models such as Qwen show how dialogue can drive context-aware media creation: generating images or narration from the conversation, or accepting feedback mid-generation. Integration patterns include a conversational orchestration layer that manages state, grounds responses in product catalogs, and invokes media pipelines when the dialogue requires a generated asset.
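The integration pattern can be sketched as a small turn router. It assumes the conversational model emits structured intents (for example, through tool or function calling) as dicts with a hypothetical `intent` key. A toy in-memory `CATALOG` provides grounding. The `generate` callable stands in for the media pipeline.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

CATALOG = {"sku-123": {"name": "Trail Runner", "color": "red"}}  # hypothetical product catalog


@dataclass
class DialogState:
    sku: Optional[str] = None
    feedback: list[str] = field(default_factory=list)  # accumulated refinement requests


def handle_turn(state: DialogState, turn: dict, generate: Callable[[str], str]) -> str:
    """Route one dialogue turn; `turn` is assumed to be a structured intent from the model."""
    intent = turn["intent"]
    if intent == "select_product":
        if turn["sku"] not in CATALOG:
            return "Unknown product."
        state.sku = turn["sku"]
        return f"Selected {CATALOG[state.sku]['name']}."
    if intent in ("make_image", "refine_image"):
        if state.sku is None:
            return "Which product should the image show?"
        if intent == "refine_image":
            state.feedback.append(turn["feedback"])
        product = CATALOG[state.sku]  # grounding: product facts come from the catalog, not the model
        prompt = f"{product['color']} {product['name']} hero image"
        if state.feedback:
            prompt += "; " + "; ".join(state.feedback)
        return generate(prompt)       # invoke the media pipeline only when an asset is needed
    return "Sorry, I can't help with that yet."
```

Building the prompt from catalog fields rather than free model text keeps product facts such as name and color consistent across generated assets. The accumulated feedback supports iterative refinement.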

Implementation playbook

1) Start with a minimum viable workflow: support a single media type, a small set of templates, and one model. Measure end-to-end metrics: time-to-first-preview and cost-per-asset.

2) Add governance: implement template validation, watermarking, and retention policies.

3) Introduce observability: collect latency, GPU utilization, asset quality signals, and error rates. Automate alerts for p95 latency and failing quality checks.

4) Iterate on model strategy: offer a preview model for UX and a high-fidelity background model for final assets. Version models and run A/B tests to measure business impact.

5) Scale thoughtfully: use batch jobs for bulk campaigns, autoscale for spikes, and implement caching for repeated prompts.
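Caching repeated prompts requires a key that covers everything that determines the output. This sketch assumes the key combines the whitespace-normalized prompt, template ID, model version, and seed. Eviction is LRU, built on `collections.OrderedDict`. The `render` callable and size limit are hypothetical. Caching is only safe when generation is deterministic for a fixed seed, which you should verify for the model in use.

```python
import hashlib
from collections import OrderedDict
from typing import Callable


class RenderCache:
    """LRU cache for generated assets, keyed on every input that determines the output."""

    def __init__(self, max_items: int = 1000):
        self.max_items = max_items
        self._store: OrderedDict = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(prompt: str, template_id: str, model_version: str, seed: int) -> str:
        normalized = " ".join(prompt.split())  # collapse whitespace only; case is preserved
        raw = f"{normalized}|{template_id}|{model_version}|{seed}"
        return hashlib.sha256(raw.encode("utf-8")).hexdigest()

    def get_or_render(self, prompt: str, template_id: str, model_version: str, seed: int,
                      render: Callable[[str], bytes]) -> bytes:
        k = self.key(prompt, template_id, model_version, seed)
        if k in self._store:
            self._store.move_to_end(k)        # mark as most recently used
            self.hits += 1
            return self._store[k]
        self.misses += 1
        asset = render(prompt)
        self._store[k] = asset
        if len(self._store) > self.max_items:
            self._store.popitem(last=False)   # evict the least recently used entry
        return asset
```

Including the model version in the key means a model upgrade naturally invalidates stale assets without a separate purge step.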

Risks, regulatory signals, and future outlook

Automated media creation will attract legal and policy scrutiny around deepfakes, copyright, and deceptive content. Emerging standards for provenance, labeling synthetic media, and differential liability are likely to shape adoption. On the technical side, advances in model efficiency, on-device inference, and better alignment tools will reduce costs and make automatic media creation more ubiquitous.

Teams should track research into adversarial robustness, since work on AI adversarial networks will continue to push both attack and defense capabilities, and plan governance accordingly.

Key Takeaways

- An AIOS automatic media creation platform is an orchestration-first product: models matter, but so do workflow, governance, and delivery.

- Architectural choices (event-driven vs synchronous) should reflect UX requirements and cost constraints.

- Observability must include perceptual quality signals in addition to infrastructure metrics like p95 latency and GPU utilization.

- Security, provenance, and labeling reduce legal and reputational risks in synthetic content.

- Conversational models such as Qwen expand use cases by enabling context-driven generation and iterative refinement.

- Plan for adversarial testing and policy controls because adversarial techniques remain a practical threat.

Building an AIOS automatic media creation system requires cross-functional coordination: engineers to design resilient pipelines, product teams to define value and ROI, and legal and trust teams to keep the system safe and compliant. When done right, the payoff is faster content cycles, better personalization, and measurable operational savings.