AI voice generation is moving from novelty demos to production systems used in customer service, accessibility, media, and robotics. This article walks through what it takes to build reliable, scalable voice-generation products: the basic concepts for non-technical readers, architecture and integration patterns for engineers, and ROI and vendor trade-offs for product teams. Along the way we highlight how multimodal components such as AI image recognition libraries and AI-driven API integrations fit into end-to-end automation systems.

Why AI voice generation matters today

Imagine a museum audio guide that speaks in a patron’s native language, or a mobile app that reads receipts aloud to visually impaired users in a natural, personalized voice. Such systems improve the user experience and create operational efficiencies: content that once required voice actors or manual recording can be produced programmatically and updated instantly. For many organizations, investing in voice automation shortens turnaround, enables personalization at scale, and improves accessibility compliance.

Beginner’s overview: how the pieces fit together

At its core, a voice generation system converts text (or other signals) into audio with natural prosody and correct pronunciation. Key components are:

- Input and content management — where scripts, captions, or dynamically generated text are prepared.

- Language and prosody control — pronunciation dictionaries, SSML tags, and voice models that shape intonation.

- Audio synthesis/renderer — the machine learning model or cloud service that outputs waveforms or encoded audio.

- Delivery — streaming or pre-rendered audio combined with caching and CDN distribution.

- Monitoring and governance — logging usage, tracking latency and errors, and enforcing security and consent.
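The language and prosody layer is often where teams first write their own code. The sketch below applies a small pronunciation lexicon by wrapping each matching term in an SSML `<sub alias="...">` element (defined in the W3C SSML specification). Everything else is escaped for XML. The lexicon entries and the `to_ssml` helper are hypothetical. Providers differ in which SSML elements they honor, so check your vendor's SSML reference before relying on `<sub>` or `<prosody>`.

```python
import re
from xml.sax.saxutils import escape, quoteattr

# Hypothetical pronunciation lexicon: written form -> what the voice should say.
LEXICON = {
    "SQL": "sequel",
    "Dr.": "Doctor",
    "IVR": "I V R",
}


def to_ssml(text: str, lexicon: dict, rate: str = "medium") -> str:
    """Return an SSML document with lexicon terms wrapped in <sub alias=...>."""
    body = escape(text)
    if lexicon:
        # Longest terms first; the lookarounds stop "SQL" matching inside "MySQL".
        terms = sorted(lexicon, key=len, reverse=True)
        pattern = re.compile(
            r"(?<!\w)(?:" + "|".join(re.escape(t) for t in terms) + r")(?!\w)"
        )
        parts, last = [], 0
        for match in pattern.finditer(text):
            term = match.group(0)
            parts.append(escape(text[last:match.start()]))
            parts.append(f"<sub alias={quoteattr(lexicon[term])}>{escape(term)}</sub>")
            last = match.end()
        parts.append(escape(text[last:]))
        body = "".join(parts)
    return f"<speak><prosody rate={quoteattr(rate)}>{body}</prosody></speak>"
```

For example, `to_ssml("Dr. Lee explains SQL & IVR", LEXICON)` wraps all three terms in `<sub>` elements and escapes the ampersand as `&amp;`. Keeping the lexicon as data, not code, lets linguists update pronunciations without a deploy.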

Think of it like a bakery: content is the dough, the prosody controls are the recipe, the synthesizer is the oven, and delivery is how the bread gets to the customer — now imagine the oven can make millions of loaves per hour if it’s properly scaled.

Developer deep-dive: architecture and integration patterns

Engineers building production voice systems must balance latency, cost, and quality. Below are pragmatic architecture patterns and trade-offs.

Core architectures

- Real-time streaming: Use for conversational agents and IVR. Clients open a streaming connection and receive audio chunks with sub-second latency. This requires inference engines that can stream partial responses and infrastructure that favors low latency (GPU instances, optimized audio encoders).

- Pre-rendered batch: Suitable for long-form content like audiobooks or scheduled messages. Jobs are queued, processed asynchronously, and the resulting files are stored in object storage and behind a CDN.

- Hybrid: Combine streaming for interactive parts and batch for static content. Useful when some content is personalized and time-sensitive, and other content is stable.
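On the real-time path, a common way to start playback quickly is to split text at sentence boundaries and synthesize one chunk at a time, rather than waiting for the whole response. This is a minimal sketch with two caveats:

- The regex splitter is naive and will break on abbreviations such as "e.g.".
- `synthesize` is a hypothetical callable standing in for your engine or vendor call, not a specific API.

```python
import re
from typing import Callable, Iterator


def sentence_chunks(text: str, max_chars: int = 200) -> Iterator[str]:
    """Split at sentence ends and pack sentences into chunks of up to max_chars.

    A single sentence longer than max_chars is emitted on its own, unsplit.
    """
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    buf = ""
    for sentence in sentences:
        if buf and len(buf) + 1 + len(sentence) > max_chars:
            yield buf
            buf = sentence
        else:
            buf = f"{buf} {sentence}" if buf else sentence
    if buf:
        yield buf


def stream_audio(text: str, synthesize: Callable[[str], bytes]) -> Iterator[bytes]:
    """Yield audio chunk by chunk so the client can play before rendering finishes."""
    for chunk in sentence_chunks(text):
        yield synthesize(chunk)
```

Keeping the first chunk short, for example a single sentence, usually matters most. Time to first audio is what the listener perceives, and later chunks can render while earlier audio plays.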

Integration patterns

Voice systems rarely stand alone. Common integration patterns include:

- Synchronous REST/gRPC: The client sends text and receives either complete audio or a streaming response. Best for low-concurrency, interactive experiences.

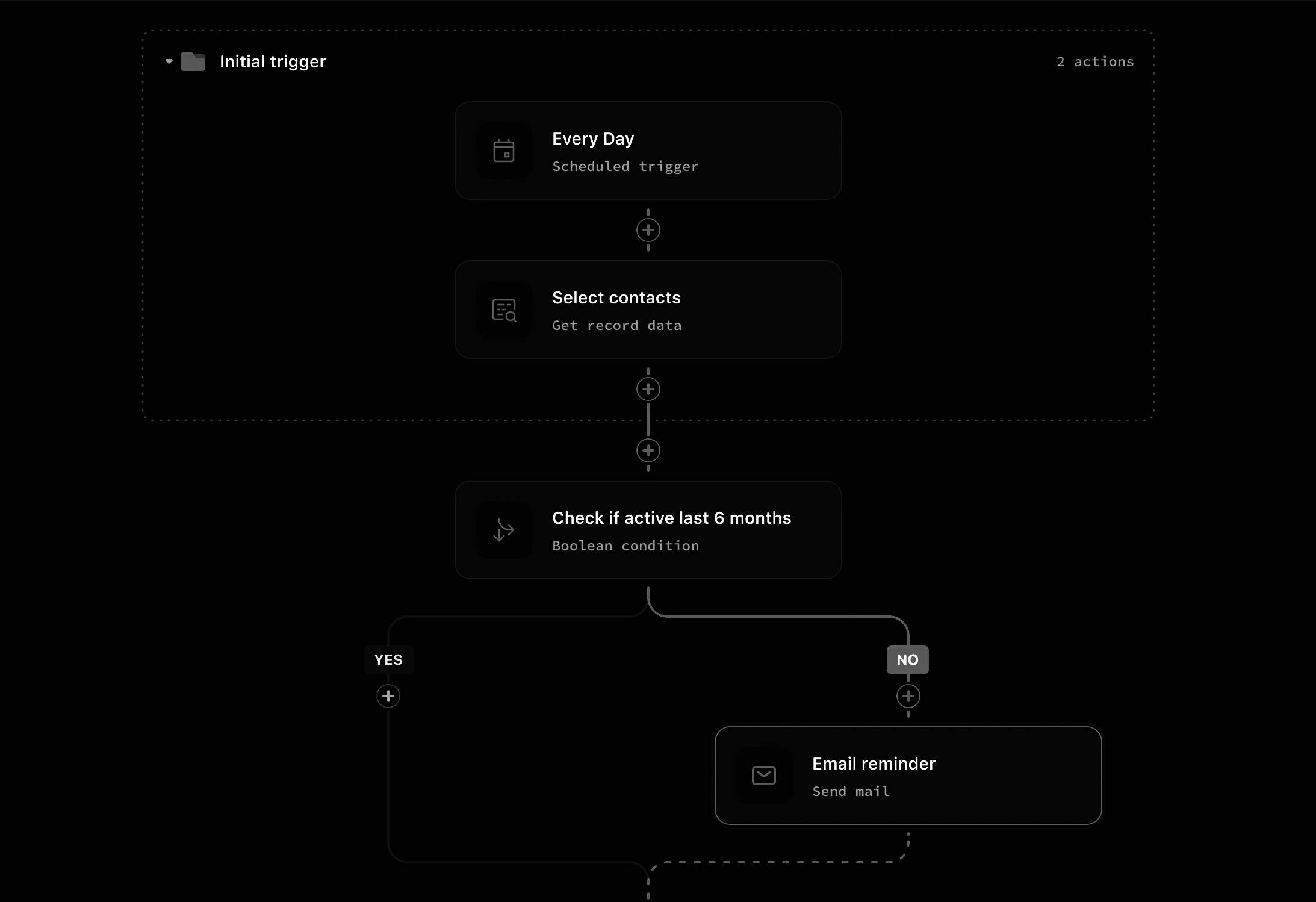

- Event-driven pipelines: Text producers emit events (Kafka, AWS EventBridge), and a worker pool consumes them and synthesizes audio. This pattern decouples producers from consumers and supports retries and backpressure.

- Job queues + webhooks: For batch rendering, jobs are scheduled (e.g., Celery, Amazon SQS) and completion is notified via webhooks to downstream systems, enabling easy orchestration.
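The event-driven and job-queue patterns share the same core: a bounded buffer between producers and a pool of synthesis workers, plus retries for transient failures. The sketch below makes some assumptions:

- Python's `asyncio.Queue` serves as an in-process stand-in for Kafka, EventBridge, or SQS. A bounded `maxsize` makes the producer wait when workers fall behind, which is a simple form of backpressure.
- `synthesize` is a hypothetical async callable.
- Job IDs are unique and hashable.

```python
import asyncio
import random


async def synthesize_with_retry(job, synthesize, max_attempts=3, base_delay=0.05):
    """Call synthesize(job), retrying with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return await synthesize(job)
        except Exception:
            if attempt == max_attempts:
                raise
            delay = base_delay * 2 ** (attempt - 1) * random.uniform(0.5, 1.5)
            await asyncio.sleep(delay)


async def worker(queue, results, synthesize):
    while True:
        job = await queue.get()
        try:
            results[job] = await synthesize_with_retry(job, synthesize)
        except Exception as exc:
            # A real pipeline would publish this to a dead-letter queue.
            results[job] = exc
        finally:
            queue.task_done()


async def run_pipeline(jobs, synthesize, num_workers=4, max_pending=8):
    # Bounded queue: queue.put() waits once max_pending jobs are pending.
    queue = asyncio.Queue(maxsize=max_pending)
    results = {}
    workers = [
        asyncio.create_task(worker(queue, results, synthesize))
        for _ in range(num_workers)
    ]
    for job in jobs:
        await queue.put(job)
    await queue.join()  # wait until every job has been processed
    for task in workers:
        task.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return results
```

With a durable broker, the same shape holds. The broker's consumer group replaces the in-memory queue, and a completion webhook replaces the returned `results` dictionary.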

Multimodal and orchestration

Many real-world products combine voice with vision or text extraction. For example, reading product labels aloud requires OCR on images, which is where AI image recognition libraries become part of the pipeline. A common flow is: image ingestion → OCR via a recognition library → text normalization → voice synthesis. Orchestration can be handled by workflow engines such as Apache Airflow for batch jobs or AWS Step Functions for event-driven flows.
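The text-normalization step between OCR and synthesis is easy to underestimate. Raw OCR output often contains ligatures, words hyphenated across lines, and unit abbreviations that a voice would read awkwardly. The sketch below cleans label text using only the standard library, under these assumptions:

- `ocr` and `synthesize` are hypothetical callables standing in for your recognition library and TTS backend.
- The unit table is illustrative, not exhaustive.
- All line breaks are collapsed, which suits short labels better than multi-paragraph documents.

```python
import re
import unicodedata
from typing import Callable

# Illustrative unit expansions; extend for your product domain.
UNITS = {"g": "grams", "kg": "kilograms", "ml": "milliliters", "oz": "ounces"}
UNIT_PATTERN = re.compile(r"(\d+(?:\.\d+)?)\s*(kg|g|ml|oz)\b")


def normalize_ocr_text(raw: str) -> str:
    # NFKC folds compatibility characters such as the "fi" ligature into plain letters.
    text = unicodedata.normalize("NFKC", raw)
    # Re-join words split across lines: "ingre-\ndients" -> "ingredients".
    text = re.sub(r"(\w)-\n(\w)", r"\1\2", text)
    # Treat remaining line breaks as spaces and collapse runs of whitespace.
    text = re.sub(r"\s+", " ", text)
    # Expand unit abbreviations so they are spoken, not spelled out.
    text = UNIT_PATTERN.sub(lambda m: f"{m.group(1)} {UNITS[m.group(2)]}", text)
    return text.strip()


def image_to_speech(image: bytes, ocr: Callable[[bytes], str],
                    synthesize: Callable[[str], bytes]) -> bytes:
    """Image ingestion -> OCR -> text normalization -> voice synthesis."""
    return synthesize(normalize_ocr_text(ocr(image)))
```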

Model serving and optimization

A key decision is whether to use managed cloud TTS (e.g., Google Cloud Text-to-Speech, Amazon Polly, Azure Speech, or third-party providers like ElevenLabs) or self-hosted open-source models (Coqui TTS, Mozilla TTS, or optimized models exported to ONNX or TensorRT). Trade-offs:

- Managed services: easier ops, predictable SLAs, but recurring costs and potential vendor lock-in.

- Self-hosted models: lower marginal cost at scale and more control, but higher engineering effort for deployment, scaling, and security.
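One way to keep the managed-versus-self-hosted decision reversible is to hide every engine behind a small interface of your own. Switching vendors or adding a self-hosted fallback then does not touch callers. The sketch below uses `typing.Protocol`. `call_vendor` and `run_model` are hypothetical callables where a real vendor SDK client or your own inference server would go, and none of the class names correspond to an actual library.

```python
from dataclasses import dataclass
from typing import Callable, List, Protocol, Tuple


@dataclass(frozen=True)
class SynthesisRequest:
    text: str
    voice: str
    language: str = "en-US"
    audio_format: str = "mp3"


class TTSBackend(Protocol):
    name: str

    def synthesize(self, req: SynthesisRequest) -> bytes: ...


class ManagedBackend:
    """Wraps a cloud vendor call (hypothetical callable, not a real SDK)."""
    name = "managed"

    def __init__(self, call_vendor: Callable[[SynthesisRequest], bytes]):
        self._call_vendor = call_vendor

    def synthesize(self, req: SynthesisRequest) -> bytes:
        return self._call_vendor(req)


class SelfHostedBackend:
    """Wraps a self-hosted model server (hypothetical callable)."""
    name = "self-hosted"

    def __init__(self, run_model: Callable[[SynthesisRequest], bytes]):
        self._run_model = run_model

    def synthesize(self, req: SynthesisRequest) -> bytes:
        return self._run_model(req)


class FailoverTTS:
    """Try backends in order; return (backend name, audio) from the first success."""

    def __init__(self, backends: List[TTSBackend]):
        self.backends = backends

    def synthesize(self, req: SynthesisRequest) -> Tuple[str, bytes]:
        errors = []
        for backend in self.backends:
            try:
                return backend.name, backend.synthesize(req)
            except Exception as exc:
                errors.append(f"{backend.name}: {exc}")
        raise RuntimeError("all TTS backends failed: " + "; ".join(errors))
```

Putting self-hosted first with a managed fallback, or the reverse, becomes a one-line configuration change. That is a practical hedge against the lock-in risk noted above.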

Deployment, scaling and cost

Consider these practical signals when designing capacity and cost models:

- Latency targets: For interactive systems aim for P95 latency well under 500ms for short responses. This often requires GPU inference or model quantization and inference acceleration.

- Throughput and concurrency: Measure concurrent streams and bytes per second. Streaming audio ties up connections for longer, so calculate how many concurrent sessions your fleet must support.

- Cost per minute: Managed cloud TTS is usually billed per character (often quoted per million characters), and some vendors bill per audio minute instead. Self-hosted costs include instance hours, GPU time, and storage for pre-rendered files.

- Caching strategy: Cache rendered audio for repeated content to reduce cost and load. Add a cache key based on language, voice, SSML, and text hash.
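Two of these signals reduce to small calculations. The sketch below covers both, with the assumptions noted:

- **Cache key.** It hashes a canonical JSON encoding of the fields listed above, so no field can bleed into another. Including `model_version` and `audio_format` goes beyond that list. It prevents serving audio rendered by an older model or in the wrong codec.
- **Concurrency.** It estimates concurrent streams with Little's law: concurrent sessions ≈ arrival rate × average session duration. The `headroom` factor is an illustrative parameter, not a recommended value.

```python
import hashlib
import json
import math


def cache_key(text: str, voice: str, language: str, ssml: str = "",
              model_version: str = "unknown", audio_format: str = "mp3") -> str:
    """Stable key for rendered audio: SHA-256 of a canonical JSON payload."""
    payload = json.dumps(
        {
            "text_sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
            "voice": voice,
            "language": language,
            "ssml": ssml,
            "model_version": model_version,
            "audio_format": audio_format,
        },
        sort_keys=True,
        ensure_ascii=False,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def required_streams(requests_per_second: float, avg_audio_seconds: float,
                     headroom: float = 1.3) -> int:
    """Little's law (L = arrival rate * time in system), padded for bursts."""
    return math.ceil(requests_per_second * avg_audio_seconds * headroom)
```

For example, 20 new streams per second that each last 6 seconds on average keep about 120 streams open at once, before headroom. Streaming sessions hold a connection for the whole playback, so this number, not the raw request rate, is what should drive fleet sizing.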

Observability, security and governance

Operational maturity requires clear signals and controls.

- Metrics to track: request rate, P50/P95/P99 latency, error rate, audio generation time, queue backlog, and cost per request. Instrument with Prometheus, visualize with Grafana.

- Tracing and logs: Distributed tracing (Jaeger/OpenTelemetry) lets you correlate text ingestion, synthesis, CDN delivery and client playback. Centralized logs help diagnose mispronunciations tied to input preprocessing.

- Security: Encrypt audio at rest and in transit, rotate API keys, and restrict model access. If you support custom voices, treat voice data as sensitive biometrics and apply stricter retention and consent policies.

- Governance: Maintain provenance metadata with each audio file (model version, voice, SSML used) and consider digital watermarking or labeling to indicate synthetic origin. The regulatory landscape around deepfakes and voice cloning is evolving, so design for explicit consent and auditable logs.
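Provenance metadata can be as simple as a JSON sidecar written next to each audio file. The sketch below records the fields named above, plus a content hash so the record can later be matched to the exact audio bytes. The field names and the optional `consent_id` are hypothetical choices, not a standard schema. A sidecar labels audio but, unlike a watermark embedded in the signal, it does not survive if the file is re-encoded or separated from its metadata.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def provenance_record(audio: bytes, *, model_version: str, voice: str,
                      ssml: str, consent_id: Optional[str] = None) -> dict:
    """Build a provenance record for one rendered audio file."""
    return {
        "synthetic": True,  # explicit label of synthetic origin
        "audio_sha256": hashlib.sha256(audio).hexdigest(),
        "model_version": model_version,
        "voice": voice,
        "ssml": ssml,
        "consent_id": consent_id,  # links custom voices to a consent record
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


def matches_audio(record: dict, audio: bytes) -> bool:
    """Check that a record describes exactly these audio bytes."""
    return record.get("audio_sha256") == hashlib.sha256(audio).hexdigest()


def to_sidecar_json(record: dict) -> str:
    return json.dumps(record, sort_keys=True, indent=2)
```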

Common failure modes and remediation

Operational teams frequently encounter:

- Mispronunciation — solved by phonetic hints, SSML, and improved lexicons.

- Clipped audio or encoding artifacts — monitor for bitrate mismatches and ensure consistent encoders between synthesis and player.

- Backlog oscillations — implement rate limits, autoscaling policies, and circuit breakers to avoid cascading failures.

- Copyright and license violations — validate input rights when creating voices that mimic people or produce commercial content.
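Of these remediations, circuit breakers are the one teams most often write by hand. The sketch below is a minimal single-threaded version and is not thread-safe:

- After `failure_threshold` consecutive failures, it rejects calls immediately.
- After `reset_timeout` seconds, it lets a trial call through ("half-open").
- It closes again on success.

The thresholds are placeholders, and the injectable `clock` exists only to make the behavior testable.

```python
import time
from typing import Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised when the breaker rejects a call without trying the backend."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 5, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_timeout:
            return "half-open"
        return "open"

    def call(self, fn, *args, **kwargs):
        state = self.state
        if state == "open":
            raise CircuitOpenError("synthesis backend circuit is open")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self._failures += 1
            # A failed trial call, or too many failures, (re)opens the circuit.
            if state == "half-open" or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Any success closes the circuit and resets the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```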

Product & market considerations: ROI and vendors

Deciding between managed providers and self-hosted solutions is a business decision as much as technical. Evaluate:

- Time to market vs total cost of ownership. Managed TTS accelerates launches; self-hosting saves on per-minute costs at scale.

- Quality of output and voice options. Some vendors excel at expressive voices and multi-speaker mixing, while open-source models may require tuning for parity.

- Compliance needs. Healthcare and finance may require on-premises deployments for data residency.

- Support for integrations. Check how well a vendor supports webhooks, streaming, and SDKs; native compatibility with your existing AI-driven API integrations is a practical selection factor.
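The trade-off between time to market and total cost can be made concrete with a break-even calculation. The sketch below compares a per-character managed price with a self-hosted setup that has a fixed monthly cost (instances, on-call, MLOps staff) plus a small variable cost. All prices are inputs you supply. The numbers in the example are made up for illustration and are not vendor quotes.

```python
import math


def managed_monthly_cost(chars: float, price_per_million: float) -> float:
    return chars / 1_000_000 * price_per_million


def self_hosted_monthly_cost(chars: float, fixed_monthly: float,
                             variable_per_million: float) -> float:
    return fixed_monthly + chars / 1_000_000 * variable_per_million


def break_even_chars(price_per_million: float, fixed_monthly: float,
                     variable_per_million: float) -> float:
    """Monthly character volume above which self-hosting becomes cheaper."""
    margin = price_per_million - variable_per_million
    if margin <= 0:
        return math.inf  # self-hosting never catches up on marginal cost
    return fixed_monthly / margin * 1_000_000
```

Take an illustrative managed price of 10 per million characters, a fixed self-hosted cost of 5,000 per month, and negligible variable cost. `break_even_chars(10.0, 5000.0, 0.0)` returns 500,000,000, so below roughly 500 million characters a month the managed service is cheaper under those assumptions.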

Real-world ROI examples include:

- Contact center automation that cuts average handle time by routing simple queries to a synthetic voice assistant and reduces agent hours.

- Content producers who automate narration, cutting production costs and enabling rapid A/B testing of different voice styles.

- Enterprises improving accessibility across thousands of documents by automatically generating audio versions.

Case studies and operational lessons

Case study: a streaming media platform needed multilingual dubbing at low cost. They adopted a hybrid pattern: pre-render high-demand episodes and stream less-popular content on demand. They used a managed TTS vendor for speed and an internal cache layer to reduce cost. Outcome: 70% faster release cycle and 60% lower per-episode cost compared to manual dubbing.

Case study: an enterprise with strict data residency requirements deployed open-source models on GPU clusters and used an event-driven pipeline with Kafka and Step Functions. They invested in model inference optimization and ended up with predictable costs and complete data control, but had to staff a dedicated MLOps team.
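The first case study's hybrid rule (pre-render what is in demand, render the long tail on request) can be expressed as a simple planner over forecast demand. The sketch below is illustrative only. The forecast numbers, the `min_plays` threshold, and the optional cap on pre-render jobs are assumptions you would tune against your own cost and latency data, and the case study does not describe its actual selection logic.

```python
from typing import Dict, List, Optional, Tuple


def plan_rendering(forecast_plays: Dict[str, float], min_plays: float,
                   max_prerender: Optional[int] = None) -> Tuple[List[str], List[str]]:
    """Split titles into (pre-render now, render on demand) by forecast plays."""
    # Highest demand first; ties broken by title so the plan is deterministic.
    ranked = sorted(forecast_plays, key=lambda title: (-forecast_plays[title], title))
    prerender = [t for t in ranked if forecast_plays[t] >= min_plays]
    if max_prerender is not None:
        prerender = prerender[:max_prerender]
    chosen = set(prerender)
    on_demand = [t for t in ranked if t not in chosen]
    return prerender, on_demand
```

On-demand titles would still pass through the audio cache, so each one is synthesized once and then served like pre-rendered content.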

Tooling & vendor comparisons

Popular managed vendors include Google, Amazon, Microsoft, and specialist providers such as ElevenLabs and Replica. Open-source and self-hosted options include Coqui TTS, Mozilla TTS, and trained models exported to ONNX or TensorRT. When comparing:

- Benchmarks: run your own voice quality and latency tests for the phrases you actually use.

- APIs and SDKs: prefer vendors that offer streaming, batch, and webhook patterns that match your architecture.

- Operational requirements: check SLA terms, data retention policies, and support for custom voices.
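A benchmark on your own phrases does not need much tooling. The sketch below times any synthesis callable over a phrase list and reports nearest-rank P50/P95/P99 latencies. `synthesize` is a hypothetical stand-in for a vendor client or local model. Two gaps remain:

- For streaming APIs you would also want time to first audio chunk, which this whole-call timing does not capture.
- Voice quality still needs listening tests, for example mean opinion score (MOS) ratings, alongside the numbers.

```python
import math
import statistics
import time
from typing import Callable, Dict, Iterable, List


def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def benchmark(synthesize: Callable[[str], bytes], phrases: Iterable[str],
              repeats: int = 3) -> Dict[str, float]:
    """Time synthesize() over every phrase, repeats times, in milliseconds."""
    phrases = list(phrases)
    latencies_ms: List[float] = []
    for _ in range(repeats):
        for phrase in phrases:
            start = time.perf_counter()
            synthesize(phrase)
            latencies_ms.append((time.perf_counter() - start) * 1000)
    return {
        "n": len(latencies_ms),
        "mean_ms": statistics.fmean(latencies_ms),
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "p99_ms": percentile(latencies_ms, 99),
    }
```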

Adoption playbook: practical steps

For teams starting with voice automation, follow this playbook:

- Start with a single, high-value use case (IVR, accessibility, content automation).

- Prototype with a managed service to validate UX and measure costs.

- Define metrics: latency SLOs, cost per minute, error budget, and user satisfaction.

- Design the pipeline: choose between streaming or batch, add caching, and plan observability hooks.

- Evaluate self-hosting once you hit predictable volume that justifies the operational investment.
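Latency SLOs and error budgets are easier to agree on once the arithmetic is explicit. The sketch below turns a success-rate SLO into an allowed number of failed requests and reports how much of that budget has been spent. The 99.9% target in the example is illustrative, not a recommendation.

```python
def error_budget(slo_target: float, total_requests: int, failed_requests: int) -> dict:
    """Request-based error budget for an SLO such as 0.999 (99.9% success)."""
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be between 0 and 1 (exclusive)")
    allowed = (1 - slo_target) * total_requests
    if allowed:
        burned = failed_requests / allowed
    else:
        burned = 0.0 if failed_requests == 0 else float("inf")
    return {
        "allowed_failures": allowed,
        "remaining_failures": allowed - failed_requests,
        "burned_fraction": burned,
        "within_budget": failed_requests <= allowed,
    }
```

At 99.9% over one million synthesis requests, the budget is about 1,000 failures, so 250 failures means roughly a quarter of it is spent. Expressed as time, the same target allows about 43.2 minutes of full outage in a 30-day month (0.001 × 30 × 24 × 60).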

Future outlook

Voice models will continue to get more expressive and controllable, and ecosystems for synthetic media provenance are likely to tighten as regulation catches up. We’ll see tighter convergence with vision and language components — for example, using AI image recognition libraries to auto-generate narration from visual content, and richer orchestration frameworks that treat voice as a first-class automation primitive.

Risks to monitor

Regulation on synthetic media, risks of voice spoofing, and the ethical implications of cloning voices will shape adoption. Operationally, the main risks are unvalidated content, rising costs with scale, and the maintenance burden of custom models.

Final Thoughts

AI voice generation offers concrete business benefits but requires engineering discipline and governance. Start small, measure relentlessly, and choose the deployment pattern that matches your latency and compliance needs. Use managed services to learn quickly, and move to self-hosted models when volume, cost, and control justify the investment. Combine voice with other AI components — including AI image recognition libraries and thoughtful AI-driven API integrations — to deliver richer, more automated experiences. The technical foundations you put in place now will determine whether your voice systems scale reliably and responsibly as usage grows.