AI healthcare automation is no longer an experiment. Hospitals, clinics, and payer networks are integrating machine intelligence into scheduling, triage, imaging workflows, revenue cycle management, and clinician decision support. This article unpacks practical systems and platforms for building reliable, compliant, and cost-effective automation—aiming to help clinicians, engineers, and product leaders make pragmatic choices.

Why AI healthcare automation matters

Imagine a busy emergency department where a nurse manually triages dozens of walk-ins each hour. Now imagine a system that pulls symptom data from intake forms, cross-checks vitals and history, flags high-risk patients, and creates a prioritized worklist for clinicians. That combination of rules, models, and orchestrated tasks is AI healthcare automation: it ties models into workflows so decisions and actions happen where and when they are needed.

“Automation in healthcare isn’t about replacing clinicians; it’s about amplifying capacity so teams can focus on the highest-value cases.”

Beginner’s guide: What a practical automation system looks like

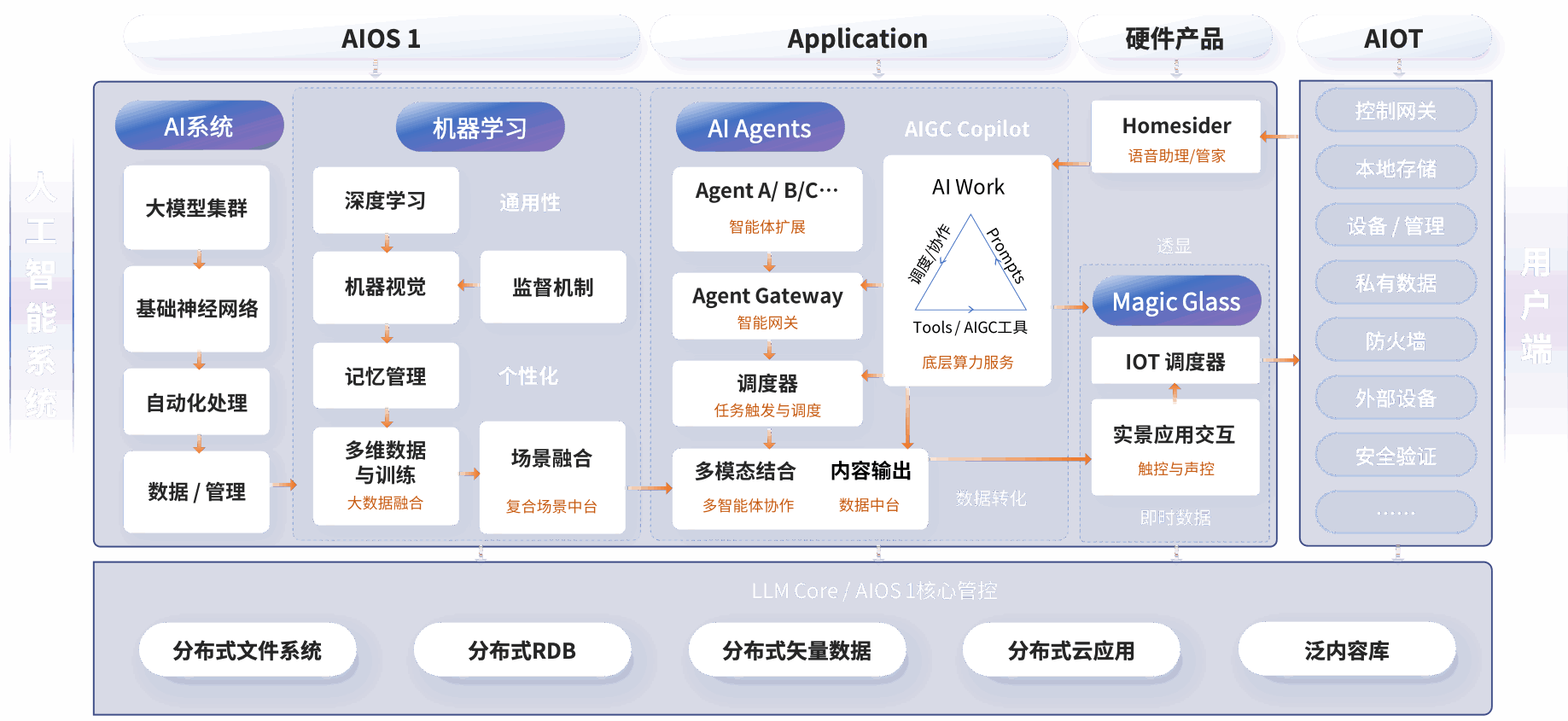

Think of an automation system as three layers:

- Data and integration layer: connects EHRs, lab systems, devices, and message buses using standards like FHIR and HL7.

- Intelligence layer: hosts models for tasks such as risk scoring, image interpretation, or natural language processing.

- Orchestration layer: runs workflows, schedules tasks, triggers downstream systems, and ensures human-in-the-loop handoffs.

Real-world example: a radiology pipeline where images enter the system, a model performs a preliminary read, suspicious studies generate alerts to physicians, and billing codes are auto-prepared. That pipeline requires reliable file transport, model inference at target latency, audit logs, and escalation rules for failures.
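
The control flow of that radiology pipeline can be sketched in a few lines. This is a minimal illustration, not a production design. It assumes a hypothetical `preliminary_read` callable that returns a suspicion score between 0 and 1, and a hypothetical alert threshold of 0.8. It shows three things: audit entries for every step, an alert for suspicious studies, and an escalation rule that sends any study whose inference fails to a human reader.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

SUSPICION_THRESHOLD = 0.8  # hypothetical; would be set from local validation data in practice


@dataclass
class StudyResult:
    study_id: str
    route: str = "routine"  # "routine", "alert", or "manual_read"
    audit: list = field(default_factory=list)

    def log(self, event: str) -> None:
        # Timestamped audit entry; production systems would write to durable, tamper-evident storage.
        self.audit.append((datetime.now(timezone.utc).isoformat(), self.study_id, event))


def process_study(study_id: str, image: bytes, preliminary_read: Callable[[bytes], float]) -> StudyResult:
    result = StudyResult(study_id)
    result.log("received")
    try:
        score = preliminary_read(image)
    except Exception as exc:
        # Escalation rule: any inference failure sends the study to a human reader.
        result.route = "manual_read"
        result.log(f"inference_failed:{type(exc).__name__}")
        return result
    result.log(f"scored:{score:.2f}")
    if score >= SUSPICION_THRESHOLD:
        result.route = "alert"
        result.log("physician_alerted")
    result.log("billing_codes_drafted")  # prepared for coder review, not submitted
    return result
```

Latency targets are not enforced here. In a real deployment, a timeout on the model call would raise an exception and follow the same escalation path.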

Architectural patterns for engineers

Event-driven vs synchronous orchestration

Synchronous calls are simple: a clinician clicks a button and a model returns a score within a strict latency budget. Event-driven designs distribute work across asynchronous steps. This is useful when workflows involve multiple systems, human approvals, or long-running tasks like insurer checks.

- Synchronous pros: predictable latency, simpler error handling for single requests.

- Synchronous cons: poor for multi-step processes and fragile across network failures.

- Event-driven pros: resilient, decoupled, easier to retry and audit.

- Event-driven cons: higher operational overhead, eventual consistency challenges.
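
The retry and audit advantages of event-driven designs can be sketched with an in-memory queue. This is a simplification: a `collections.deque` stands in for a real message bus, and the retry budget of three attempts is a hypothetical choice. A failed event is re-queued instead of being raised to the caller, so other events keep flowing. Events that exhaust their retries are parked in a dead-letter list for human follow-up.

```python
from collections import deque

MAX_ATTEMPTS = 3  # hypothetical retry budget per event


def run_event_loop(events, handler):
    """Process events; failures are retried later instead of blocking the rest of the queue."""
    queue = deque((event, 1) for event in events)
    completed, dead_letter = [], []
    while queue:
        event, attempt = queue.popleft()
        try:
            handler(event)
            completed.append(event)
        except Exception:
            if attempt < MAX_ATTEMPTS:
                queue.append((event, attempt + 1))  # retry after the events already queued
            else:
                dead_letter.append(event)  # park for human follow-up and audit
    return completed, dead_letter
```

A synchronous design would instead surface the first failure directly to the caller. That behavior is simpler, but it halts the multi-step process at that point.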

Model serving and inference platforms

Choices range from managed platforms (AWS SageMaker, Google Vertex AI, Azure ML) to open-source serving frameworks (BentoML, KServe, TorchServe). Key trade-offs:

- Managed platforms reduce ops effort and provide integrated monitoring but can be costly for large inference volumes and may present data residency challenges.

- Self-hosted solutions give control over GPU scheduling, networking, and compliance but require skilled SRE resources and robust CI/CD pipelines.

Operational signals to monitor: end-to-end latency (p50, p95, p99), throughput (requests per second), model confidence distributions, input data drift, and request failure rates. For healthcare, log retention and tamper-evident audit trails are essential.
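
Latency percentiles and failure rates can be computed from raw request logs. The sketch below uses the nearest-rank percentile definition. Monitoring systems may interpolate differently, so small discrepancies against a dashboard are expected.

```python
import math


def nearest_rank_percentile(values, pct):
    """Smallest sample such that at least pct percent of samples are at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_ms, failures, total_requests):
    # End-to-end latency percentiles plus the request failure rate for one reporting window.
    return {
        "p50_ms": nearest_rank_percentile(latencies_ms, 50),
        "p95_ms": nearest_rank_percentile(latencies_ms, 95),
        "p99_ms": nearest_rank_percentile(latencies_ms, 99),
        "failure_rate": failures / total_requests if total_requests else 0.0,
    }
```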

Orchestration and agent frameworks

For complex multi-step flows, orchestration layers run long-running, stateful workflows and human tasks. Options include cloud-native workflow engines (Argo Workflows, Temporal) and RPA platforms (UiPath, Automation Anywhere, Microsoft Power Automate) for GUI-level automation. Newer agent frameworks can act as coordinators, chaining multiple models and APIs to fulfill a single user intent. Design trade-offs include traceability versus agility, and a single complex agent versus several composed micro-agents.
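
The human-task pattern can be illustrated without any particular engine's API. The sketch below is an in-memory, engine-agnostic state machine with hypothetical step names. Automated steps run until the workflow reaches a human task that has not been approved yet, and then it pauses. Engines such as Temporal or Argo persist this state durably, whereas this sketch keeps it in memory.

```python
from dataclasses import dataclass, field


@dataclass
class Workflow:
    """Minimal workflow state: ordered steps, a cursor, and a trace for auditability."""
    steps: list  # list of (name, kind) where kind is "auto" or "human"
    position: int = 0
    status: str = "running"
    trace: list = field(default_factory=list)

    def advance(self, approvals=frozenset()):
        # Run steps until the workflow completes or reaches an unapproved human task.
        while self.position < len(self.steps):
            name, kind = self.steps[self.position]
            if kind == "human" and name not in approvals:
                self.status = f"waiting_for:{name}"
                return self.status
            self.trace.append(name)
            self.position += 1
        self.status = "completed"
        return self.status
```

Usage: `Workflow(steps=[("fetch_labs", "auto"), ("clinician_review", "human"), ("notify_team", "auto")]).advance()` stops at `waiting_for:clinician_review`. A later call with `approvals={"clinician_review"}` resumes the workflow from the same position.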

Integration and API design

APIs should follow these principles:

- Explicit contracts and versioning: preserve backward compatibility for clinical integrations.

- Idempotency and replay safety: make retry semantics clear for event-driven pipelines.

- Fine-grained observability hooks: expose request IDs, timestamps, and provenance metadata to link actions across systems.
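
Idempotency and request IDs can be combined in one small pattern. The sketch below assumes clients send a hypothetical idempotency key with each request. A retry that reuses the key gets the stored response back instead of triggering the action twice. Reusing a key with a different payload is rejected, and every response carries the caller's request ID for tracing.

```python
import hashlib
import json


class IdempotentEndpoint:
    """Replays the stored response when a client retries with the same idempotency key."""

    def __init__(self, handler):
        self.handler = handler
        self._responses = {}  # idempotency key -> (payload fingerprint, response)

    def call(self, idempotency_key, payload, request_id):
        fingerprint = hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()
        if idempotency_key in self._responses:
            stored_fingerprint, response = self._responses[idempotency_key]
            if stored_fingerprint != fingerprint:
                raise ValueError("idempotency key reused with a different payload")
            # Same result, but tagged with this attempt's request ID for tracing.
            return {**response, "replayed": True, "request_id": request_id}
        response = {"result": self.handler(payload), "replayed": False, "request_id": request_id}
        self._responses[idempotency_key] = (fingerprint, response)
        return response
```

A production version would keep the stored responses in a shared store with an expiry, not in process memory.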

Healthcare specifics: adopt FHIR resources for clinical data, use OAuth 2.0 and OpenID Connect for authorization and identity, and ensure encryption at rest and in transit. Consider API gateways for rate limiting and a centralized web application firewall (WAF), and design for network isolation to satisfy compliance rules.
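
Consuming a FHIR resource safely means checking its coding and units, not just reading a value. The sketch below extracts a heart rate from a FHIR R4 Observation that has already been fetched as JSON. It assumes the Observation is coded with LOINC 8867-4 (heart rate) and uses the UCUM unit `/min`. Anything unexpected returns `None` so that a mapping layer or a human can handle it. Fetching the resource over TLS with an OAuth 2.0 token is out of scope here.

```python
HEART_RATE_LOINC = "8867-4"

EXAMPLE_OBSERVATION = {
    "resourceType": "Observation",
    "status": "final",
    "code": {"coding": [{"system": "http://loinc.org", "code": "8867-4", "display": "Heart rate"}]},
    "valueQuantity": {"value": 112, "unit": "beats/minute",
                      "system": "http://unitsofmeasure.org", "code": "/min"},
}


def extract_heart_rate(observation: dict):
    """Return heart rate in beats/min from a FHIR R4 Observation dict, or None if not usable."""
    if observation.get("resourceType") != "Observation":
        raise ValueError("expected a FHIR Observation resource")
    codings = observation.get("code", {}).get("coding", [])
    is_heart_rate = any(
        c.get("system") == "http://loinc.org" and c.get("code") == HEART_RATE_LOINC for c in codings
    )
    quantity = observation.get("valueQuantity", {})
    if not is_heart_rate or quantity.get("code") != "/min":
        return None  # unexpected coding or unit: route to a mapping layer or human review
    return quantity.get("value")
```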

Security, governance, and regulatory considerations

AI healthcare automation must satisfy HIPAA in the U.S., and increasingly, frameworks like the EU AI Act will affect product risk classification. Key governance elements:

- Data minimization and purpose binding: avoid storing unnecessary PHI in model logs.

- Explainability and human oversight: workflows should include clear escalation paths and rationale that clinicians can review.

- FDA considerations: if a system is a clinical decision support tool, determine whether it qualifies as Software as a Medical Device (SaMD) and follow submission pathways when necessary.

- Third-party model risk management: validate vendor models on representative local data prior to deployment.
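
Two of these controls, keeping PHI out of model logs and making audit trails tamper-evident, can be combined in a simple hash-chained log. This is a minimal sketch built on a hypothetical allow-list of non-PHI fields. Each entry's SHA-256 hash covers the previous hash, so editing or deleting an interior entry breaks verification. Detecting truncation of the newest entries additionally requires anchoring the latest hash somewhere outside the log.

```python
import hashlib
import json

ALLOWED_FIELDS = {"request_id", "model_version", "score", "action"}  # hypothetical non-PHI allow-list
GENESIS = "0" * 64


def _digest(prev_hash: str, event: dict) -> str:
    return hashlib.sha256((prev_hash + json.dumps(event, sort_keys=True)).encode()).hexdigest()


def append_entry(log: list, event: dict) -> list:
    """Drop non-allow-listed fields, then append an entry chained to the previous hash."""
    minimized = {k: v for k, v in event.items() if k in ALLOWED_FIELDS}
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": minimized, "prev": prev_hash, "hash": _digest(prev_hash, minimized)})
    return log


def verify_chain(log: list) -> bool:
    """Recompute every hash; an edited or deleted interior entry makes this return False."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```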

Deployment, scaling and cost models

Infrastructure choices directly affect latency, throughput, and cost. Consider these patterns:

- Batch inference for non-urgent tasks to maximize GPU utilization and reduce per-request cost.

- Autoscaling pools for real-time inference, keeping GPU-backed instances for burst windows and CPU pools for baseline traffic.

- Edge inference for devices with strict latency or connectivity requirements; use model compression and quantization to fit on-device.
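
Splitting traffic between real-time and batch paths can be as simple as routing on an urgency flag. In the sketch below, the `urgent` field is a hypothetical request attribute. Urgent requests go to real-time serving, and the rest are grouped into fixed-size batches so that each model call processes many inputs at once.

```python
def route_requests(requests, max_batch_size):
    """Send urgent requests to real-time serving; group the rest into batches."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be positive")
    realtime = [r for r in requests if r["urgent"]]
    deferred = [r for r in requests if not r["urgent"]]
    # Each batch would be scored in one model call during an off-peak window.
    batches = [deferred[i:i + max_batch_size] for i in range(0, len(deferred), max_batch_size)]
    return realtime, batches
```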

Cost signals to track: compute time per inference, data egress, storage for models and logs, and operational labor. A common pitfall is underestimating the cost of high-cardinality logging required for auditability.
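
Back-of-the-envelope arithmetic makes these cost signals concrete. The helpers below assume an hourly-billed instance and fixed-size log entries. The prices and volumes in the usage note are hypothetical.

```python
def cost_per_inference(hourly_price: float, requests_per_second: float, utilization: float) -> float:
    """Dollar cost per request for an hourly-billed instance.

    utilization: fraction of each billed hour spent serving at requests_per_second.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    served_per_hour = requests_per_second * 3600 * utilization
    return hourly_price / served_per_hour


def monthly_log_gb(requests_per_day: int, bytes_per_entry: int, days: int = 30) -> float:
    """Audit-log volume added per month in decimal GB, before replication or indexing overhead."""
    return requests_per_day * bytes_per_entry * days / 1e9
```

Consider a hypothetical $3.60/hour instance serving 50 requests per second. At 20% utilization, which is typical of a mostly idle real-time pool, it costs $0.0001 per request. At 90% utilization, as in a well-packed batch job, it costs about $0.000022. Logging 2 KB per request at one million requests a day adds 60 GB per month, and that figure grows with every field added for auditability.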

Observability and failure modes

Operational monitoring must combine model-centric and system-centric views:

- System metrics: CPU/GPU utilization, latency percentiles, error rates, queue depths.

- Model metrics: drift detection, performance by cohort, calibration over time, and degradation alarms.

- Business metrics: time saved per clinician, changes in admission rates, or reductions in claim denials.
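
Drift detection is one of the model metrics that can be computed directly. The population stability index (PSI) is one common way to quantify input drift, and it is swapped in here as an example rather than as a recommended method. It compares a feature's distribution in a recent window against a baseline over shared bins. Alert thresholds are a team decision; a commonly cited rule of thumb treats values above roughly 0.25 as a major shift.

```python
import math


def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI between a baseline sample and a recent sample over shared, sorted bin edges.

    Values outside the edge range are clamped into the first or last bin.
    """
    def proportions(values):
        counts = [0] * (len(edges) - 1)
        for v in values:
            idx = 0
            while idx < len(counts) - 1 and v >= edges[idx + 1]:
                idx += 1
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]  # eps avoids log(0) for empty bins

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```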

Typical failure modes include data schema changes in EHR exports, model confidence collapse after a demographic shift, or orchestration deadlocks in multi-system flows. Build runbooks and automated rollback pathways to reduce mean time to recovery.
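
Schema changes in EHR exports can be caught before they reach a model. The sketch below checks each incoming row against an expected schema; the field names and types are hypothetical. A non-empty result would halt scoring for that feed and trigger the runbook, so that renamed or retyped columns are never scored silently.

```python
EXPECTED_SCHEMA = {  # hypothetical export fields and accepted Python types
    "mrn_hash": str,
    "heart_rate": (int, float),
    "systolic_bp": (int, float),
    "arrival_ts": str,
}


def schema_problems(record: dict) -> list:
    """List mismatches between an incoming EHR export row and the expected schema."""
    problems = [f"missing:{name}" for name in EXPECTED_SCHEMA if name not in record]
    problems += [f"unexpected:{name}" for name in record if name not in EXPECTED_SCHEMA]
    for name, expected_type in EXPECTED_SCHEMA.items():
        if name in record and not isinstance(record[name], expected_type):
            problems.append(f"type:{name}")
    return problems
```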

Case study: automating ED triage

A mid-sized hospital implemented an automation pipeline to prioritize ED patients. The system combined an intake form parser, a vitals-based risk model, and a rules engine to generate a queue. Outcomes after six months:

- Average triage-to-assessment time fell from 24 to 14 minutes.

- High-risk patients were identified earlier, increasing clinician interventions by 12% for those cohorts.

- Operational ROI: one FTE nurse equivalent saved per shift, with a 9-month payback when accounting for implementation and ongoing cloud costs.

Lessons learned: clinician trust requires transparent scoring and a human override. An early pilot focused on non-critical areas reduced legal and safety risk while demonstrating value.
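
Transparent scoring and a human override can be combined in a small triage queue. The sketch below uses illustrative thresholds and weights that are hypothetical and are not clinical guidance. Each score carries the list of rules that fired, so clinicians can see why a patient ranks high. A clinician-entered override replaces the computed score, and ties are broken by arrival order.

```python
import heapq


def score_patient(vitals: dict):
    """Hypothetical rules: return (score, reasons) so the ranking is explainable."""
    rules = [
        ("heart_rate > 120", vitals.get("heart_rate", 0) > 120, 2),
        ("systolic_bp < 90", vitals.get("systolic_bp", 999) < 90, 3),
        ("spo2 < 92", vitals.get("spo2", 100) < 92, 3),
    ]
    reasons = [label for label, fired, _ in rules if fired]
    return sum(weight for _, fired, weight in rules if fired), reasons


def build_queue(patients, overrides=None):
    """Order patients by score, highest first; a clinician override replaces the computed score."""
    overrides = overrides or {}
    heap = []
    for arrival_order, (pid, vitals) in enumerate(patients):
        score, reasons = score_patient(vitals)
        if pid in overrides:
            score, reasons = overrides[pid], ["clinician override"]
        heapq.heappush(heap, (-score, arrival_order, pid, reasons))  # ties go to earlier arrival
    ordered = []
    while heap:
        neg_score, _, pid, reasons = heapq.heappop(heap)
        ordered.append((pid, -neg_score, reasons))
    return ordered
```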

Vendor comparison and tooling landscape

High-level choices:

- Cloud-managed suites (AWS, GCP, Azure): fast time-to-market, integrated MLOps, and robust identity controls; higher vendor lock-in and cost.

- Enterprise RPA vendors (UiPath, Automation Anywhere, Microsoft): strong for GUI-based automation and administrative workflows like claims processing.

- Open-source MLOps (Kubeflow, MLflow, KServe, BentoML): best for custom model control; requires skilled teams and more ops investment.

- Specialized healthcare vendors and components (FHIR servers, MedPerf for benchmarking): help with domain-specific integration and comparability.

Product teams should evaluate success criteria: time-to-value, compliance posture, ability to run offline audits, and total cost of ownership. For many organizations, a hybrid approach (managed model hosting, self-hosted orchestration, and a strict governance layer) strikes the right balance.

Project management and organizational adoption

AI healthcare automation projects require coordination across clinical, legal, IT, and data science teams. Use AI project management software to track experiments, approvals, data lineage, and deployment gates. Practical steps:

- Start with a narrow clinical use case that has measurable outcomes and low patient risk.

- Run a time-boxed pilot with dedicated success metrics and a rollback plan.

- Document model validation, user training, and monitoring thresholds before scaling.
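
Whatever project management tool a team uses, the deployment gate it enforces reduces to a checklist. The sketch below is a tool-agnostic illustration with hypothetical artifact names, drawn from the steps above: validation, training, monitoring thresholds, sign-off, and a rollback plan. Scaling is allowed only when every item is present.

```python
REQUIRED_ITEMS = (  # hypothetical gate items; adapt to local governance policy
    "model_validation_report",
    "user_training_completed",
    "monitoring_thresholds",
    "clinical_signoff",
    "rollback_plan",
)


def deployment_gate(record: dict):
    """Return (allowed, missing) for a scale-up request based on documented artifacts."""
    missing = [item for item in REQUIRED_ITEMS if not record.get(item)]
    return (not missing), missing
```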

Safe uses and misuse risks

Not all tasks are appropriate for fully automated execution. Content generation tools, such as Grok when marketing teams use it to draft tweets, are useful for non-clinical communication but must be strictly isolated from clinical knowledge bases. Avoid using generative models to craft diagnostic conclusions without clinician review. Treat any automated suggestion as advisory and keep a human-in-the-loop for clinical decisions.
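
Keeping automated suggestions advisory can be enforced in code, not just in policy. In the sketch below, the `Suggestion` type and the chart structure are hypothetical. Generated text cannot be written to the record until a named clinician has approved it, and the approving clinician is stored alongside the source model.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Suggestion:
    """Model output that stays advisory until a named clinician accepts it."""
    text: str
    source_model: str
    reviewed_by: Optional[str] = None


def approve(suggestion: Suggestion, clinician_id: str) -> Suggestion:
    # Returns a new, reviewed copy; the original unreviewed suggestion is unchanged.
    return replace(suggestion, reviewed_by=clinician_id)


def commit_to_record(suggestion: Suggestion, chart: list) -> bool:
    """Write to the chart only if reviewed; unreviewed content is never charted."""
    if suggestion.reviewed_by is None:
        return False
    chart.append({"text": suggestion.text, "model": suggestion.source_model,
                  "reviewer": suggestion.reviewed_by})
    return True
```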

Implementation playbook

Step by step:

- Assess: inventory systems, data quality, and regulatory constraints; pick a focused pilot.

- Design: choose architecture (event-driven or synchronous), identify integration points, and define SLAs.

- Build: implement secure data pipelines, model validation tests, and end-to-end tracing.

- Pilot: run in shadow mode or limited live deployment, measure clinical and system metrics.

- Govern: establish model governance, incident management, and audit processes.

- Scale: automate CI/CD, model retraining, and capacity planning while tracking ROI and clinician feedback.
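
A shadow-mode pilot produces paired records: what the model would have flagged and what clinicians actually decided. The sketch below summarizes such pairs. Clinician decisions are used as the reference here, but they are not ground truth, so these figures measure agreement rather than accuracy.

```python
def shadow_mode_report(pairs):
    """Compare model flags with clinician decisions from a shadow-mode pilot.

    pairs: list of (model_flagged_high_risk, clinician_flagged_high_risk) booleans.
    The model's output was only logged and never shown to clinicians.
    """
    if not pairs:
        raise ValueError("no shadow-mode records")
    tp = sum(1 for m, c in pairs if m and c)
    fp = sum(1 for m, c in pairs if m and not c)
    fn = sum(1 for m, c in pairs if not m and c)
    return {
        "agreement": sum(1 for m, c in pairs if m == c) / len(pairs),
        "sensitivity_vs_clinician": tp / (tp + fn) if tp + fn else None,
        "ppv_vs_clinician": tp / (tp + fp) if tp + fp else None,
    }
```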

Future outlook and standards

Expect stronger regulatory clarity and more healthcare-specific platforms. Open standards like FHIR simplify data integration; projects such as MedPerf and OpenMined improve benchmarking and privacy-preserving training. The EU AI Act and ongoing FDA guidance will push vendors to provide explainability, monitoring, and risk-based documentation.

Signals to watch

- More turnkey MLOps for regulated domains combining auditability with managed inference.

- Federated learning and privacy technologies to enable cross-institution model improvement without sharing raw PHI.

- Improved tooling for clinician-facing explainability and model provenance.
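
The core of federated learning is the aggregation step. The sketch below shows federated averaging (FedAvg): each site trains locally and shares only parameter updates, and the coordinator weights those updates by each site's example count. Sharing updates alone does not guarantee privacy, so real deployments typically add secure aggregation or differential privacy, which this sketch omits.

```python
def federated_average(client_updates):
    """FedAvg-style aggregation weighted by each site's local example count.

    client_updates: list of (num_examples, parameters), parameters being a list of floats.
    """
    total = sum(n for n, _ in client_updates)
    if total == 0:
        raise ValueError("no training examples reported")
    size = len(client_updates[0][1])
    averaged = [0.0] * size
    for n, params in client_updates:
        if len(params) != size:
            raise ValueError("all sites must share the same model shape")
        for i, p in enumerate(params):
            averaged[i] += p * n / total
    return averaged
```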

Practical Advice

Start small, instrument everything, and prioritize clinician trust. Use specialized project management tools to keep cross-functional work visible, and avoid over-optimizing for full automation initially—opt instead for augmentation with clear escalation. Keep compliance and monitoring baked into the architecture from day one; retrofitting these controls is expensive.

Finally, remember: automation is an organizational change project as much as a technical one. The most successful deployments invest as much in workflows and training as they do in models and infrastructure.