Organizations are under pressure to automate more, faster, and with measurable business impact. This article walks through what an AI predictive operating system looks like in practice: the concepts, architecture, integration patterns, platform choices, deployment trade-offs, and operational practices you need to make predictive automation dependable and valuable.

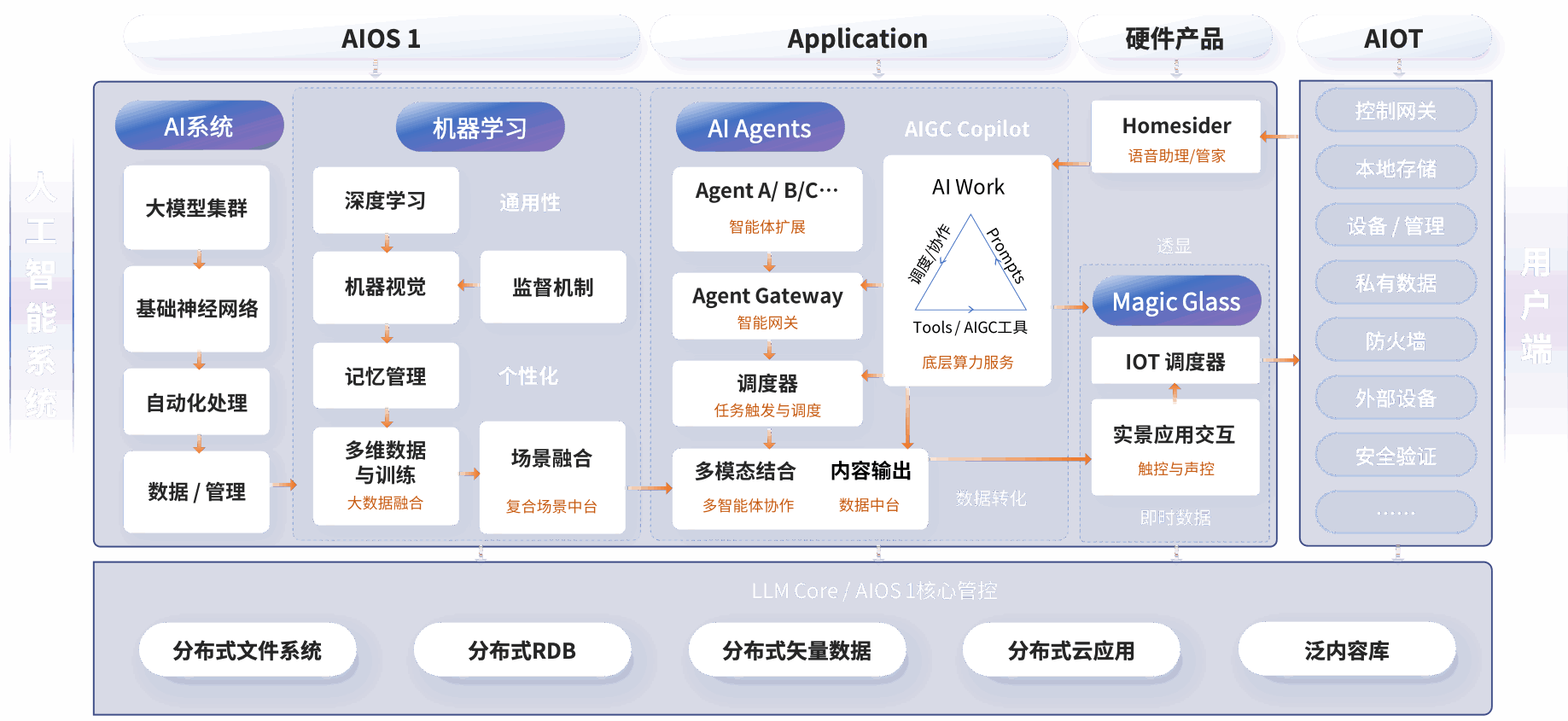

What is an AI predictive operating system?

An AI predictive operating system is a software layer that combines predictive models, data pipelines, orchestration, and policy controls to run automated decisions and workflows across an enterprise. Think of it as an operating system for business automation: instead of managing CPU or memory, it manages signals (events, user intent, telemetry), predictions (risk scores, lead propensity, demand forecasts), and actions (notifications, provisioned capacity, service calls).

For a beginner: imagine a smart assistant that watches your sales pipeline, predicts which deals will close this quarter, triggers follow-ups automatically, and makes sure cloud services are right-sized before demand spikes. That assistant is the promise of an AI predictive operating system.

Why this matters now

- Cost pressure pushes teams to automate repetitive decisions and reduce manual review cycles.

- Higher expectations for responsiveness make predictive actions (notify before a failure) more valuable than reactive ones.

- New tools and standards (model serving frameworks, event buses, and policy engines) make practical deployments easier than they were five years ago.

Core architecture: components and patterns

The architecture centers on four planes: data and features, model runtime, orchestration, and control. Each has distinct responsibilities and integration patterns.

Data and feature plane

This plane ingests events, enriches them, and stores features for real-time inference and model training. Key technologies: streaming (Kafka, Pulsar), feature stores (Feast), and data lakes or warehouses (Snowflake, BigQuery).

Model runtime plane

Models are exposed via a runtime that supports low-latency inference, batching, and versioning. Platforms include Triton, TorchServe, Seldon Core, and managed services from cloud providers. A good runtime supports A/B rollouts, canarying, and interpretable outputs (explanations, confidence intervals).
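As a rough illustration of canarying, the sketch below routes a fixed percentage of traffic to a new model version by hashing a request key, so the same caller consistently sees the same version. The function name and parameters are hypothetical, and serving platforms such as those listed above usually provide their own traffic-splitting mechanisms; this only shows the idea.

```python
import hashlib


def pick_model_version(
    request_key: str,
    stable_version: str,
    canary_version: str,
    canary_percent: int,
) -> str:
    """Send roughly canary_percent of keys to the canary, deterministically."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    # Hash the key so the routing decision is stable across calls and processes.
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return canary_version if bucket < canary_percent else stable_version


# The same customer always lands on the same version for a given percentage.
version = pick_model_version("customer-123", "v1", "v2", canary_percent=10)
```

Hashing on a stable key rather than choosing at random keeps each customer's experience consistent during the rollout and makes comparisons between versions easier to interpret.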

Orchestration plane

Orchestration coordinates actions: trigger model evaluation on events, chain microservices, or execute long-running processes. Patterns range from synchronous REST calls to event-driven workflows. Tools: Temporal, Airflow, and Argo Workflows, plus distributed compute frameworks like Ray when tasks are compute-heavy.

Control plane

The control plane defines policies, access controls, operational guardrails, and observability hooks. It houses model governance (model cards, lineage) and runtime policies (rate limits, kill switches). Open Policy Agent (OPA) is commonly used for policy enforcement.
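To make the runtime policies concrete, here is a minimal in-process sketch of a kill switch combined with a per-window action cap. It is plain Python rather than an OPA policy, and the `ActionGuard` class and its fields are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ActionGuard:
    """Hypothetical runtime guardrail: a kill switch plus a per-window action cap."""

    max_actions_per_window: int
    kill_switch_on: bool = False
    actions_in_window: int = 0

    def allow(self) -> bool:
        """Return True and count the action if policy permits it."""
        if self.kill_switch_on:
            return False
        if self.actions_in_window >= self.max_actions_per_window:
            return False
        self.actions_in_window += 1
        return True

    def reset_window(self) -> None:
        """Call from a scheduler at the start of each rate-limit window."""
        self.actions_in_window = 0


guard = ActionGuard(max_actions_per_window=2)
decisions = [guard.allow() for _ in range(3)]  # the third call exceeds the cap
```

In a real deployment the switch state would likely live in shared configuration so that operators can flip it without redeploying; that part is outside this sketch.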

Integration patterns: where automation touches systems

Practical deployments combine several integration styles. Here are common patterns and when to use each.

- Event-driven automation: Use for high-throughput asynchronous tasks (webhook events, telemetry). It decouples producers and consumers and scales naturally.

- Synchronous API orchestration: Use when an immediate decision is required in a user flow (loan approval UI, checkout). Keep latency budgets tight and fall back to cached or safe defaults when inference fails.

- Declarative workflow definitions: Define intent and let the platform reconcile state (useful for recovery and long-running processes).

- Edge or on-device inference: For latency-sensitive actions, push models closer to the user or device; manage updates and model size trade-offs carefully.
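For the synchronous pattern, the sketch below shows one way to enforce a latency budget and fall back to a safe default when inference is slow or fails. It uses only the standard library; `predict_with_fallback`, the budget, and the default value are assumptions for illustration, not part of any particular platform.

```python
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout
from typing import Callable, Tuple

# Shared worker pool for inference calls (size chosen arbitrarily for the sketch).
_executor = ThreadPoolExecutor(max_workers=4)


def predict_with_fallback(
    infer: Callable[[dict], float],
    payload: dict,
    budget_s: float,
    safe_default: float,
) -> Tuple[float, str]:
    """Return (score, source), where source records whether a fallback was used."""
    future = _executor.submit(infer, payload)
    try:
        return future.result(timeout=budget_s), "model"
    except FutureTimeout:
        # cancel() only helps if the call has not started yet;
        # a call that is already running keeps going in the background.
        future.cancel()
        return safe_default, "fallback_timeout"
    except Exception:
        return safe_default, "fallback_error"


score, source = predict_with_fallback(lambda p: 0.9, {"cart_value": 120}, 0.2, 0.5)
```

Returning the source alongside the score lets downstream code and dashboards distinguish real predictions from defaults, which matters when measuring how often the fallback path is taken.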

Developer guidance: API design and implementation trade-offs

Design APIs around intent and contracts, not model internals. Expose high-level endpoints like /predict-propensity or /request-provisioning that return structured predictions and action recommendations. Avoid leaking raw feature vectors in public APIs.
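A response contract for an endpoint like /predict-propensity might look like the following sketch. The field names, the 0.7 threshold, and the action labels are hypothetical; the point is that the payload carries a prediction and a recommendation, not the feature vector behind them.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class PropensityResponse:
    """Hypothetical public contract: no raw features, only the decision data."""

    entity_id: str
    score: float  # probability-like value in [0, 1]
    model_version: str
    recommended_action: str


def build_response(
    entity_id: str,
    score: float,
    model_version: str,
    act_threshold: float = 0.7,  # assumed cut-off for illustration
) -> PropensityResponse:
    """Translate a raw score into a structured prediction plus recommendation."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    action = "prioritize_outreach" if score >= act_threshold else "no_action"
    return PropensityResponse(entity_id, score, model_version, action)


response = build_response("lead-42", 0.83, "v3")
payload = asdict(response)  # plain dict, ready to serialize as JSON
```

Including the model version in every response makes it possible to trace a downstream action back to the model that recommended it.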

Key trade-offs:

- Batching vs per-request latency: Batching improves throughput and cost per prediction but increases tail latency. Design hybrid approaches that batch low-priority work and keep a fast path for real-time decisions.

- Monolithic agents vs modular pipelines: Monoliths are easier to deploy initially; modular pipelines are easier to test, reason about, and scale independently.

- Managed vs self-hosted platforms: Managed services reduce operational burden but can be costlier and limit compliance controls. Self-hosting provides control and potentially lower long-term cost but requires mature DevOps and SRE practices.
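The hybrid batching idea can be sketched as a small wrapper with two paths: a fast path that scores one item immediately, and a queue that accumulates low-priority items until a batch is full. `HybridScorer` and its methods are hypothetical names, and a production version would also flush on a timer so queued items never wait indefinitely.

```python
from typing import Callable, List, Sequence


class HybridScorer:
    """Fast path for real-time requests, size-triggered batches for the rest."""

    def __init__(
        self,
        score_batch: Callable[[Sequence[dict]], List[float]],
        max_batch: int = 32,
    ) -> None:
        self._score_batch = score_batch
        self._max_batch = max_batch
        self._pending: List[dict] = []

    def score_now(self, item: dict) -> float:
        """Real-time path: a batch of one, paid for with lower throughput."""
        return self._score_batch([item])[0]

    def enqueue(self, item: dict) -> List[float]:
        """Low-priority path: returns scores only when the batch fills up."""
        self._pending.append(item)
        if len(self._pending) >= self._max_batch:
            return self.flush()
        return []

    def flush(self) -> List[float]:
        """Score whatever is queued, in arrival order, and clear the queue."""
        batch, self._pending = self._pending, []
        return self._score_batch(batch) if batch else []
```

A caller would typically use `score_now` inside user-facing flows and `enqueue` for background work such as nightly rescoring.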

Deployment, scaling, and the role of predictive auto-scaling

Scaling predictive systems is not just horizontal scaling of inference instances. It requires capacity planning for data ingestion, model retraining, and orchestration throughput.

Reactive autoscaling (such as the Kubernetes Horizontal Pod Autoscaler) adjusts infrastructure after traffic changes. Predictive scaling instead uses demand forecasts produced by the platform to provision capacity ahead of time. Applied well, forecast-driven auto-scaling reduces cold starts and SLA breaches. It also introduces its own failure mode: a forecast that runs high leads to over-provisioning, and one that runs low leads to resource shortages. Combine predictive scaling with reactive fallbacks and short-lived burst capacity for robustness.
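One simple way to combine the two signals is to size for whichever is larger, the forecast or the currently observed load, and then clamp the result. The sketch below assumes a known per-replica throughput and a fixed headroom factor; all names and default values are illustrative.

```python
import math


def desired_replicas(
    forecast_rps: float,
    observed_rps: float,
    per_replica_rps: float,
    headroom: float = 0.2,
    min_replicas: int = 1,
    max_replicas: int = 50,
) -> int:
    """Size for the larger of forecast and observed load, then clamp."""
    if per_replica_rps <= 0:
        raise ValueError("per_replica_rps must be positive")
    # Taking the max is the reactive fallback: if the forecast runs low,
    # observed traffic still drives capacity up.
    target_rps = max(forecast_rps, observed_rps) * (1.0 + headroom)
    needed = math.ceil(target_rps / per_replica_rps)
    # max_replicas bounds the cost of a forecast that runs high.
    return max(min_replicas, min(max_replicas, needed))


replicas = desired_replicas(forecast_rps=900, observed_rps=400, per_replica_rps=100)
```

With a forecast of 900 requests per second, 20% headroom, and 100 requests per second per replica, the target is 1,080 requests per second, which rounds up to 11 replicas.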

Observability and SLOs for automation

Operational visibility is critical. Monitor these signals continuously:

- Prediction metrics: latency percentiles, throughput, error rate, confidence distribution.

- Business metrics: conversion lift, false positive/negative rates tied to business outcomes.

- Model health: data drift, feature drift, PR-AUC or ROC-AUC, prediction distribution changes.

- System health: queue lengths, retry rates, resource utilization, cold-start frequency.

Use OpenTelemetry, Prometheus, and Grafana for telemetry; log model inputs and outputs with careful privacy controls to enable debugging and retraining. Define SLOs for both technical metrics (e.g., 99th-percentile latency) and business metrics (e.g., forecast error within X%).
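As one example of a drift signal, the sketch below computes a population stability index (PSI) between a reference sample and a recent sample of a feature or prediction score. PSI is swapped in here as a common choice, not something the architecture requires. The equal-width binning and the epsilon floor are simplifying assumptions, and the widely quoted alert levels (around 0.1 and 0.25) are rules of thumb, not fixed standards.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI over equal-width bins derived from the reference (expected) sample."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant samples

    def fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1  # clamp out-of-range values
        # Floor at eps so empty bins do not produce log(0).
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(100)]
recent = [0.5 + i / 200 for i in range(100)]  # shifted toward higher values
psi = population_stability_index(reference, recent)
```

A value near zero means the two samples fall into the bins in similar proportions; larger values indicate that the recent data has moved away from what the model was trained on.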

Security, privacy, and governance

Security is non-negotiable when automation affects customer outcomes. Best practices include:

- Role-based access control and least privilege for model deployment and data access.

- End-to-end encryption for data in transit and at rest, and secure secret management for credentials.

- Provenance and lineage to trace how predictions were derived; store model versions, feature transformations, and training data hashes.

- Explainability and human-in-the-loop controls for high-risk decisions; maintain model cards and deploy scoring thresholds with manual review gates where needed.
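The provenance item above can be illustrated with a small lineage record that stores a model version, a hash of the training data, and the feature transformation version, plus a fingerprint of the record itself. The `LineageRecord` fields are hypothetical; real systems usually hash files or dataset manifests and keep the records in a model registry.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


def sha256_hex(data: bytes) -> str:
    """Content hash used to pin an exact snapshot of the training data."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class LineageRecord:
    """Hypothetical minimal record tying a model to its inputs."""

    model_version: str
    training_data_sha256: str
    feature_transform_version: str


def record_fingerprint(record: LineageRecord) -> str:
    """Stable fingerprint of the record, suitable for an audit log entry."""
    canonical = json.dumps(asdict(record), sort_keys=True).encode("utf-8")
    return sha256_hex(canonical)


training_bytes = b"lead_id,converted\n1,0\n2,1\n"  # stand-in for a real dataset
record = LineageRecord("v3", sha256_hex(training_bytes), "transforms-2024-01")
fingerprint = record_fingerprint(record)
```

Serializing with sorted keys keeps the fingerprint stable regardless of field order, so two systems that hold the same record compute the same value.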

Regulatory considerations such as the EU AI Act and data protection laws require documentation of risk assessments and human oversight for certain use cases. Architect your control plane to generate the artifacts auditors will ask for.

Product perspective: ROI, vendor choices, and business cases

Decision-makers should focus on measurable impact. A common, high-return use case is AI predictive sales analytics: score leads by conversion likelihood, automate outreach sequencing, and prioritize salesperson time. A typical SaaS company that implemented a predictive pipeline reduced time-to-close by 20% and increased win rate by 12%, yielding a payback period under six months for the automation platform investment.

Vendor comparison highlights:

- Cloud managed stacks (AWS SageMaker, Google Vertex AI, Azure ML): Fast time-to-value, integrated MLOps, but sometimes opaque cost structure and limited customization.

- Open-source and self-hosted (Kubeflow, Seldon Core, BentoML, Ray, Feast): Greater flexibility and cost control, higher operational overhead, and better for data-sensitive workloads.

- Workflow/orchestration specialists (Temporal, Argo, Airflow): Strong for complex business processes and human-in-the-loop flows; integrate with model runtimes for end-to-end automation.

For business leaders, the right approach often combines managed model training and experimentation with self-hosted inference or edge deployment to reconcile speed and compliance requirements.

Case study: Predictive operations for an e-commerce platform

A mid-size e-commerce operator used an AI predictive operating system to reduce order cancellations and avoid stockouts. The system combined demand forecasts with inventory orchestration and supplier notifications. The architecture used a streaming ingestion layer, a feature store, and a model serving tier with low-latency predictions. Predictive scaling heuristics were applied to the checkout flow to prevent timeouts during promotions.

Results after six months: a 7% reduction in stockouts, a 15% improvement in on-time fulfillment, and clear cost savings via smarter procurement. Operational lessons: the team invested early in data quality checks and monitoring, which prevented a common failure mode in which models are trained on stale or incomplete inventory data.

Implementation playbook: step-by-step in prose

- Start small with one high-value use case (e.g., lead scoring or demand forecasting). Define measurable KPIs and SLOs.

- Build a reliable data pipeline and feature store. Ensure lineage from raw events to features used in production.

- Prototype models and expose them through a model runtime that supports versioning and canary rollouts.

- Implement orchestration that ties predictions to actions, starting with non-critical automations before moving to higher-risk decisions.

- Introduce observability and governance (logging, explainability, audit trails) before scaling the platform across teams.

- Iterate on predictive scaling policies and fallbacks to optimize cost and reliability. Combine forecasts with short-term reactive capacity to handle surprises.

Common pitfalls and failure modes

Watch out for:

- Feedback loops where automation changes the distribution of data, invalidating models.

- Over-optimization on proxy metrics rather than business outcomes.

- Under-investment in retraining and data pipelines: many failures come from stale models and broken feature extraction.

- Ignoring error budgets and SLOs, which leads to brittle automation that harms customer experience.
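To make the error-budget point concrete, the sketch below computes how much of a budget remains for a given SLO target and request counts. The function name and the example numbers are assumptions; the arithmetic is the usual definition, where the budget is the number of failures the SLO tolerates.

```python
def error_budget_remaining(
    slo_target: float,
    total_requests: int,
    failed_requests: int,
) -> float:
    """Fraction of the error budget left; negative once the budget is overspent."""
    if not 0.0 < slo_target < 1.0:
        raise ValueError("slo_target must be strictly between 0 and 1")
    if total_requests <= 0:
        return 1.0  # no traffic yet, so nothing has been spent
    allowed_failures = (1.0 - slo_target) * total_requests
    return 1.0 - failed_requests / allowed_failures


# A 99% SLO over 10,000 requests tolerates about 100 failures; 50 uses half.
remaining = error_budget_remaining(0.99, 10_000, 50)
pause_risky_rollouts = remaining <= 0.0
```

A team might gate new automations or model rollouts on this value, pausing changes when the budget is exhausted and resuming when it recovers.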

Looking ahead

Expect the space to evolve along three axes: better integration between model governance and orchestration, stronger standards for auditability, and more mature predictive scaling techniques. Open-source projects like Ray, Feast, and Open Policy Agent, along with managed offerings from cloud providers, will continue to drive capability parity, making it practical for more organizations to adopt an AI predictive operating system.

Adopting this architecture is not about replacing human judgment but amplifying it: reduce routine toil, surface high-value decisions, and provide clear evidence for automated actions. With careful design, observability, and governance, predictive automation can deliver measurable ROI while keeping risks under control.

Next steps

If you are starting out: pick a clear business KPI, instrument the data pipeline and SLOs, and prototype with one predictive model. If you are scaling: invest in feature quality, governance artifacts, and a hybrid approach for predictive and reactive scaling to keep costs and availability balanced.