Why an AI operating system matters for the grid

Imagine the electrical grid as an orchestra: generators, substations, meters, and market signals all need to play in sync. Traditionally, grid operations have used manual playbooks, SCADA alarms, and siloed analytics. An AIOS-based smart grid replaces the manual conductor with an operating layer that coordinates data, models, and automated actions across edge devices and cloud services. For everyday readers, that means fewer blackout hours, faster outage recovery, and better integration of renewables. For operators and engineers, it means embedding automation where latency, safety, and compliance matter.

Core concept explained simply

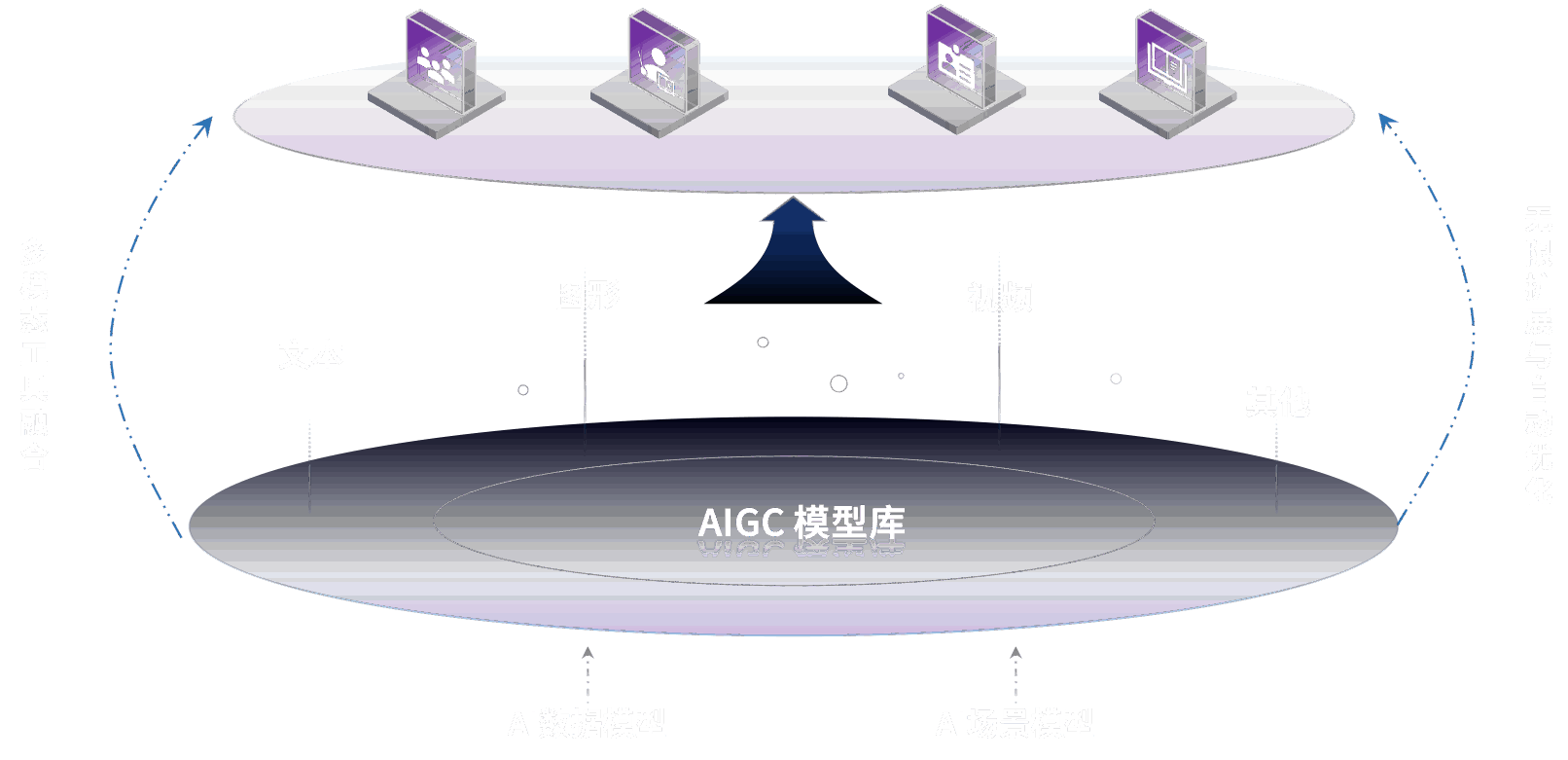

The phrase AIOS-based smart grid combines two ideas: an AI Operating System (AIOS) and smart grid control. An AIOS is not a single product: it is a software layer that manages models, data pipelines, policies, and agents. In a smart grid context, AIOS handles tasks such as congestion prediction, demand response orchestration, fault detection, and automated dispatch. Think of it as the middleware that translates telemetry into decisions while enforcing safety and regulatory constraints.

Short scenario: Edge sensors on a neighborhood feeder detect irregular voltage swings and send events to the AIOS. The system runs a fast fault classifier, isolates the affected section, reconfigures switches, and alerts crews, often before many customers notice.
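The scenario can be sketched as a small event handler. This is a minimal illustration under stated assumptions, not a real AIOS API: the threshold-based `classify_fault`, the 0.9 to 1.1 per-unit band, and the `isolate`/`reconfigure`/`alert_crew` callbacks are all hypothetical stand-ins for a trained classifier and real switching and work-order interfaces.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class VoltageEvent:
    section_id: str
    samples_pu: List[float]  # voltage samples in per-unit (1.0 = nominal)


def classify_fault(event: VoltageEvent, low: float = 0.9, high: float = 1.1) -> bool:
    """Hypothetical stand-in for a fast fault classifier: flags the section
    when more than half of the samples fall outside the [low, high] band."""
    out_of_band = sum(1 for v in event.samples_pu if v < low or v > high)
    return out_of_band > len(event.samples_pu) / 2


@dataclass
class ResponseLog:
    actions: List[str] = field(default_factory=list)


def handle_event(
    event: VoltageEvent,
    isolate: Callable[[str], None],      # hypothetical: open the sectionalizing switch
    reconfigure: Callable[[str], None],  # hypothetical: close a tie switch to re-feed healthy load
    alert_crew: Callable[[str], None],   # hypothetical: create a work order
    log: ResponseLog,
) -> bool:
    """Run the classify -> isolate -> reconfigure -> alert sequence for one event."""
    if not classify_fault(event):
        log.actions.append(f"no-op:{event.section_id}")
        return False
    for name, step in (("isolate", isolate), ("reconfigure", reconfigure), ("alert", alert_crew)):
        step(event.section_id)
        log.actions.append(f"{name}:{event.section_id}")
    return True
```

Keeping the actuation steps as injected callbacks makes the same handler usable in shadow mode (callbacks that only log) and in production (callbacks that command hardware).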

Architecture: layers, flow, and trade-offs

At a high level, an AIOS-based smart grid architecture has three planes: the data plane, the model/control plane, and the policy/ops plane.

Data plane

This handles telemetry ingestion, stream processing, and long-term storage. Typical components include message buses (Apache Kafka, Apache Pulsar), stream processors (Apache Flink, Spark Structured Streaming), and time-series stores (InfluxDB, TimescaleDB). Edge gateways collect telemetry from devices that speak protocols such as IEC 61850 and DNP3 and normalize the payloads. The key trade-off here is throughput vs latency: batching improves throughput but increases tail latency, which hurts most during emergencies.
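One common way to soften the batching trade-off is a micro-batcher that flushes on size or age, with a bypass for urgent messages. The sketch below assumes a hypothetical `emergency` flag on each message and passes the clock in explicitly; it is not tied to Kafka, Pulsar, or any particular client library.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Telemetry:
    device_id: str
    value: float
    emergency: bool = False  # hypothetical flag set by the edge gateway


@dataclass
class MicroBatcher:
    max_size: int = 100      # flush when this many messages are buffered
    max_wait_s: float = 0.5  # ...or when the oldest buffered message is this old
    _buffer: List[Telemetry] = field(default_factory=list, init=False)
    _first_ts: Optional[float] = field(default=None, init=False)

    def add(self, msg: Telemetry, now: float) -> List[List[Telemetry]]:
        """Add one message and return any batches that are ready to send."""
        if msg.emergency:
            # Emergency telemetry skips the buffer so it never waits for max_wait_s.
            return [[msg]]
        if not self._buffer:
            self._first_ts = now
        self._buffer.append(msg)
        if len(self._buffer) >= self.max_size or now - self._first_ts >= self.max_wait_s:
            return [self._flush()]
        return []

    def poll(self, now: float) -> List[List[Telemetry]]:
        """Call from a timer so a quiet stream still flushes on age."""
        if self._buffer and now - self._first_ts >= self.max_wait_s:
            return [self._flush()]
        return []

    def _flush(self) -> List[Telemetry]:
        batch, self._buffer, self._first_ts = self._buffer, [], None
        return batch
```

Routine readings get throughput from batching, while the emergency path keeps protection-relevant events out of the queue.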

Model / control plane

Model serving and agent orchestration live here. Model servers (NVIDIA Triton, KServe) provide low-latency inference; orchestration frameworks (Argo, Ray, Kubeflow) manage pipelines and retraining. For interactive operator use, language interfaces powered by NVIDIA AI language models can support natural-language queries and runbook lookup, which is useful for incident triage and decision support. Decide early whether inference runs at the edge or in the cloud: edge placement reduces round-trip time but increases deployment complexity and hardware costs.
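The edge-versus-cloud decision can be made explicit by comparing measured latencies against the control loop's budget. This is a hypothetical rule of thumb, not a vendor recommendation: the `LatencyProfile` fields, the 0.8 safety margin, and the preference for cloud when both options fit (because edge adds deployment complexity) are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LatencyProfile:
    edge_inference_ms: float   # measured on the edge device
    cloud_inference_ms: float  # measured on the cloud model server
    network_rtt_p99_ms: float  # tail round-trip time from the site to the cloud region


def choose_placement(budget_ms: float, p: LatencyProfile, safety_margin: float = 0.8) -> str:
    """Return 'cloud', 'edge', or 'infeasible' for a given end-to-end latency budget."""
    usable = budget_ms * safety_margin  # leave headroom for queuing and retries
    if p.cloud_inference_ms + p.network_rtt_p99_ms <= usable:
        return "cloud"  # assumption: prefer cloud when it fits, since it is simpler to operate
    if p.edge_inference_ms <= usable:
        return "edge"
    return "infeasible"  # neither placement meets the budget; revisit the model or hardware
```

Using the P99 round-trip time rather than the average matters here, because control decisions that miss their window during congestion are the ones that hurt.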

Policy and governance plane

This includes authorization, compliance, audit trails, and safety constraints. Gatekeeping modules enforce rules like maximum allowed set-point changes, required human approvals, and rollback procedures. Integration with identity providers, fine-grained RBAC, and immutable audit logs is non-negotiable in regulated environments.
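A gatekeeping rule such as "maximum allowed set-point change" can be expressed as a small pure function that every recommended command passes through. The sketch below is an assumption-laden illustration: the two-tier thresholds, the `SetPointPolicy` fields, and the per-unit values in the usage are hypothetical and would come from engineering studies and grid codes in practice.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    REJECT = "reject"


@dataclass(frozen=True)
class SetPointCommand:
    device_id: str
    current: float  # present set point
    target: float   # set point the model recommends


@dataclass(frozen=True)
class SetPointPolicy:
    lower: float            # absolute floor for the set point
    upper: float            # absolute ceiling for the set point
    max_auto_delta: float   # largest change applied without a human
    max_total_delta: float  # largest change allowed at all, even with approval


def evaluate(cmd: SetPointCommand, policy: SetPointPolicy) -> Verdict:
    """Classify a recommended set-point change against hard limits and approval thresholds."""
    if not (policy.lower <= cmd.target <= policy.upper):
        return Verdict.REJECT
    delta = abs(cmd.target - cmd.current)
    if delta > policy.max_total_delta:
        return Verdict.REJECT
    if delta > policy.max_auto_delta:
        return Verdict.NEEDS_APPROVAL
    return Verdict.ALLOW
```

Keeping the check free of side effects makes it easy to unit test, replay against historical commands, and cite in an audit trail.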

Integration patterns and API design

Successful deployments avoid tightly coupled, synchronous integrations between model outputs and control endpoints. Common patterns:

- Event-driven automation: models subscribe to telemetry topics and emit recommended actions as events. An execution broker applies policies before sending commands.

- Command-approval pipeline: high-risk actions require human-in-the-loop approval; lower-risk actions are automated with rollback hooks.

- Sidecar inference for edge devices: a lightweight model runs locally while a stronger cloud model provides periodic validation and retraining signals.
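The event-driven and command-approval patterns can be combined in an execution broker that routes each recommended action by risk tier. This is a minimal in-memory sketch; the two risk tiers, the `Action` shape, and the method names are hypothetical, and a real broker would persist its queue and hooks.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Action:
    action_id: str
    risk: str                      # hypothetical tiers: "low" or "high"
    apply: Callable[[], None]      # sends the command to the device
    rollback: Callable[[], None]   # reverses the command


@dataclass
class ExecutionBroker:
    pending_approval: List[Action] = field(default_factory=list)
    rollback_hooks: Dict[str, Callable[[], None]] = field(default_factory=dict)

    def submit(self, action: Action) -> str:
        """Execute low-risk actions immediately; queue everything else for a human."""
        if action.risk == "low":
            self._execute(action)
            return "executed"
        self.pending_approval.append(action)
        return "queued"

    def approve(self, action_id: str) -> bool:
        """Operator approval: execute the queued action with this ID, if any."""
        for i, action in enumerate(self.pending_approval):
            if action.action_id == action_id:
                self._execute(self.pending_approval.pop(i))
                return True
        return False

    def roll_back(self, action_id: str) -> bool:
        """Invoke the stored rollback hook once; returns False if none is registered."""
        hook = self.rollback_hooks.pop(action_id, None)
        if hook is None:
            return False
        hook()
        return True

    def _execute(self, action: Action) -> None:
        action.apply()
        self.rollback_hooks[action.action_id] = action.rollback
```

Registering the rollback hook at execution time means every automated change has a known reversal path before anything goes wrong.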

API design decisions should prioritize idempotency, versioning, and observability. Use correlation IDs for each event, include schema validation at ingestion boundaries, and expose clear health and readiness endpoints for your model services.
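A minimal sketch of those three ideas together: validate at the ingestion boundary, stamp each event with a correlation ID and schema version, and make the consumer idempotent. Assumptions: the required-field schema is hypothetical, the correlation ID doubles as the idempotency key, and the in-memory `seen` set stands in for a bounded, persistent store.

```python
import uuid
from dataclasses import dataclass, field
from typing import Any, Dict, Set

# Hypothetical command schema: field name -> expected Python type.
REQUIRED_FIELDS = {"device_id": str, "command": str, "value": float}


def validate(payload: Dict[str, Any]) -> None:
    """Reject malformed payloads at the boundary instead of deep in the pipeline."""
    for name, typ in REQUIRED_FIELDS.items():
        if name not in payload:
            raise ValueError(f"missing field: {name}")
        if not isinstance(payload[name], typ):
            raise ValueError(f"field {name} must be {typ.__name__}")


def make_event(payload: Dict[str, Any], schema_version: str = "v1") -> Dict[str, Any]:
    validate(payload)
    return {
        "correlation_id": str(uuid.uuid4()),  # follows the event through logs and traces
        "schema_version": schema_version,     # lets consumers handle old and new formats
        "payload": payload,
    }


@dataclass
class IdempotentConsumer:
    seen: Set[str] = field(default_factory=set)  # production: bounded store with a TTL
    applied: int = 0

    def handle(self, event: Dict[str, Any]) -> bool:
        """Apply an event once; redeliveries with the same ID are ignored."""
        cid = event["correlation_id"]
        if cid in self.seen:
            return False
        self.seen.add(cid)
        self.applied += 1  # stand-in for actually applying the command
        return True
```

Because message buses commonly deliver at least once, the consumer rather than the producer has to guarantee that a retried command is not applied twice.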

Deployment and scaling considerations

Scale challenges in an AIOS-based smart grid are multi-dimensional: number of edge devices, model inference QPS, and retraining throughput. Practical considerations:

- Hybrid deployment: run critical, latency-sensitive inference on local hardware (NVIDIA EGX or Jetson), with cloud GPUs for batch retraining and heavy models.

- Autoscaling policies must respect safety windows: during storms you may want slower scaling to avoid oscillations in automated control.

- Cost models: cloud inference costs are often billed per request or GPU-hour; factor in data egress and storage for telemetry. A common approach is a mixed pricing model: edge-capacity fixed cost plus cloud burst capacity for large-scale analytics.
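The "slower scaling during storms" idea can be sketched as an autoscaler that moves in bounded steps and waits out a cooldown between changes. This is a hypothetical illustration, not the behavior of any specific autoscaler: the `storm_mode` flag and the 3x cooldown multiplier are assumptions.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DampedAutoscaler:
    min_replicas: int
    max_replicas: int
    max_step: int = 1          # replicas added or removed per decision
    cooldown_s: float = 300.0  # minimum seconds between scaling actions
    storm_mode: bool = False   # hypothetical operator-set flag for severe weather
    storm_factor: float = 3.0  # assumption: cooldown multiplier while storm_mode is on
    _last_change: Optional[float] = field(default=None, init=False)

    def decide(self, current: int, desired: int, now: float) -> int:
        """Return the replica count to run, moving toward `desired` in damped steps."""
        cooldown = self.cooldown_s * (self.storm_factor if self.storm_mode else 1.0)
        if self._last_change is not None and now - self._last_change < cooldown:
            return current  # still cooling down: hold steady
        target = max(self.min_replicas, min(self.max_replicas, desired))
        step = max(-self.max_step, min(self.max_step, target - current))
        if step != 0:
            self._last_change = now
        return current + step
```

Bounding both the step size and the decision rate limits how fast capacity can swing, which helps avoid feedback between scaling and the automated control actions that depend on it.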

Observability, monitoring, and failure signals

Observability is central to trust. Track three classes of signals:

- Infrastructure metrics: CPU/GPU utilization, memory, network throughput, disk I/O.

- Application metrics: model latency distributions (P50, P95, P99), inference QPS, success/failure rates, and queue lengths for event buses.

- Domain signals: control action rate, safety constraint violations, frequency deviations, and customer-impact metrics such as SAIDI/SAIFI.
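SAIDI and SAIFI follow the IEEE 1366 definitions: SAIFI is total customer interruptions divided by customers served, and SAIDI is total customer interruption minutes divided by customers served. The sketch below assumes a 5-minute threshold for counting an interruption as sustained; confirm the threshold and any major-event exclusions your regulator applies.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Interruption:
    customers_affected: int
    duration_min: float


def reliability_indices(
    events: Iterable[Interruption],
    customers_served: int,
    sustained_threshold_min: float = 5.0,  # assumption: momentary events are excluded
) -> Tuple[float, float]:
    """Return (SAIDI in minutes, SAIFI in interruptions) per customer served."""
    sustained = [e for e in events if e.duration_min > sustained_threshold_min]
    customer_interruptions = sum(e.customers_affected for e in sustained)
    customer_minutes = sum(e.customers_affected * e.duration_min for e in sustained)
    saidi = customer_minutes / customers_served
    saifi = customer_interruptions / customers_served
    return saidi, saifi
```

For example, outages affecting 1,000 customers for 90 minutes and 500 customers for 30 minutes on a 10,000-customer system give 105,000 customer-minutes, so SAIDI is 10.5 minutes and SAIFI is 0.15.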

Tooling choices include Prometheus/Grafana for metrics, OpenTelemetry for tracing, and ELK or Splunk for logs. Define clear SLOs for automated actions and alert on both infrastructure and domain-level anomalies.
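In production, percentiles usually come from Prometheus histograms, but the SLO check itself is simple. The sketch below computes nearest-rank percentiles from raw samples and reports which latency SLOs are breached; the SLO values in the usage are hypothetical.

```python
import math
from typing import Dict, Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile, p in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # multiply first to limit float error
    return ordered[max(rank, 1) - 1]


def slo_breaches(latencies_ms: Sequence[float], slo_ms: Dict[float, float]) -> Dict[float, float]:
    """Map each breached percentile (e.g. 99.0) to its observed latency."""
    breaches = {}
    for p, limit in slo_ms.items():
        observed = percentile(latencies_ms, p)
        if observed > limit:
            breaches[p] = observed
    return breaches
```

Alerting on tail percentiles rather than averages catches the slow requests that delay automated actions when they matter most.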

Security and governance

Regulatory regimes such as NERC CIP (North America), the ENTSO-E network codes (EU), and local grid codes impose strict requirements. Best practices:

- Zero trust networking between edge, cloud, and operators. Encrypt telemetry in transit and at rest.

- Model governance: catalog models, track lineage, version datasets, and require approvals for deploys into production decision paths.

- Explainability and auditability: ensure automated decisions can be traced back to inputs and model outputs, and provide human-readable rationales where possible.

- Adversarial resilience: monitor for distribution shift and anomalous inputs that could trigger unsafe actions.
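One widely used way to monitor a single input feature for distribution shift is the Population Stability Index (PSI), which compares binned live data against a training-time reference. This sketch is one technique among many, not a complete adversarial defense; the bin edges are hypothetical, and the thresholds often quoted for PSI (around 0.1 for a moderate and 0.25 for a significant shift) are rules of thumb.

```python
import bisect
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Fraction of values in each bin; edges must be sorted ascending.
    Empty bins get a small floor (eps) so the log term stays finite."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(reference: Sequence[float], live: Sequence[float], edges: Sequence[float]) -> float:
    """Population Stability Index: sum over bins of (live - ref) * ln(live / ref)."""
    ref = bin_fractions(reference, edges)
    cur = bin_fractions(live, edges)
    return sum((c - r) * math.log(c / r) for c, r in zip(cur, ref))
```

A high PSI on an input feeding an automated control path is a reasonable trigger to fall back to human approval until the model is reviewed.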

AI operations in practice: practical playbook

Step-by-step adoption guidance:

- Start with a high-value pilot: choose a bounded domain such as feeder-level fault detection or demand response orchestration.

- Define success metrics up front: e.g., reduction in outage MTTR, percentage improvement in peak shaving, or operator time saved.

- Build lightweight data contracts and ingest a representative dataset from edge devices for model training and validation.

- Deploy a model in shadow mode: let it run in parallel and log decisions without actuating hardware. Compare outputs to operator actions.

- Introduce controlled automation with guardrails: short-duration, reversible commands with human approval for escalations.

- Scale gradually, adding observability, SLOs, and formal governance as automation covers more decision space.
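Comparing shadow-mode output with operator actions can start as a simple agreement report. This sketch assumes both logs are already aligned event by event and use the same action labels (the labels in the usage are hypothetical); it also tallies *which* disagreements occur, since a model that acts when operators did nothing is a different risk from one that stays idle.

```python
from collections import Counter
from typing import Dict, Sequence


def shadow_report(model_actions: Sequence[str], operator_actions: Sequence[str]) -> Dict[str, object]:
    """Compare logged shadow-mode recommendations with what operators actually did."""
    if len(model_actions) != len(operator_actions) or not model_actions:
        raise ValueError("need two non-empty, equally long action logs")
    pairs = list(zip(model_actions, operator_actions))
    agreed = sum(1 for m, o in pairs if m == o)
    # Count (model_said, operator_did) pairs so reviewers see how the model disagrees.
    disagreements = Counter((m, o) for m, o in pairs if m != o)
    return {
        "agreement_rate": agreed / len(pairs),
        "disagreements": dict(disagreements),
    }
```

A pair such as `("isolate", "none")` points to possible false positives that should be reviewed before any automation is enabled.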

Case studies and vendor comparisons

Several industrial vendors and open-source projects intersect with AIOS use cases. Siemens, Schneider Electric, and GE offer end-to-end grid automation suites with strong OT integration and domain expertise. Cloud vendors (AWS, Azure, Google Cloud) provide managed streaming, model serving, and digital twins — useful when you need fast time-to-market. Open-source building blocks like Apache Kafka, Kubernetes, Apache Flink, and Ray allow more custom control but require in-house SRE and security resources.

For model serving and inference, options include NVIDIA Triton for optimized GPU inference and KServe for Kubernetes-native serving. For operator interfaces and assistant-style queries, teams may evaluate NVIDIA AI language models alongside other LLM providers, considering latency and data residency. If your workload includes routine document work or meter ingestion, integrating AI data entry automation can reduce clerical labor and improve data quality — but must be folded into validation workflows to avoid garbage-in/garbage-out.
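Validation of automatically ingested meter reads can begin with a few plausibility checks, loosely in the spirit of utility validation workflows. This is a hypothetical sketch: it assumes the reads belong to one meter and arrive in order, that the register is cumulative kWh without rollover, and that 50 kWh per hour is a plausible ceiling. Flagged reads are reported rather than dropped, and later reads are compared against the last good read.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class MeterRead:
    meter_id: str
    timestamp: int       # Unix seconds
    register_kwh: float  # cumulative register value (assumption: no rollover)


def validate_reads(reads: List[MeterRead], max_kwh_per_hour: float = 50.0) -> List[Tuple[int, str]]:
    """Return (index, reason) for each read that fails a basic plausibility check."""
    issues: List[Tuple[int, str]] = []
    last_good: Optional[MeterRead] = None
    for i, r in enumerate(reads):
        reason = None
        if r.register_kwh < 0:
            reason = "negative register"
        elif last_good is not None:
            dt_h = (r.timestamp - last_good.timestamp) / 3600
            delta = r.register_kwh - last_good.register_kwh
            if dt_h <= 0:
                reason = "non-increasing timestamp"
            elif delta < 0:
                reason = "register decreased"
            elif delta / dt_h > max_kwh_per_hour:
                reason = "implausible consumption"
        if reason is None:
            last_good = r  # only trusted reads become the comparison baseline
        else:
            issues.append((i, reason))
    return issues
```

Routing flagged reads to a review queue, instead of silently discarding them, keeps automated ingestion from hiding either bad data or genuine meter faults.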

ROI, impact, and common operational challenges

ROI often shows up as reduced outage durations, lower staffing costs due to automation, and deferred capital expense through improved asset utilization. Utilities reporting on automation pilots have cited operational cost reductions in the range of 5-20% and measurable improvements in outage response times, though results depend on scope and baseline. Realizing these benefits requires continuous investment in data quality, monitoring, and governance.

Common pitfalls:

- Underestimating data drift: models degrade if retraining is not operationalized.

- Over-automation without safety constraints: automated actions can amplify failures if inputs are noisy.

- Integration debt: OT protocols, legacy SCADA systems, and vendor-specific formats slow down rollouts.

- Governance gaps: insufficient audit logs or unclear ownership leads to slow recovery when incidents occur.

Standards, policy and safety considerations

An AIOS-based smart grid deployment must align with standards such as IEC 61850 for substation automation, NERC CIP for cybersecurity, and emerging AI governance principles (transparency, fairness, accountability). Regulators are paying more attention to automated decision systems that affect critical infrastructure, so maintain clear documentation of model behavior and retention policies to ease audits.

Future outlook

Expect three converging trends: better edge inference hardware, tighter hybrid orchestration tools, and more mature model governance. Advances in specialized inference stacks will reduce latency and cost for local decision-making. Orchestration frameworks that treat models as first-class entities will simplify lifecycle management. Meanwhile, persistent concerns — adversarial inputs, regulatory scrutiny, and integration complexity — will keep governance a central pillar of any deployment.

Key Takeaways

An AIOS-based smart grid is a practical way to automate and scale decision-making across the power system, but success hinges on architecture and operations as much as on models. Start small, use event-driven patterns, enforce strict governance, and make observability and safety first-class. Evaluate vendors and open-source components by how well they integrate with OT, support edge inference, and provide auditable model lifecycles. Where natural language assistance is helpful, consider tools like NVIDIA AI language models for operator interfaces — but measure latency and data privacy impacts before production use. Finally, fold routine tasks such as meter ingestion into AI data entry automation carefully, with validation, to realize reliable operational gains.