Artificial Intelligence (AI) continues to transform industries across the globe, bringing both immense opportunities and significant challenges. In recent months, key developments have emerged in three areas: artificial general intelligence (AGI) research, AI technologies aimed at enhancing patient safety, and the ongoing dialogue surrounding the fairness of AI systems. This article examines each of these areas in turn, shedding light on the state of AI as we enter a new era of innovation.

The concept of AGI has captivated researchers, policymakers, and technologists for years. Unlike narrow AI, which is designed to solve specific problems, AGI aims to perform any cognitive task that a human being can. Recent trends in AGI research indicate a shift towards collaborative intelligence, where human and AI systems work together to solve complex issues. According to a report published by the Future of Humanity Institute, researchers are increasingly focusing on aligning AGI objectives with human values to mitigate potential risks. This involves developing models that not only understand human goals but also consider the ethical implications of their actions.

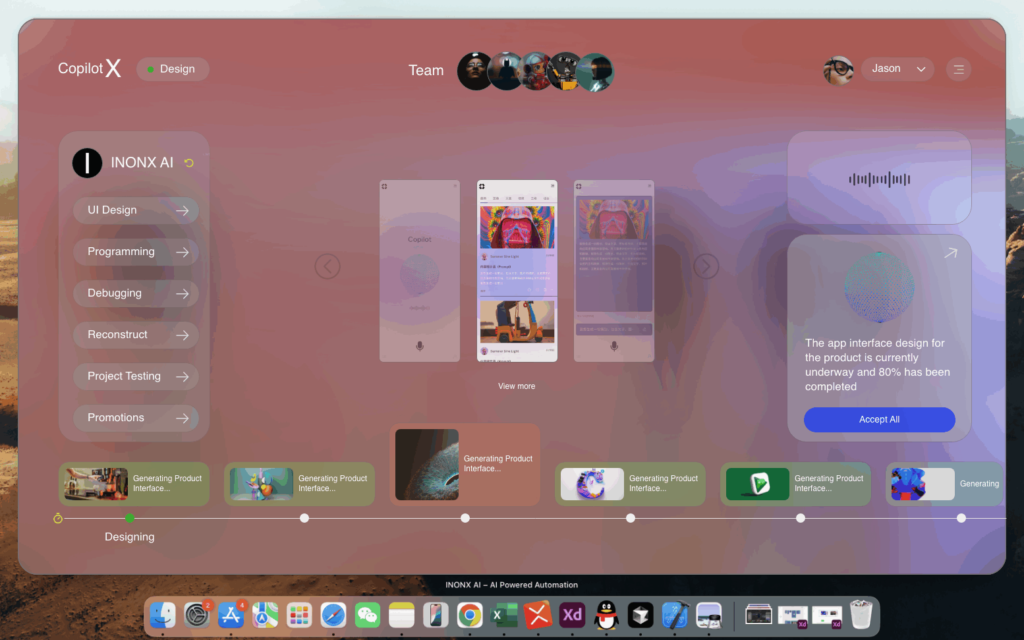

Proposed approaches to AGI often build on reinforcement learning algorithms, which let an agent learn from its environment through trial and error. However, there is growing concern about the implications of this approach, particularly in high-stakes scenarios where mistakes made during learning are costly. Recent collaboration between tech companies and academic institutions has led to the establishment of multidisciplinary research teams dedicated to exploring safer AI designs. One prominent example is OpenAI’s research initiatives, which emphasize creating systems that work in tandem with humans to enhance decision-making processes rather than replacing them.
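To make "learning through trial and error" concrete, here is a minimal sketch of an epsilon-greedy agent on a toy multi-armed bandit. It is an illustration only and is far simpler than anything used in AGI research. The function name `run_bandit`, the success probabilities, and the parameter values are all assumptions chosen for the example.

```python
import random


def run_bandit(success_probs, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy trial and error on a toy bandit (illustrative only).

    success_probs: hypothetical chance that each action yields a reward of 1.
    Returns the learned reward estimates and how often each action was tried.
    """
    rng = random.Random(seed)
    n_actions = len(success_probs)
    counts = [0] * n_actions        # number of times each action was tried
    estimates = [0.0] * n_actions   # running average reward per action

    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random action to gather information.
            action = rng.randrange(n_actions)
        else:
            # Exploit: pick the action that currently looks best.
            action = max(range(n_actions), key=lambda a: estimates[a])

        # The environment answers with a reward of 1 or 0.
        reward = 1.0 if rng.random() < success_probs[action] else 0.0

        # Incrementally update the running average for the chosen action.
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates, counts


if __name__ == "__main__":
    estimates, counts = run_bandit([0.1, 0.5, 0.9])
    print("estimated reward per action:", estimates)
    print("times each action was tried:", counts)
```

The agent is never told which action is best. It only sees rewards, which is also why the approach raises concern in high-stakes settings: exploration means deliberately trying actions that may turn out badly.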

In addition to AGI developments, the integration of AI in healthcare has seen significant advancements, particularly concerning patient safety. A recent report from the Institute of Medicine highlights how AI can improve patient outcomes by reducing medical errors and enhancing diagnostic accuracy. AI tools leveraging machine learning and natural language processing help healthcare professionals analyze large datasets, identify patterns, and make informed decisions swiftly. A notable instance is the implementation of AI algorithms in predicting patient deterioration in hospital settings. According to research published in JAMA Internal Medicine, hospitals utilizing AI-powered predictive analytics saw a 30% reduction in adverse events compared to those relying solely on traditional methods.

However, while the promise of AI in healthcare is vast, ethical considerations surrounding patient data privacy remain a pressing issue. AI applications must comply with stringent regulations such as the Health Insurance Portability and Accountability Act (HIPAA). Moreover, companies like Google Health are prioritizing transparency in their AI systems by collaborating with healthcare professionals to ensure the responsible use of AI technologies. Such partnerships promote a culture of accountability and support the development of AI applications that prioritize patient safety while respecting individual privacy rights.

Fairness in AI is an increasingly critical topic of debate, particularly as AI technologies become integrated into decision-making processes across sectors such as finance, criminal justice, and human resources. The potential for algorithmic bias to perpetuate existing inequalities has garnered attention from researchers, advocates, and policymakers. According to a report by the AI Now Institute, a growing number of organizations are advocating for regulatory frameworks aimed at ensuring fairness and accountability in AI systems. These frameworks would encompass guidelines on data sourcing, algorithmic transparency, and regular audits for potential biases in AI decision-making.

One significant step towards addressing fairness in AI is the adoption of fairness-aware machine learning techniques. These methods seek to identify and mitigate bias at various stages of the AI development process, from data collection to algorithm design. Researchers at Stanford University are leading the charge by developing toolkits that help practitioners assess their models for fairness and that provide actionable insights for correcting biases. The open, participatory nature of these toolkits allows for community involvement, ensuring that diverse perspectives are considered in the development of fair AI systems.
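As a rough illustration of what assessing a model for fairness can involve, the sketch below computes one common check, the demographic parity difference: the gap between groups in how often the model returns a positive decision. It is not taken from any toolkit mentioned above. The function names and the toy data are assumptions made for the example.

```python
from collections import Counter


def selection_rates(y_pred, groups):
    """Share of positive decisions (1) the model gives to each group."""
    totals = Counter(groups)
    positives = Counter(g for pred, g in zip(y_pred, groups) if pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred, groups):
    """Gap between the highest and lowest group selection rate.

    A value of 0 means every group is selected at the same rate.
    """
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Invented toy data: model decisions and a group label per person.
    y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

    print(selection_rates(y_pred, groups))
    print(demographic_parity_difference(y_pred, groups))
```

A large gap does not prove a model is unfair on its own, but it flags where a closer look at the data and the model is warranted. Demographic parity is only one of several fairness definitions, and they can conflict with one another.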

A recent case study illuminates the impact of biased AI systems in the justice system. A report by ProPublica revealed that an AI-based risk assessment tool used in certain courts demonstrated racial bias, disproportionately affecting minority communities. This revelation prompted a widespread call for reevaluation of AI tools used in high-stakes decision-making, sparking dialogue on the need for fairness in AI applications. In response, various organizations have launched initiatives to promote the responsible use of AI while also pushing for legislative measures that scrutinize the deployment of such technologies without rigorous testing for bias.
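One way to test a risk assessment tool for this kind of bias is to compare error rates across groups, for example how often people who did not go on to reoffend were nevertheless flagged as high risk. The sketch below shows that calculation on invented data. The function name, the labels, and the numbers are assumptions for illustration and do not reproduce any real analysis.

```python
def false_positive_rates(y_true, y_pred, groups):
    """Per-group false positive rate.

    y_true: 1 if the outcome actually occurred, 0 otherwise.
    y_pred: 1 if the tool flagged the person as high risk, 0 otherwise.
    Groups with no true negatives are left out of the result.
    """
    negatives = {}
    false_positives = {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:
            # Only people without the outcome can be false positives.
            negatives[group] = negatives.get(group, 0) + 1
            false_positives[group] = false_positives.get(group, 0) + (1 if pred == 1 else 0)
    return {g: false_positives[g] / negatives[g] for g in negatives}


if __name__ == "__main__":
    # Invented toy data: six people in each of two hypothetical groups.
    y_true = [0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 1, 1]
    y_pred = [1, 0, 0, 0, 1, 1, 1, 1, 0, 0, 1, 0]
    groups = ["a"] * 6 + ["b"] * 6

    print(false_positive_rates(y_true, y_pred, groups))
```

In this toy data, group "b" is wrongly flagged twice as often as group "a", even though both groups have the same underlying outcome rate. Disparities of this kind are what rigorous pre-deployment testing is meant to surface.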

As AI technologies continue to advance, the interconnection between AGI research trends, AI for patient safety, and fairness in AI underscores the complexity of the field. The potential benefits of AI are immense, offering the promise of enhanced decision-making, improved patient outcomes, and optimized resource allocation. However, with these advancements comes the responsibility of researchers, developers, and policymakers to ensure that AI systems are designed and deployed ethically.

In light of these developments, industry stakeholders highlight the need for collaborative strategies that address these challenges proactively. Educational initiatives that promote interdisciplinary knowledge sharing among technologists and ethicists are crucial in shaping responsible AI practices. Organizations such as the Partnership on AI are actively engaging various stakeholders, fostering conversations that drive awareness of both the capabilities and limitations of AI technologies.

In conclusion, the landscape of AI is rapidly evolving, presenting opportunities and challenges that require careful consideration. AGI research trends indicate promising directions towards collaborative intelligence, while AI advancements in healthcare are revolutionizing patient safety practices. However, persistent issues surrounding fairness in AI remain a critical challenge as systems increasingly influence decision-making across various societal domains. Prioritizing responsible research, ethical considerations, and accountability measures will be essential as we navigate this complex terrain in pursuit of a future where AI serves humanity equitably and justly.

Sources:

1. Future of Humanity Institute: AGI safety and alignment research trends.

2. Institute of Medicine: The impact of AI on patient safety.

3. JAMA Internal Medicine: AI in the prediction of adverse patient outcomes.

4. AI Now Institute: Regulatory frameworks for fairness in AI.

5. Stanford University: Fairness-aware machine learning guidelines.

6. ProPublica: Analysis of biased AI systems in the criminal justice domain.

7. Partnership on AI: Initiatives for responsible AI development.