AI is no longer an experiment. Teams across industries are embedding models into workflows to automate decisions, create content, and detect threats. Central to modern automation is the AI API — the contract between your systems and model-based intelligence. This article is a practical guide that walks beginners through the ideas, gives engineers architecture and operational advice, and helps product leaders evaluate ROI, vendors, and risks when building AI-driven automation systems.

What an AI API Really Means (Beginner Friendly)

Think of an AI API as a service endpoint that accepts inputs (text, images, events) and returns intelligent outputs (predictions, summaries, actions). It's like calling a digital consultant inside your application. For a customer support team, for example, an AI API can take a support ticket and return suggested reply drafts, priority labels, and recommended next steps. That single interaction replaces manual triage steps, lets the support team handle higher volume, and reduces response time.
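To make the "inputs in, structured outputs out" idea concrete, here is a minimal sketch of the support-ticket example. It assumes the API returns JSON with `priority`, `labels`, `reply_drafts`, and `next_steps` fields. Those field names, the `TicketTriage` type, and the allowed priority set are all hypothetical, not any provider's real format.

```python
import json
from dataclasses import dataclass, field
from typing import List


@dataclass
class TicketTriage:
    """Hypothetical structured result for the support-ticket example."""
    priority: str
    labels: List[str]
    reply_drafts: List[str]
    next_steps: List[str] = field(default_factory=list)


ALLOWED_PRIORITIES = {"low", "medium", "high", "urgent"}  # assumed label set


def parse_triage(raw_json: str) -> TicketTriage:
    """Turn the API's JSON text into a typed object, rejecting unknown priorities."""
    data = json.loads(raw_json)
    priority = str(data.get("priority", "")).lower()
    if priority not in ALLOWED_PRIORITIES:
        raise ValueError(f"unexpected priority: {priority!r}")
    return TicketTriage(
        priority=priority,
        labels=[str(x) for x in data.get("labels", [])],
        reply_drafts=[str(x) for x in data.get("reply_drafts", [])],
        next_steps=[str(x) for x in data.get("next_steps", [])],
    )


# Illustrative response body; field names are assumptions, not a real provider's format.
raw = ('{"priority": "High", "labels": ["billing"], '
       '"reply_drafts": ["Thanks for reaching out about your invoice."], '
       '"next_steps": ["Check refund eligibility"]}')
triage = parse_triage(raw)
print(triage.priority, triage.labels)  # high ['billing']
```

Your application code then works with `triage.priority` and friends instead of raw text, which is what makes the output usable for routing and automation.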

Why it matters in everyday scenarios

- Customer service: faster first responses and suggested resolutions.

- Marketing: generate personalized copy variants at scale.

- Operations: extract structured data from invoices to feed ERPs.

- Security: detect anomalous log lines or phishing attempts in real time.

Analogy: An AI API is like adding a specialist to every team — always available, fast, but requiring supervision and clear interfaces.

Architectural Patterns for Engineers

Designing robust automation around an AI API requires treating it like any other critical service: understand latency, throughput, failure modes, and security boundaries. Below are common architecture patterns and where they fit.

Synchronous calls for low-latency experiences

Use synchronous requests when you need immediate results: chat assistants, inline suggestions, or fraud checks during checkout. Optimize here for minimal network latency and model inference time. Techniques include model caching, response streaming, smaller specialized models for short calls, and colocating model inference with frontend services when feasible.
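On a synchronous path, the latency budget is only useful if it is enforced. The sketch below wraps any model call in a hard deadline and serves a fallback when the call is too slow or fails. `call_with_deadline` and `slow_suggestion` are hypothetical names, and the model call is simulated with `time.sleep`.

```python
import concurrent.futures
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Shared pool: a slow model call keeps running here after the caller gives up on it.
_POOL = concurrent.futures.ThreadPoolExecutor(max_workers=8)


def call_with_deadline(fn: Callable[[], T], timeout_s: float, fallback: T) -> T:
    """Return fn()'s result if it arrives within timeout_s, otherwise `fallback`."""
    future = _POOL.submit(fn)
    try:
        return future.result(timeout=timeout_s)
    except concurrent.futures.TimeoutError:
        # Deadline missed: serve the fallback rather than blocking the user.
        return fallback
    except Exception:
        # The call itself failed (auth, rate limit, model error): degrade the same way.
        return fallback


def slow_suggestion() -> str:
    time.sleep(0.5)  # simulates a model that is too slow for the latency budget
    return "model suggestion"


print(call_with_deadline(slow_suggestion, timeout_s=0.1, fallback="no suggestion"))  # no suggestion
print(call_with_deadline(lambda: "fast answer", timeout_s=1.0, fallback="no suggestion"))  # fast answer
```

For an inline suggestion the fallback can simply be "show nothing". For a checkout fraud check it might instead be a conservative rule-based decision.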

Asynchronous/event-driven for scale and resilience

Background tasks like batch classification, data enrichment, or long-running multi-step automations are better served by event-driven pipelines. Publish events (user upload, new record) to a messaging system and let worker pools consume and call the AI API. This pattern improves throughput and isolates spikes from user-facing latency.
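A minimal in-process sketch of this pattern follows. An `asyncio.Queue` stands in for the messaging system, and a fixed pool of workers consumes events and calls the model. `fake_ai_api` is a hypothetical placeholder for the real API call. In production the queue would be an external broker so that events survive restarts.

```python
import asyncio
import random
from typing import Dict, List


async def fake_ai_api(record: str) -> str:
    """Hypothetical stand-in for the AI API call; sleeps to mimic network and inference time."""
    await asyncio.sleep(random.uniform(0.01, 0.05))
    return f"label-for:{record}"


async def worker(queue: asyncio.Queue, results: Dict[str, str]) -> None:
    """Consume events forever; each one becomes one AI API call."""
    while True:
        record = await queue.get()
        try:
            results[record] = await fake_ai_api(record)
        except Exception as exc:
            # Keep the worker alive; real systems would retry or dead-letter the event.
            results[record] = f"error:{exc}"
        finally:
            queue.task_done()


async def run_pipeline(events: List[str], num_workers: int = 4) -> Dict[str, str]:
    queue: asyncio.Queue = asyncio.Queue(maxsize=100)  # bounded, so producers feel backpressure
    results: Dict[str, str] = {}
    workers = [asyncio.create_task(worker(queue, results)) for _ in range(num_workers)]
    for event in events:
        await queue.put(event)  # producer side: "user upload", "new record", ...
    await queue.join()  # returns once every event has been processed
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return results


if __name__ == "__main__":
    out = asyncio.run(run_pipeline([f"record-{i}" for i in range(10)]))
    print(len(out))  # 10
```

`num_workers` caps concurrent calls to the AI API, and the bounded queue absorbs spikes without letting them reach user-facing paths.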

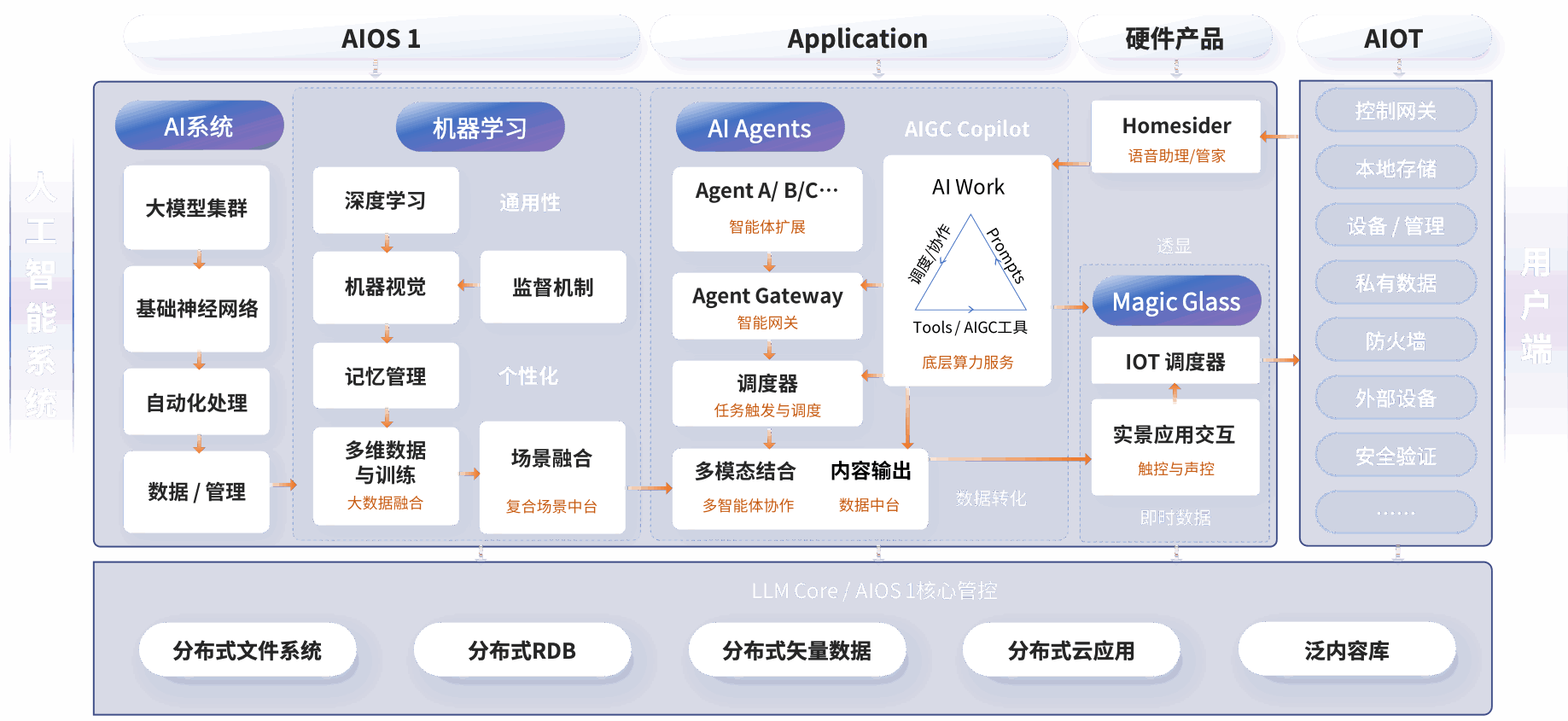

Agent and orchestration layers

Complex automations often combine multiple AI API calls, external system actions, and conditional logic. Use an orchestration layer (Temporal, Argo Workflows, or a purpose-built agent framework) to model these flows. This provides retries, state management, and visibility for long-running jobs.
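To show what such an engine provides, here is a deliberately small plain-Python sketch of two of its guarantees: retries with exponential backoff, and recorded step results so a resumed run skips finished work. It does not use Temporal's or Argo's APIs. `WorkflowState`, `run_step`, and `returns_triage` are hypothetical names, and state lives in memory rather than durable storage.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict


@dataclass
class WorkflowState:
    """Per-run state; an engine like Temporal persists this durably, here it is in memory."""
    completed: Dict[str, Any] = field(default_factory=dict)  # step name -> result


def run_step(state: WorkflowState, name: str, fn: Callable[[], Any],
             max_attempts: int = 3, base_delay_s: float = 0.05) -> Any:
    """Run one step with retries, skipping it if a previous run already finished it."""
    if name in state.completed:
        return state.completed[name]  # resume: do not redo finished work
    for attempt in range(1, max_attempts + 1):
        try:
            result = fn()
        except Exception:
            if attempt == max_attempts:
                raise  # give up; a real engine would surface this to operators
            time.sleep(base_delay_s * 2 ** (attempt - 1))  # exponential backoff
        else:
            state.completed[name] = result
            return result


def returns_triage(state: WorkflowState, classify: Callable[[], str],
                   issue_refund: Callable[[], str]) -> str:
    """Two-step flow: an AI classification, then a conditional external action."""
    label = run_step(state, "classify", classify)
    if label == "refund":
        run_step(state, "issue_refund", issue_refund)
    return label


calls = {"classify": 0}


def flaky_classify() -> str:
    calls["classify"] += 1
    if calls["classify"] == 1:
        raise RuntimeError("transient model error")  # first attempt fails
    return "refund"


state = WorkflowState()
print(returns_triage(state, flaky_classify, lambda: "refund-123"))  # refund
print(state.completed)  # {'classify': 'refund', 'issue_refund': 'refund-123'}
```

Re-running `returns_triage` with the same `state` returns immediately without calling the model or the refund system again. Durable engines provide that same property across process crashes.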

Integration Patterns and API Design

When integrating an AI API, consider interface design, versioning, and extensibility. The wrong contract can create technical debt quickly.

- Coarse vs fine-grained endpoints: Coarse endpoints return rich, aggregated outputs and reduce chatty interactions; fine-grained endpoints provide modularity but increase network overhead. Choose based on latency budgets and reuse patterns.

- Idempotency and deduplication: Attach request IDs and idempotency keys to critical operations so retries don't cause duplicate side effects.

- Versioning: Expose versioned endpoints and maintain backward compatibility. Use semantic versioning to signal behavior changes and feature flags for gradual rollout.

- Payload contracts: Define strict schema validation for inputs and outputs. This reduces unexpected failures when model outputs drift and simplifies downstream processing.
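The idempotency point is easy to get wrong, so here is a minimal in-memory sketch. Each key's action runs at most once, and retries get the stored result back. `IdempotentExecutor` and `idempotency_key` are hypothetical helpers. Deriving the key from the payload is only one option; many APIs instead have the client generate a unique key (such as a UUID) per logical operation and resend it on retries, which avoids merging two intentional, identical requests.

```python
import hashlib
import json
import threading
from typing import Any, Callable, Dict


class IdempotentExecutor:
    """Runs each idempotency key's action at most once and replays the stored result.

    In-memory sketch: production code would use a shared store (database or cache)
    with an expiry, and finer-grained locking than one global lock.
    """

    def __init__(self) -> None:
        self._results: Dict[str, Any] = {}
        self._lock = threading.Lock()

    def execute(self, key: str, action: Callable[[], Any]) -> Any:
        with self._lock:
            if key in self._results:
                return self._results[key]  # duplicate or retry: no second side effect
            result = action()
            self._results[key] = result
            return result


def idempotency_key(operation: str, payload: Dict[str, Any]) -> str:
    """Deterministic key from the operation name plus canonical JSON of the payload."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(f"{operation}:{canonical}".encode("utf-8")).hexdigest()


sent = []
executor = IdempotentExecutor()
key = idempotency_key("send_reply", {"ticket": 42, "draft": "Thanks, refund issued."})


def send_reply() -> str:
    sent.append("email")  # the side effect we must not repeat
    return "sent"


executor.execute(key, send_reply)
executor.execute(key, send_reply)  # e.g. a client retry after a network timeout
print(len(sent))  # 1
```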

Deployment, Scaling, and Cost Trade-offs

There’s a balance between managed AI APIs (cloud-hosted model providers) and self-hosted model serving. Each choice affects latency, control, cost, and operational burden.

Managed provider pros and cons

Providers like OpenAI, Anthropic, and Hugging Face's hosted endpoints offer fast time-to-value, ongoing model improvements, and, depending on the plan, SLA-backed endpoints. They remove operational tasks like model upgrades and inference optimization. The trade-offs are cost per request, less control over data residency, and potential vendor lock-in.

Self-hosting pros and cons

Running models yourself with serving frameworks such as NVIDIA Triton Inference Server or Seldon, typically on Kubernetes, gives greater control over cost, latency (through hardware choice and optimization), and privacy. However, it requires expertise in model serving, scaling GPUs/accelerators, and ongoing performance tuning.

Hybrid approaches

Many teams use hybrid strategies: providers for edge cases and experimentation, self-hosting for predictable high-volume workloads. Architect with abstraction layers so you can switch endpoints without changing business logic.
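One way to build that abstraction layer is a small interface plus a router that picks a backend per feature from configuration. In this sketch, `TextClassifier`, `HostedClassifier`, `SelfHostedClassifier`, and `InferenceRouter` are hypothetical names. The adapters contain placeholder logic where a real implementation would call a provider SDK or an in-house serving endpoint.

```python
from typing import Dict, Protocol


class TextClassifier(Protocol):
    """The only contract business logic is allowed to depend on."""
    def classify(self, text: str) -> str: ...


class HostedClassifier:
    """Hypothetical adapter for a managed provider; the real SDK call is omitted."""
    def classify(self, text: str) -> str:
        # Placeholder logic standing in for a remote call.
        return "refund" if "refund" in text.lower() else "other"


class SelfHostedClassifier:
    """Hypothetical adapter for an in-house serving endpoint; the HTTP call is omitted."""
    def classify(self, text: str) -> str:
        return "refund" if "refund" in text.lower() else "other"


class InferenceRouter:
    """Chooses a backend per feature from configuration, not from business code."""
    def __init__(self, default: TextClassifier, overrides: Dict[str, TextClassifier]) -> None:
        self.default = default
        self.overrides = overrides

    def for_feature(self, feature: str) -> TextClassifier:
        return self.overrides.get(feature, self.default)


def categorize_return(router: InferenceRouter, note: str) -> str:
    # Business logic never names a provider; swapping backends is a config change.
    return router.for_feature("returns_triage").classify(note)


router = InferenceRouter(
    default=HostedClassifier(),
    overrides={"returns_triage": SelfHostedClassifier()},  # high-volume path moved in-house
)
print(categorize_return(router, "Customer requests a refund"))  # refund
print(type(router.for_feature("returns_triage")).__name__)  # SelfHostedClassifier
```

Because both adapters satisfy the same interface, moving a workload between a provider and self-hosting touches only the `overrides` mapping.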

Observability and Operational Metrics

Monitoring is essential. Build dashboards and alerts around signals that reflect both service health and model fidelity.

- Latency percentiles (P50, P95, P99) for user-facing paths.

- Throughput (requests per second) and concurrency limits.

- Success and error rates, with error categorization (auth, rate limit, model failure).

- Cost per 1k requests and cost attribution by feature or customer.

- Model-specific metrics: confidence distributions, label drift, and hallucination frequency.

- Business KPIs: funnel impact, time saved per task, and false positive/negative rates in classification use cases.

Tools: instrument with OpenTelemetry for traces, Prometheus/Grafana for metrics, and a logging/analytics pipeline that retains payload hashes (not raw personal data) for auditing.
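Several of these signals reduce to simple arithmetic, sketched below: nearest-rank latency percentiles, cost per 1k requests, and an audit fingerprint of a payload. The fingerprint uses a keyed hash (HMAC-SHA-256) rather than a plain hash, because unkeyed hashes of short or predictable inputs such as phone numbers can be reversed by brute force. The function names, sample numbers, and key are illustrative. A real key belongs in a secrets manager.

```python
import hashlib
import hmac
import math
from typing import List


def percentile(samples: List[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def cost_per_1k(total_cost: float, requests: int) -> float:
    """Spend normalized per 1,000 requests, for attribution by feature or customer."""
    return total_cost * 1000 / requests


def payload_fingerprint(payload: str, key: bytes) -> str:
    """Keyed SHA-256 (HMAC) of a payload, so audit logs can match records without raw data."""
    return hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()


latencies_ms = [120, 95, 310, 101, 88, 450, 99, 130, 105, 97]
print(percentile(latencies_ms, 50), percentile(latencies_ms, 95), percentile(latencies_ms, 99))  # 101 450 450
print(cost_per_1k(total_cost=3.20, requests=800))
print(payload_fingerprint("ticket 42: refund request", key=b"rotate-me")[:16])
```

With only ten samples, P95 and P99 both land on the maximum. Tail percentiles need large windows, or histogram-based metrics like those Prometheus collects, before they mean much.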

Security, Governance, and AI-driven Cybersecurity

Security is both a use case and a risk. Adopting an AI API opens new attack vectors: prompt injection, data leakage, and model manipulation. At the same time, AI APIs enable advanced defenses such as automated threat detection, malware triage, and anomaly hunting.

Practical defenses

- Authentication and authorization — use mTLS, OAuth, and per-service tokens with minimal scopes.

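- Input validation and sanitization to reduce the risk of prompt injection or command-like inputs. Filtering alone cannot fully prevent prompt injection, so also restrict which actions model outputs are allowed to trigger.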

- Data controls — avoid sending PII to third-party endpoints. Use data minimization, tokenization, or local anonymization before calling external APIs.

- Rate limits and circuit breakers to guard against abuse and runaway costs.

- Audit trails that record who called what, with hashes of inputs/outputs for forensic inspection.
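For the data-controls point, the simplest form of local anonymization is to replace likely PII with typed placeholders before text leaves your network. The two regular expressions below are crude on purpose. They miss many formats, and the phone pattern will also match some dates and numeric IDs. A production system would use a dedicated PII detection tool and, where the original values are needed later, reversible tokenization.

```python
import re

# Deliberately crude patterns for illustration only: they miss many PII formats
# and can over-match (the phone pattern also catches some dates and IDs).
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace likely PII with typed placeholders before text leaves your network."""
    text = EMAIL.sub("[EMAIL]", text)  # emails first, so digits inside them are not treated as phones
    text = PHONE.sub("[PHONE]", text)
    return text


message = "Contact jane.doe@example.com or +1 (555) 123-4567 about order 1234."
print(redact(message))
# Contact [EMAIL] or [PHONE] about order 1234.
```

The typed placeholders keep enough context for the model to reason about the ticket while the raw identifiers stay in-house.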

Using models for cybersecurity

AI-driven cybersecurity systems can process telemetry, classify threat patterns, and propose enrichment actions faster than human analysts. But tuning models for adversarial resilience is crucial: attackers can craft inputs to evade detection or poison training data. Combine ML signals with deterministic rules, threat intelligence feeds, and human-in-the-loop reviews.
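The sketch below shows one way to combine these signals in a single decision. Deterministic evidence can act on its own, a high model score blocks only when a rule corroborates it, and anything uncertain goes to a person. The `Alert` fields, the `known_malware_hash` rule name, and both thresholds are hypothetical and would need tuning against real data.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Alert:
    source_ip: str
    ml_score: float                 # 0..1 from a hypothetical detection model
    matched_rules: List[str] = field(default_factory=list)  # deterministic rule hits
    on_threat_feed: bool = False    # source seen in a threat-intelligence feed


def decide(alert: Alert, block_at: float = 0.9, review_at: float = 0.5) -> str:
    """Return 'block', 'human_review', or 'allow'. Thresholds are illustrative."""
    # Deterministic evidence acts on its own, so an input crafted to evade
    # the model can still be caught by a rule or a feed match.
    if alert.on_threat_feed or "known_malware_hash" in alert.matched_rules:
        return "block"
    # A high model score blocks only when a rule corroborates it.
    if alert.ml_score >= block_at and alert.matched_rules:
        return "block"
    # Uncorroborated or borderline signals go to an analyst.
    if alert.ml_score >= review_at or alert.matched_rules:
        return "human_review"
    return "allow"


print(decide(Alert("10.0.0.5", ml_score=0.95)))                                 # human_review
print(decide(Alert("10.0.0.5", ml_score=0.95, matched_rules=["brute_force"])))  # block
print(decide(Alert("203.0.113.7", ml_score=0.1, on_threat_feed=True)))          # block
print(decide(Alert("10.0.0.9", ml_score=0.2)))                                  # allow
```

An attacker who fools the model alone therefore gets an analyst review, not a silent pass or an automatic block.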

Implementation Playbook: From Idea to Production

This step-by-step plan helps teams go from concept to live automation without common pitfalls.

- Define the outcome: Start with the business problem and success metrics—e.g., reduce average ticket resolution time by 30%.

- Prototype with a managed AI API: Use a hosted endpoint for rapid iteration. Focus on the integration contract and UX rather than perfecting model tuning.

- Instrument observability from day one: Log request metadata, outputs, and business impact, hashing or redacting sensitive payloads. Track latency and error budgets.

- Introduce an orchestration layer: For workflows that require retries, long-running tasks, or state, deploy a workflow engine early to avoid fragile ad-hoc logic.

- Plan data governance: Map data flows and decide what stays in-house vs. what goes to external providers. Build a masking/anonymization step if needed.

- Scale and optimize: Move high-volume, predictable workloads to self-hosted inference if cost-effective. Implement batching, model distillation, or quantization to reduce compute cost.

- Operationalize security: Put rate limits, anomaly detection, and incident response playbooks in place. Test prompt injection and adversarial scenarios.

- Measure ROI and refine: Use A/B tests, guardrails, and feedback loops. Monitor model drift and retrain or switch models when necessary.
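For the rate-limit step, a token bucket is a common choice: it allows short bursts up to a capacity and refills at a steady rate. Below is a minimal single-process sketch with an injectable clock so it can be tested deterministically. `TokenBucket` is a hypothetical helper. You would typically keep one bucket per caller or tenant, and `cost` could be set to an estimate of model tokens per request to budget spend rather than request count.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate_per_s` tokens per second."""

    def __init__(self, capacity: float, rate_per_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate_per_s = rate_per_s
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def try_acquire(self, cost: float = 1.0) -> bool:
        """Spend `cost` tokens if available; return False (reject or queue) otherwise."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate_per_s)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


fake_now = [0.0]
bucket = TokenBucket(capacity=3, rate_per_s=1.0, clock=lambda: fake_now[0])
print([bucket.try_acquire() for _ in range(4)])  # [True, True, True, False]
fake_now[0] += 1.0
print(bucket.try_acquire())  # True
```

In a multi-instance deployment the bucket state would need to live in a shared store so that all replicas enforce the same limit.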

Vendor Comparison and Market Signals

Vendors and open-source options span a spectrum. Consider:

- Large cloud providers and model-as-a-service: Strong SLAs, rapid updates, and ecosystem integration—best for fast experiments and low operational overhead.

- Specialized providers: Offer domain-tuned models (finance, healthcare) and compliance features.

- Open-source & community projects: Hugging Face models, LangChain for orchestration patterns, and Temporal for durable workflows—excellent for customization but require ops resources.

Recent trends include the rise of agent frameworks, more modular model calls, and standardized interfaces for tool use by models. Watch for new interoperability standards and regulatory guidance around model transparency and data use.

Case Study Snapshot

A mid-size e-commerce company used an AI API to automate product categorization and customer returns triage. They started with a hosted API to validate the impact and saw a 40% reduction in manual classification time. After six months, they moved high-volume classification to a self-hosted model serving cluster, reducing per-request costs by 60% while maintaining latency SLAs. Key lessons: start managed, instrument business metrics early, and abstract the inference layer so that swapping providers stays straightforward.

Common Pitfalls and How to Avoid Them

- No observability: You can’t improve what you don’t measure. Instrument both technical and business metrics.

- Tight coupling to a provider: Avoid hard-coding provider-specific formats into business logic.

- Skipping governance: Regulatory compliance and data privacy must be addressed up front, not retrofitted.

- Ignoring failure modes: Add fallbacks for model outages and clearly surface confidence levels to users.
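A circuit breaker is one common way to implement such fallbacks. After a run of consecutive failures it stops calling the model for a cool-down period and serves a degraded answer instead. After the cool-down it lets one trial call through. The sketch below is a minimal single-threaded version with an injectable clock. `CircuitBreaker` and `rule_based_fallback` are hypothetical names, and the fallback marks its answer as low confidence so the UI can say so.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Skips a failing model for `reset_after_s` seconds after `max_failures` consecutive errors."""

    def __init__(self, max_failures: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                return fallback()  # open: do not touch the model at all
            # Half-open: allow one trial call; a single failure re-opens the circuit.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0
        return result


def model_down() -> dict:
    raise RuntimeError("model outage")


def rule_based_fallback() -> dict:
    # Degraded answer, flagged so the UI can show that confidence is low.
    return {"label": "needs_review", "confidence": "low"}


now = [0.0]
breaker = CircuitBreaker(max_failures=2, reset_after_s=10.0, clock=lambda: now[0])
print(breaker.call(model_down, rule_based_fallback))  # fallback; first failure
print(breaker.call(model_down, rule_based_fallback))  # fallback; circuit opens
now[0] = 11.0  # cool-down elapsed: the next call is a trial
print(breaker.call(lambda: {"label": "refund", "confidence": "high"}, rule_based_fallback))
```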

Looking Ahead

The next generation of automation will blend specialized local models, federated learning, and more standardized AI API contracts. Expect better model explainability tools, tighter integration frameworks, and stronger regulatory scrutiny around data residency and model behavior. Organizations that pair disciplined engineering practices with clear product metrics will extract the most value while minimizing operational and security risk.

Key Takeaways

AI APIs unlock powerful automation, but success requires more than hooking a model into your stack. Start with clear business outcomes, choose an integration pattern that matches latency and throughput needs, instrument end-to-end observability, and enforce governance. For engineers, plan for idempotency, retries, and orchestration; for product teams, measure impact and costs; for security teams, balance the promise of AI-driven cybersecurity against new adversarial risks. With the right architecture and monitoring, AI APIs become reliable building blocks that scale human expertise across systems.